Exploring the Potent Magic of PaLM 2: A Definitive Guide

Over the years, Google has been at the forefront of natural language processing research, constantly pushing the boundaries of language understanding and generation. Each iteration of their LLMs (Large Language Models) has brought significant advancements in capturing context, understanding complex language structures, and improving the performance of models.

The journey of Google’s large language models started with BERT in 2018. It was followed by XLNet (2019), GLaM (2021), LaMDA (2022), PaLM (2022), and Minerva (2022). PaLM 2, released in 2023, is the latest and most advanced LLM to join the list.

PaLM 2 represents the pinnacle of this progress, leveraging many innovative techniques and extensive training data to achieve unparalleled accuracy, fluency, and context awareness. This blog post covers everything you need to know about PaLM 2, including its architecture, training methods, and real-world applications.

Let’s begin with understanding what PaLM 2 is capable of and how it was built.

PaLM 2, in a nutshell

PaLM 2 (Pathways Language Model 2) is an advanced language model with remarkable capabilities. This section explores how it came about, how it was built, and what it can do. We’ll also learn how PaLM 2 was built to ensure responsible development and use of AI.

What is PaLM 2?

PaLM 2 is a large language model developed by Google that is based on the Transformer architecture and the Pathways system. It is trained on a massive dataset of text and code, which gives it a vast knowledge base and the ability to understand and respond to natural language questions, generate text, code, and other creative content, and translate languages. With PaLM 2, Google aims to bring AI capabilities to its products, including Gmail, Google Docs, and Bard.

According to Sundar Pichai, Google’s CEO, PaLM 2 represents a groundbreaking advancement in language modeling. He stated, “PaLM 2 demonstrates Google’s commitment to pushing the boundaries of AI research. With its unprecedented scale, PaLM 2 sets new standards for natural language understanding.”

Today, we’re introducing our latest PaLM model, PaLM 2, which builds on our fundamental research and our latest infrastructure. It’s highly capable at a wide range of tasks and easy to deploy. We’re announcing more than 25 products and features powered by PaLM 2 today. #GoogleIO

— Google (@Google) May 10, 2023

PaLM 2 is the latest step in our decade-long journey to bring AI in responsible ways to billions of people. It builds on progress made by two world-class research teams, the Brain team and @DeepMind.

— Google (@Google) May 10, 2023

PaLM 2 is rooted in Google’s conscientious approach to developing and using AI responsibly. Thorough evaluations were conducted to scrutinize its capabilities, potential biases, and potential negative impacts, both in research and when integrated into various products. This meticulous process ensures that PaLM 2 aligns with Google’s commitment to ethical AI deployment.

Moreover, Google researchers believe that “bigger is not always better.” In fact, instead of focusing on more parameters in PaLM 2, they have focused on integrating techniques like Reinforcement Learning from Human Feedback (RLHF), compute-optimal scaling, and few-shot learning. As a result, PaLM 2 can better grasp the intricacies of language and context, leading to improved performance across various natural language processing and reasoning tasks.

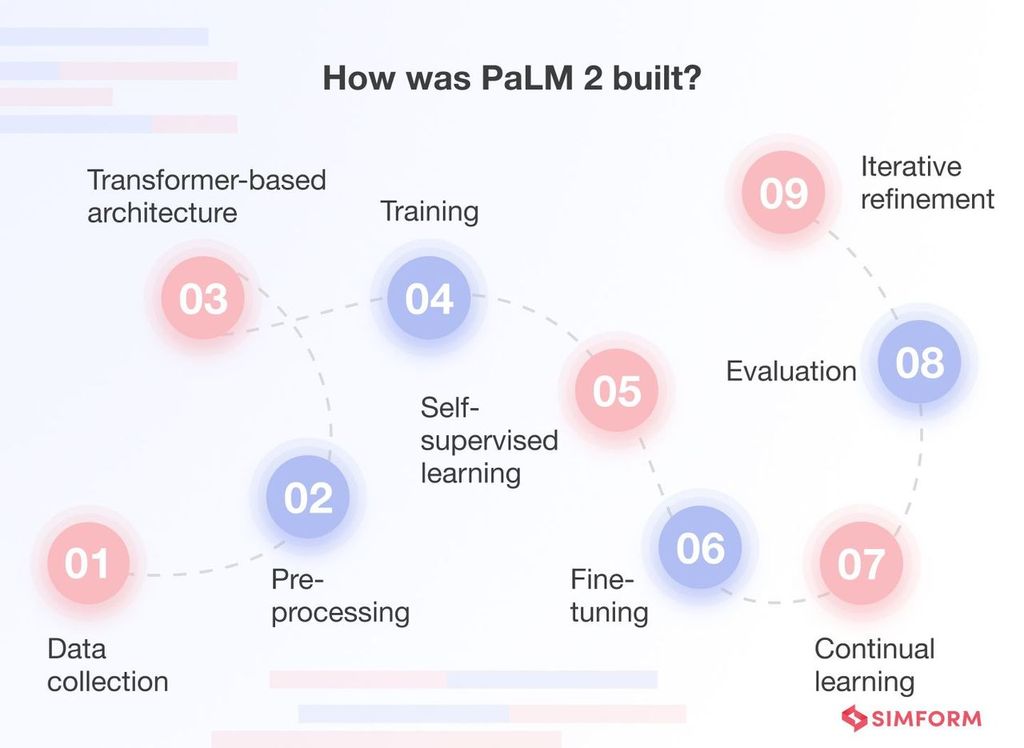

How was PaLM 2 built?

Building LLMs like PaLM 2 requires significant computational resources, data, and expertise in deep learning and natural language processing applications. Google used a combination of powerful hardware, data collection, model architecture design, and training techniques to develop PaLM 2.

- Data collection: Google researchers collected a vast and diverse text dataset from the internet to train PaLM 2 effectively. This corpus included articles, books, websites, and other textual content.

- Pre-processing: The collected data underwent extensive preprocessing to clean and tokenize the text. Tokenization involves breaking down the text into smaller units, such as words or subwords, to facilitate efficient training.

- Model architecture: PaLM 2 was built using the Transformer architecture, a deep neural network for sequence-to-sequence tasks. Transformers have been widely successful in natural language processing due to their ability to handle long-range dependencies in text.

- Training: The researchers employed massive computational power to train PaLM 2 on the preprocessed dataset. This training process involved optimizing billions of parameters within the AI model to learn meaningful patterns and representations from the data.

- Self-supervised learning: PaLM 2 was trained using self-supervised learning, a technique where the model learns from the data without explicit human annotations. It involved predicting missing words or phrases within sentences, enabling the AI model to understand the context and semantics of the text.

- Fine-tuning: After the initial pretraining, the model was fine-tuned on specific downstream tasks, such as language understanding and generation tasks. Fine-tuning helps adapt the general language model to perform well on specific tasks by exposing it to labeled data and optimizing task-specific objectives.

- Continual learning: The researchers employed various learning techniques to enable PaLM 2 to update its knowledge and adapt to new data continuously. It ensured the model remained up-to-date and could handle evolving language patterns and concepts.

- Evaluation: The model’s performance was evaluated on various benchmarks and datasets throughout development to measure its accuracy and generalization capabilities.

- Iterative refinement: The researchers conducted multiple iterations of the training, fine-tuning, and evaluation process to improve the model’s performance. Each iteration involved tweaking hyperparameters, exploring novel techniques, and analyzing results to achieve state-of-the-art language understanding capabilities.
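To make the tokenization and self-supervised learning steps above more concrete, here is a minimal sketch of how a training example can be derived from raw text without any human labels. It is an illustration only, not Google’s pipeline: the whitespace tokenizer stands in for a real subword tokenizer, and the `tokenize` and `make_masked_example` helpers and the `[MASK]` placeholder are hypothetical names chosen for this example.

```python
def tokenize(text):
    """Split text into lowercase word tokens.

    Assumption: a whitespace split stands in for the subword
    tokenization a production model would use.
    """
    return text.lower().split()


def make_masked_example(tokens, start, length, mask_token="[MASK]"):
    """Hide tokens[start:start + length] and return (model_input, target).

    The hidden span becomes the prediction target, so the "label"
    comes from the text itself rather than from a human annotator.
    """
    if start < 0 or length < 1 or start + length > len(tokens):
        raise ValueError("masked span must lie inside the token sequence")
    target = tokens[start:start + length]
    model_input = tokens[:start] + [mask_token] + tokens[start + length:]
    return model_input, target


tokens = tokenize("Transformers handle long-range dependencies in text")
model_input, target = make_masked_example(tokens, start=2, length=2)
print(model_input)  # ['transformers', 'handle', '[MASK]', 'in', 'text']
print(target)       # ['long-range', 'dependencies']
```

During training, the model would see `model_input` and be optimized to reproduce `target`. Repeating this over a huge corpus is what lets it pick up context and semantics.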

PaLM 2 surpasses its predecessor, PaLM, through several crucial enhancements and optimizations that significantly elevate its performance and capabilities:

- Compute-optimal scaling: PaLM 2 uses compute-optimal scaling, which means growing model size and training dataset size in roughly equal proportion as the compute budget grows, rather than spending extra compute on parameters alone. This approach makes PaLM 2 smaller than PaLM while boosting efficiency and performance. It also means faster inference, fewer parameters to maintain, and lower serving costs.

- Improved dataset mixture: The first version of PaLM mostly used English text for pre-training, while PaLM 2 improved that aspect by including a diverse mix of languages, programming, math, science, and web content in its corpus.

- Updated architecture: PaLM 2 boasts an updated architecture and underwent training across various tasks. This comprehensive approach empowers PaLM 2 to grasp various dimensions of language with excellent proficiency.
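The idea behind compute-optimal scaling can be sketched with a few lines of arithmetic. The example below rests on two assumptions that come from the broader scaling-law literature rather than from PaLM 2’s published numbers: training compute is approximated as C ≈ 6 × N × D (N parameters, D training tokens), and the tokens-per-parameter ratio is held fixed at 20. The function name is hypothetical.

```python
import math


def compute_optimal_allocation(compute_flops, tokens_per_param=20.0):
    """Split a training FLOP budget between parameters and tokens.

    Assumptions (not PaLM 2's actual coefficients):
      * compute is approximated as C = 6 * N * D
      * tokens and parameters keep a fixed ratio D = tokens_per_param * N
    Solving both equations gives N = sqrt(C / (6 * tokens_per_param)).
    """
    if compute_flops <= 0 or tokens_per_param <= 0:
        raise ValueError("compute_flops and tokens_per_param must be positive")
    n_params = math.sqrt(compute_flops / (6.0 * tokens_per_param))
    n_tokens = tokens_per_param * n_params
    return n_params, n_tokens


small = compute_optimal_allocation(1e21)
large = compute_optimal_allocation(4e21)

# Quadrupling the compute budget doubles both the model and the dataset.
print(f"parameter growth: {large[0] / small[0]:.2f}x")
print(f"token growth:     {large[1] / small[1]:.2f}x")
```

Under these assumptions, extra compute is shared between a bigger model and more data. That is how a model can end up smaller than its predecessor while being trained on far more tokens.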

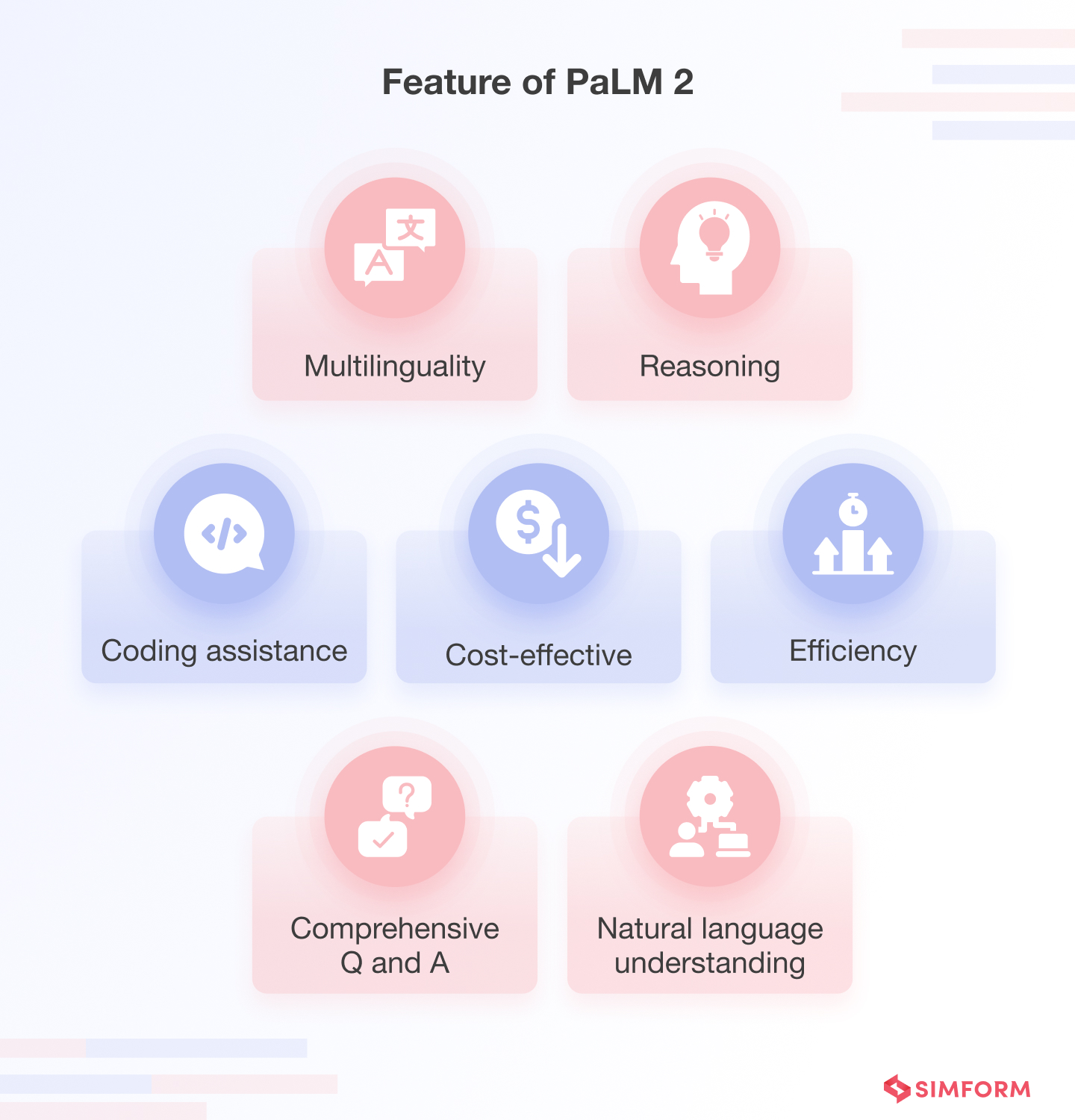

PaLM 2 features and capabilities

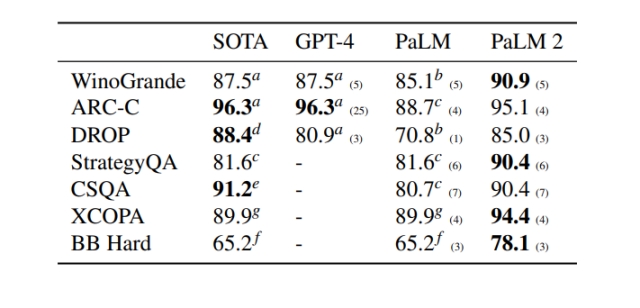

PaLM 2 is faster, highly efficient, and has a lower serving cost than other LLMs such as PaLM and GPT-4. In the 92-page PaLM 2 technical report, researchers compared PaLM 2, PaLM, and GPT-4 on various reasoning tests, such as WinoGrande, ARC-C, DROP, StrategyQA, CSQA, XCOPA, and BIG-Bench Hard.

They found that:

- PaLM 2 outperforms PaLM in every reasoning test conducted by researchers, showing that it is more capable and advanced than its predecessor.

- PaLM 2 also performs better than GPT-4 on the reasoning tests where results for both models are reported, barring ARC-C, where GPT-4 has a slight edge.

Let’s look at the features and capabilities of PaLM 2 in more detail.

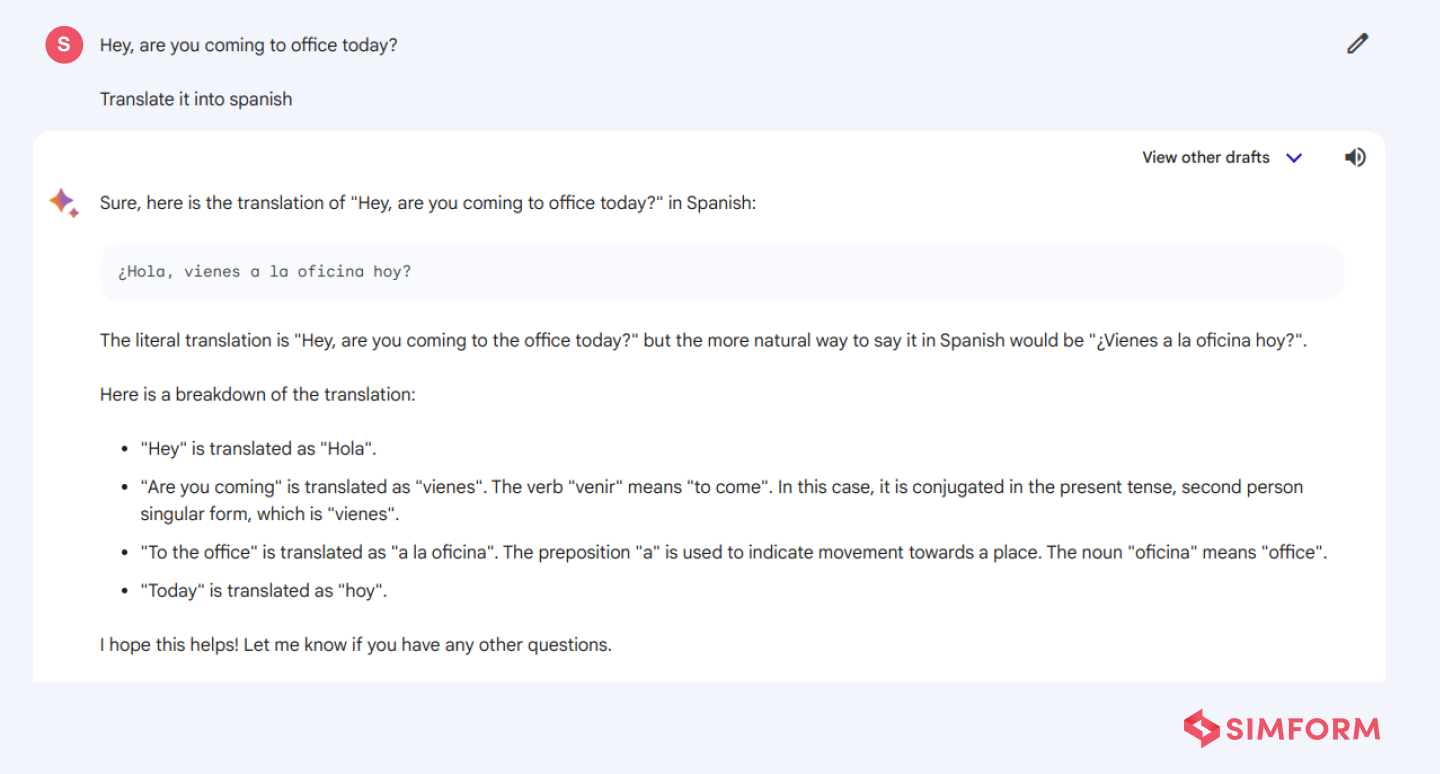

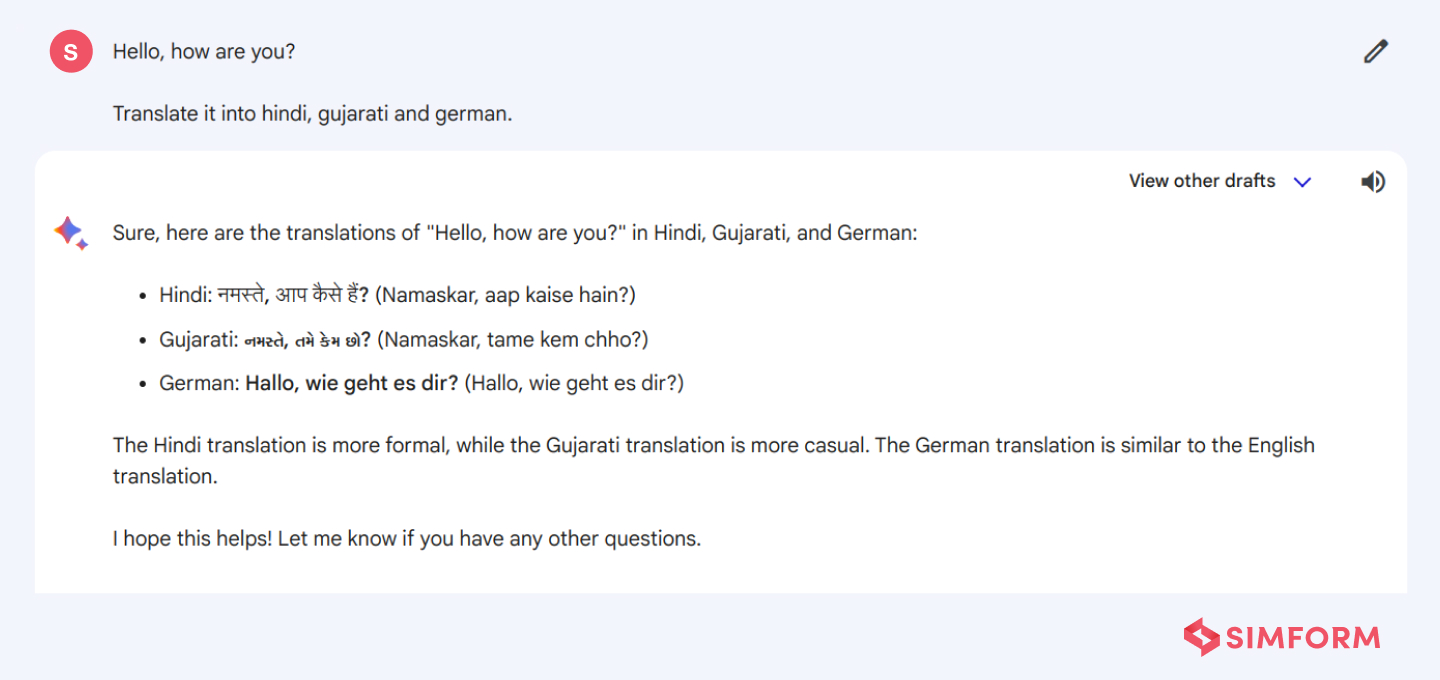

1. Multilingualism

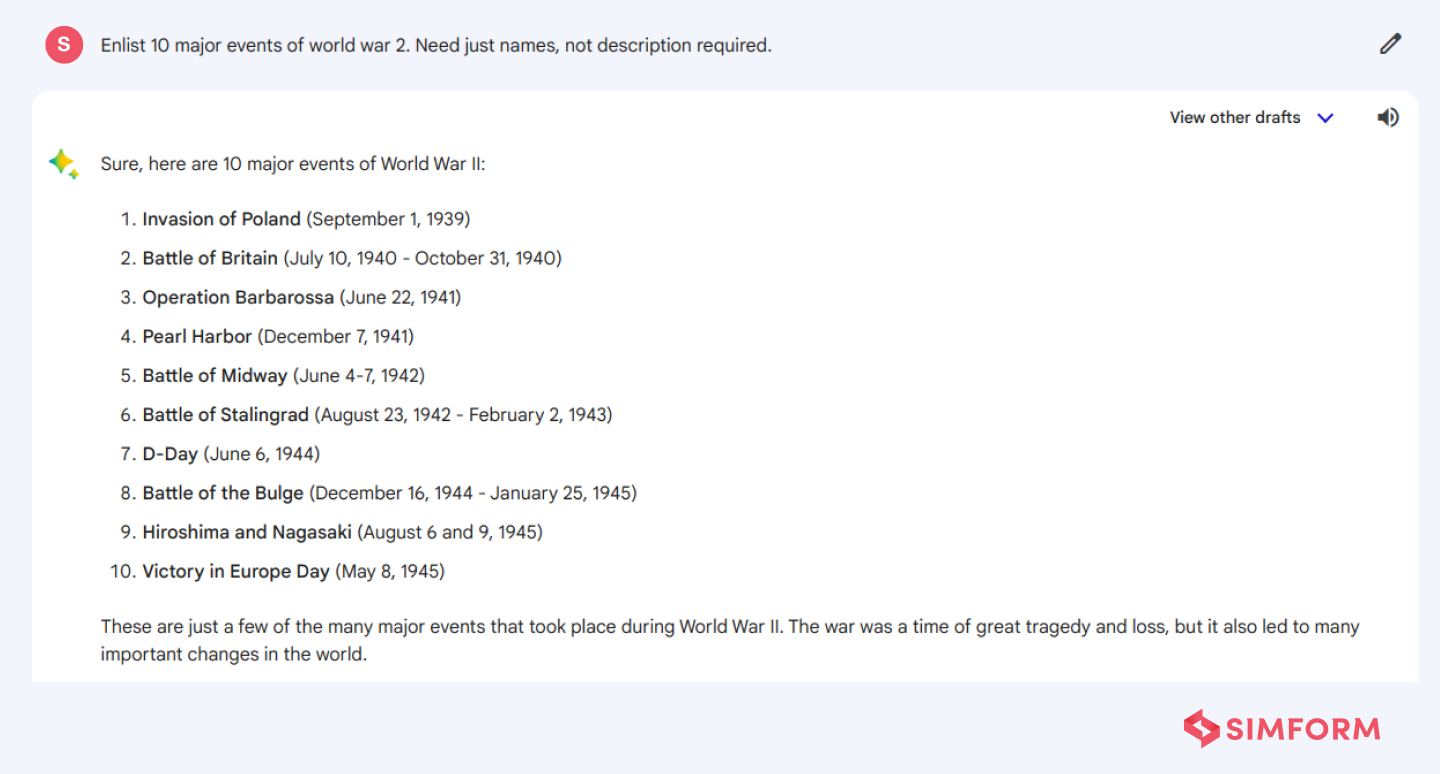

PaLM 2 exhibits multilingual capabilities, meaning it can understand and generate text in multiple languages. Because it has been trained on diverse language data, it can adapt to different linguistic contexts and generate coherent text in various languages.

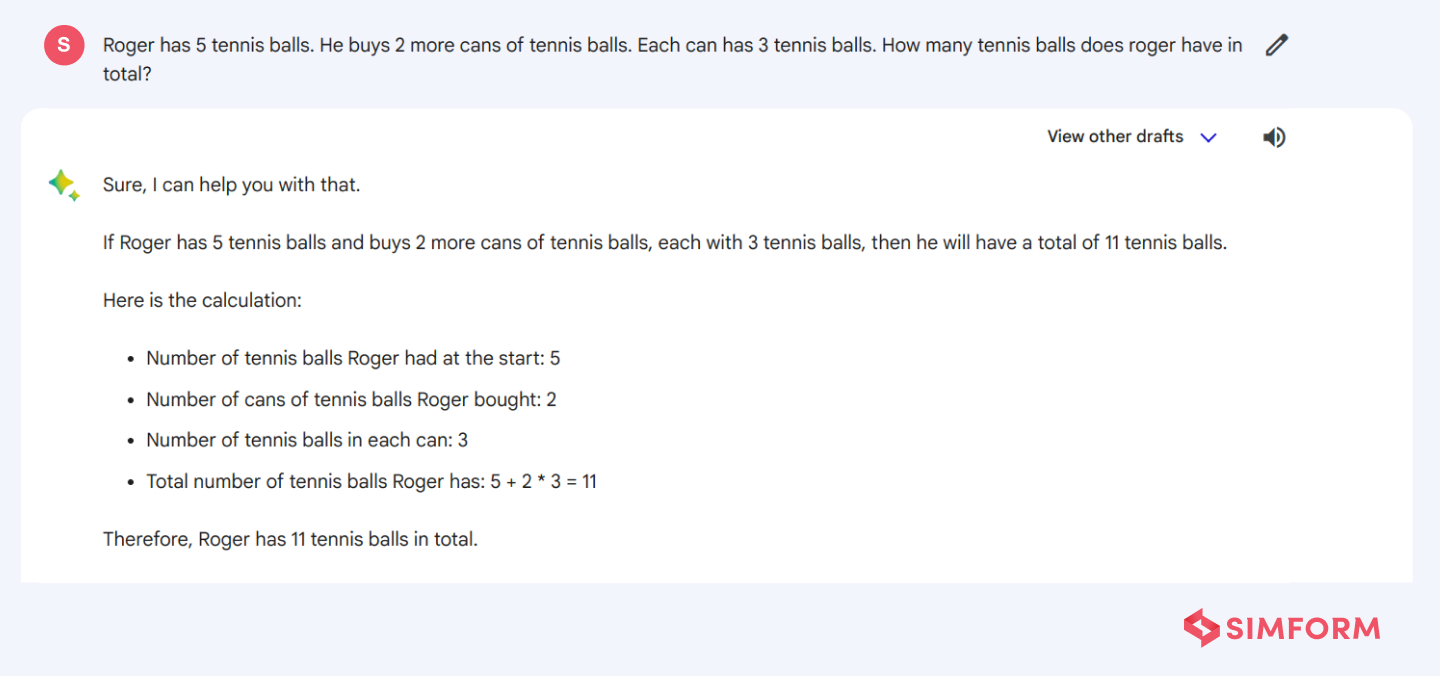

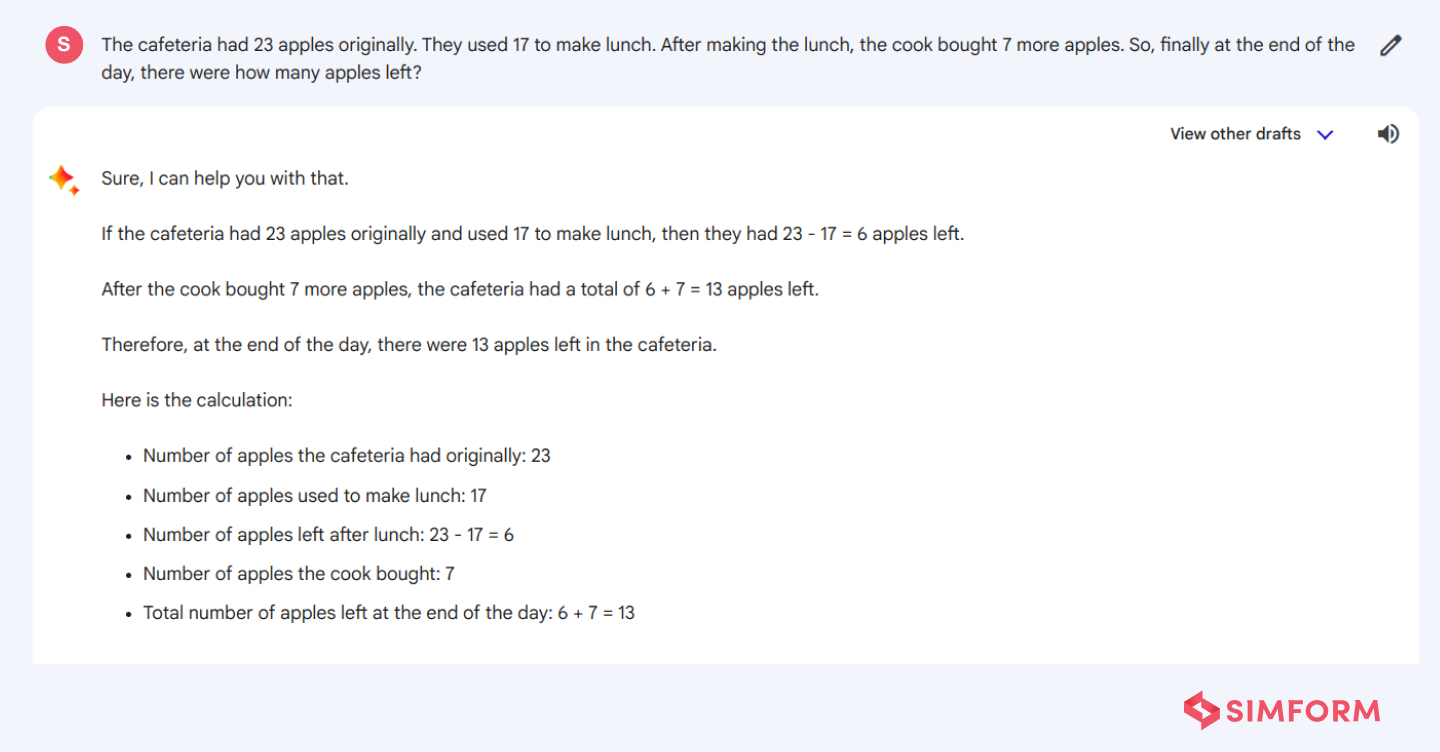

2. Reasoning

PaLM 2 demonstrates a form of reasoning through its understanding of context and logical inference. While not equivalent to human reasoning, it can use its learned patterns and knowledge to make deductions, provide explanations, and generate coherent text that follows logical progressions.

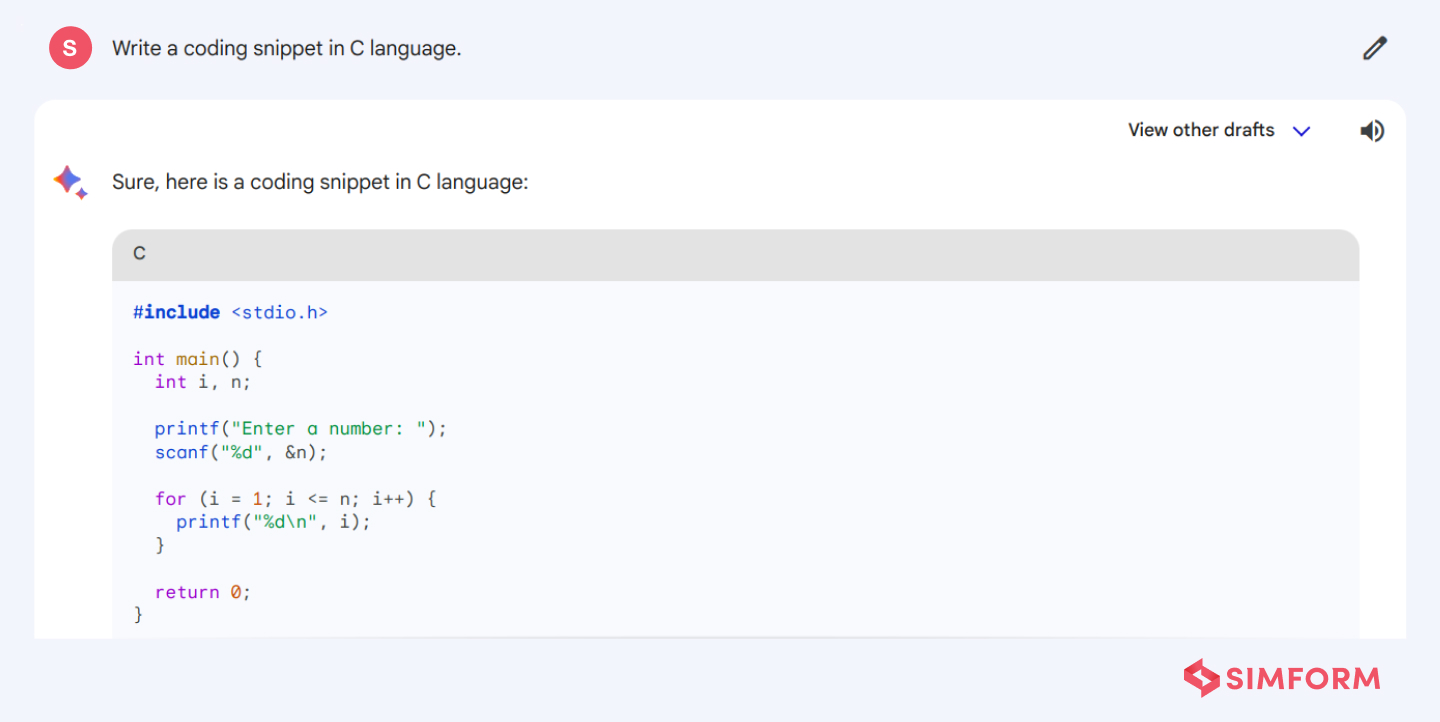

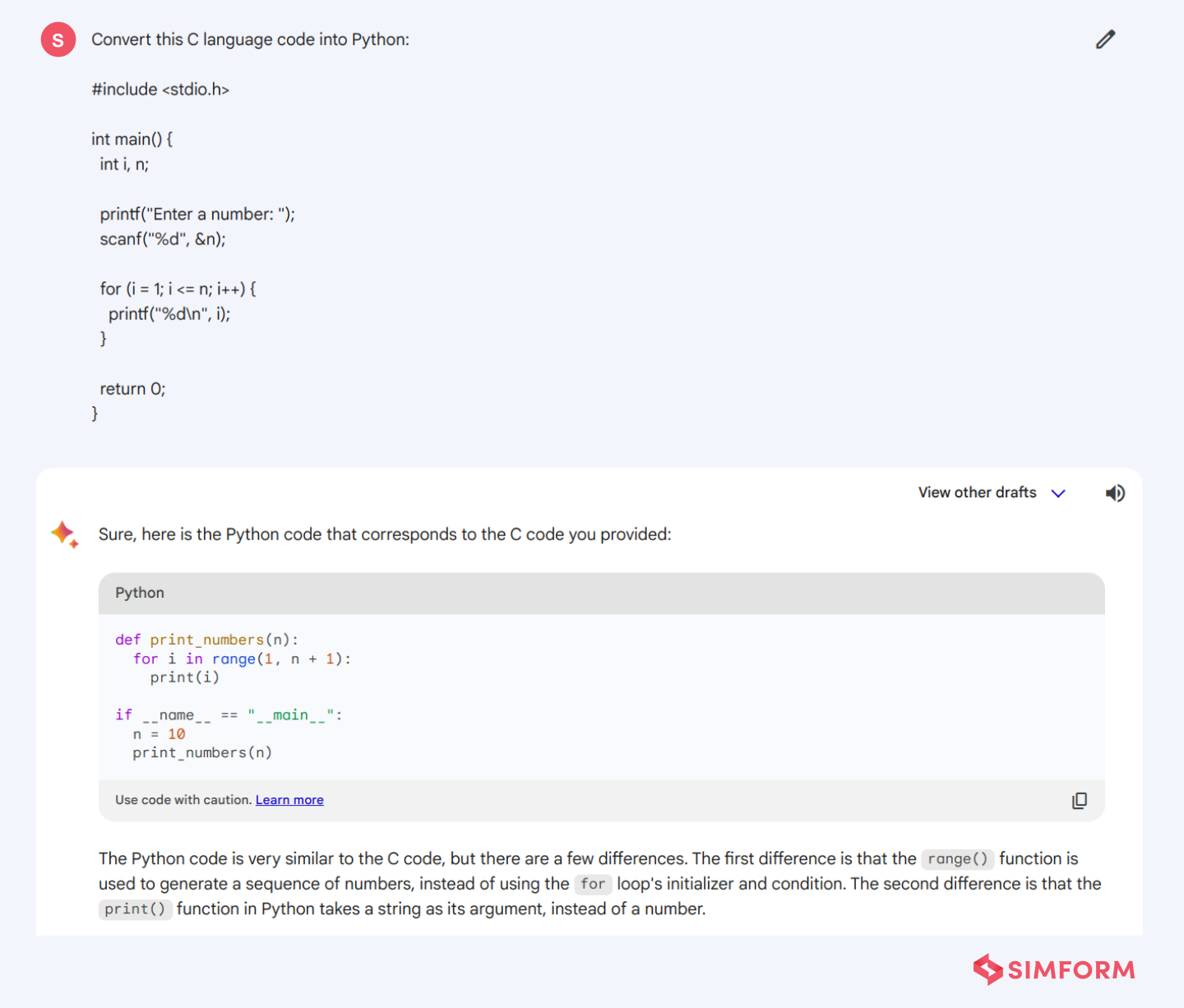

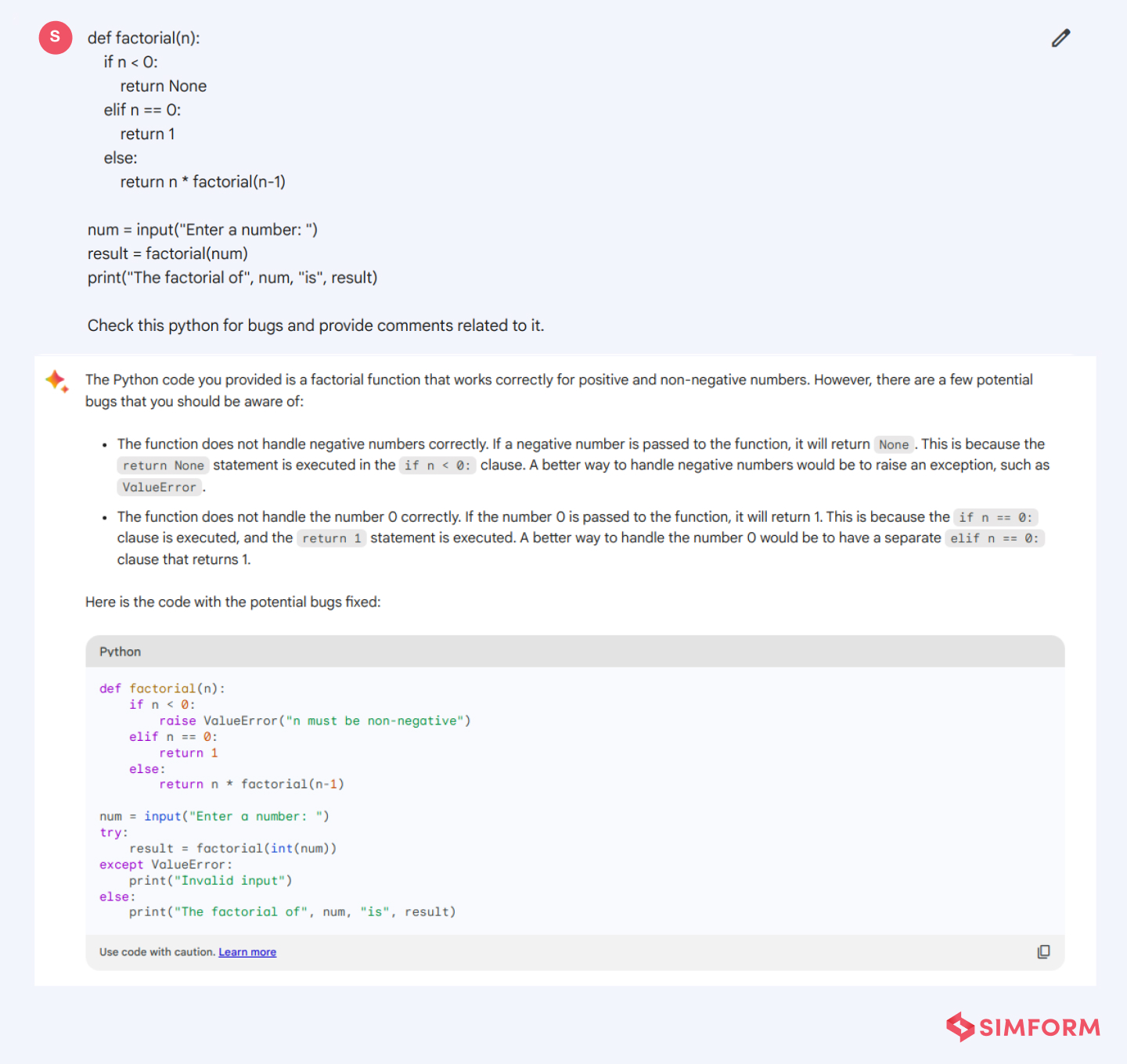

3. Coding assistance

PaLM 2 can also offer assistance with coding tasks. When provided a prompt with partial code or describing a coding problem, the model can generate code snippets that fulfill the desired functionality. This feature is useful for tasks like code completion, generating code from natural language descriptions, and suggesting programming solutions.

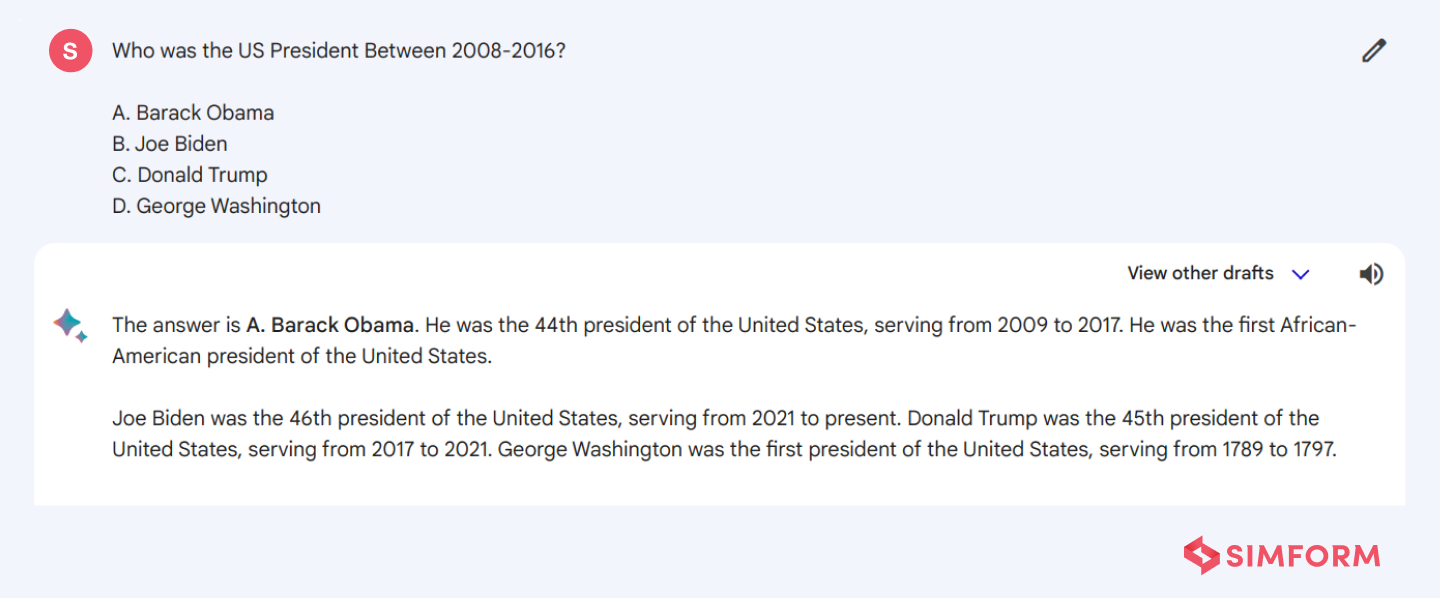

4. Question-answering

PaLM 2 excels at question-answering tasks as well. Given a question and a relevant context, the model can generate text that answers the question based on its learned knowledge and contextual understanding. This feature has applications in information retrieval, providing concise answers to user queries, and assisting in research.

These impressive features of PaLM 2 have made it a versatile model that can be used for various applications. It’s no surprise, then, that PaLM 2 is at the core of many Google products, including Gmail, Google Docs, and Bard.

PaLM 2 vs. PaLM

Although PaLM 2 and PaLM use similar technology developed by the Google AI team, there are some differences between the two large language models based on generative AI. Let’s see how PaLM 2 fares against its previous version PaLM.

| Parameter | PaLM 2 | PaLM |
| --- | --- | --- |
| Data used for training | 3.6 trillion tokens (parameter count not disclosed) | 780 billion tokens and 540 billion parameters |
| Model size | Smaller | Larger |
| Applications | Multiple applications, including more than 25 Google products and features | Limited applications |
| Multilingualism | Support for 100+ languages | Support for fewer languages |
| Logical reasoning capability | Stronger, including with chain-of-thought prompting | Weaker on the same reasoning tests |
| Inference speed | Faster | Slower |
| Efficiency | More efficient | Less efficient |

PaLM 2 vs. GPT-4

OpenAI and Google AI are significant players in generative AI development. OpenAI has developed ChatGPT, whose most advanced version is based on the GPT-4 LLM, while Google AI has developed Google Bard, which is based on the PaLM 2 LLM. Let us compare these two popular LLMs.

| Feature | PaLM 2 | GPT-4 |
| --- | --- | --- |
| Model size | Not officially disclosed | Not officially disclosed |
| Training data | Reportedly 3.6 trillion tokens | Not officially disclosed |
| Performance | Superior at answering questions comprehensively | Capable of providing accurate answers, but not as comprehensively as PaLM 2 |
| Internet access | Used in Bard, which is connected to Google and can draw on current events | Used in Bing AI, which has limited access to current data |
| Image processing | Can describe images from URLs (through Bard) | Accepts image inputs, though this capability is not broadly available |
| Availability | Not available in European countries such as Germany, Austria, Switzerland, and Sweden | Available in most countries |
| Available models | Gecko, Otter, Bison, Unicorn | 8K and 32K context-length variants |
| Pros | Smaller models, enhanced reasoning capabilities, etc. | Large training dataset, good performance, etc. |
| Cons | Not open-source, not as many applications | Paid, not open-source |

How does PaLM 2 work?

PaLM 2 is built on the Transformer architecture and has been trained using the Pathways system. Pathways is a new ML system that enables highly efficient training across multiple TPU Pods. This is important for training large language models like PaLM 2, which require a lot of computational resources.

Component 1: Pathways system

Pathways in PaLM 2 makes it a general-purpose intelligent model capable of managing multiple tasks and using its existing abilities to quickly and efficiently learn new tasks. This means that knowledge gained from training on one task, like understanding how aerial images predict landscape elevation, can help the model learn another task, like predicting floodwater flow through that terrain.

Pathways has made it easier to train the model at massive scale and across many types of tasks. Training at this scale allows PaLM 2 to learn the relationships between a wide variety of words and symbols, which makes it more powerful and versatile than previous LLMs.

Component 2: Transformer architecture

The Transformer architecture is a deep learning architecture that relies on the self-attention mechanism. The attention mechanism helps the model learn the relationships between different tokens in the input sequence, even if they are far apart. This is important for understanding the meaning of natural language text, which often contains long-range dependencies.

PaLM 2 works by first tokenizing the input text into a sequence of words or symbols. Then, it uses the Transformer architecture to learn the relationships between different tokens in the input sequence. This allows PaLM 2 to understand the meaning of the input text and to generate appropriate responses.
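The self-attention step described above can be sketched in plain Python. This is a simplified illustration, not PaLM 2’s implementation: it uses a single attention head, and it uses the token vectors directly as queries, keys, and values instead of passing them through learned projection matrices. The function names are hypothetical.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    highest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x):
    """Scaled dot-product self-attention over a list of token vectors.

    Assumption: x serves as queries, keys, and values at once
    (no learned projections, one head).
    Returns (outputs, weights), where weights[i][j] is how much
    token i attends to token j.
    """
    d = len(x[0])
    weights = []
    for q in x:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in x]
        weights.append(softmax(scores))
    outputs = [
        [sum(w * v[j] for w, v in zip(row, x)) for j in range(d)]
        for row in weights
    ]
    return outputs, weights


# Tokens 0 and 2 have identical vectors; token 1 is unrelated to both.
embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
outputs, weights = self_attention(embeddings)
for row in weights:
    print([round(w, 3) for w in row])
```

In the printed weights, token 0 attends to token 2 as strongly as it attends to itself, even though the two are not adjacent. Position plays no role in the score, which is why attention can capture long-range dependencies.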

PaLM 2 also draws on few-shot learning: given only a handful of examples of a task in the prompt, it can pick up the pattern and provide accurate responses even in niche contexts.
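In practice, few-shot learning usually means placing a few worked examples in the prompt itself. The sketch below only builds such a prompt as a string and does not call any model or API. The `Input:`/`Output:` layout and the `build_few_shot_prompt` helper are assumptions made for illustration, not a format PaLM 2 requires.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Assemble a prompt from an instruction, worked examples, and a new query.

    examples is a list of (input_text, output_text) pairs. The final
    "Output:" line is left empty for the model to complete.
    """
    lines = [instruction, ""]
    for source, target in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    "Classify the sentiment of each review as positive or negative.",
    [
        ("The battery lasts all day.", "positive"),
        ("The screen cracked within a week.", "negative"),
    ],
    "Setup took five minutes and everything worked.",
)
print(prompt)
```

The two labeled reviews are the "shots". Swapping them for examples from a niche domain is often enough to steer the model without any retraining.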

Because of the efficiency in how PaLM 2 works and performs, it has diverse applications in various industries. Let’s explore them briefly in the next section.

Applications of PaLM 2

From medicine to security, market research, HR, and marketing, various industries can benefit immensely from PaLM 2.

1. Med-PaLM 2

Med-PaLM 2 is a medical large language model developed by Google. It is designed to assist healthcare professionals in tasks such as medical text analysis, clinical decision-making, and other medical applications.

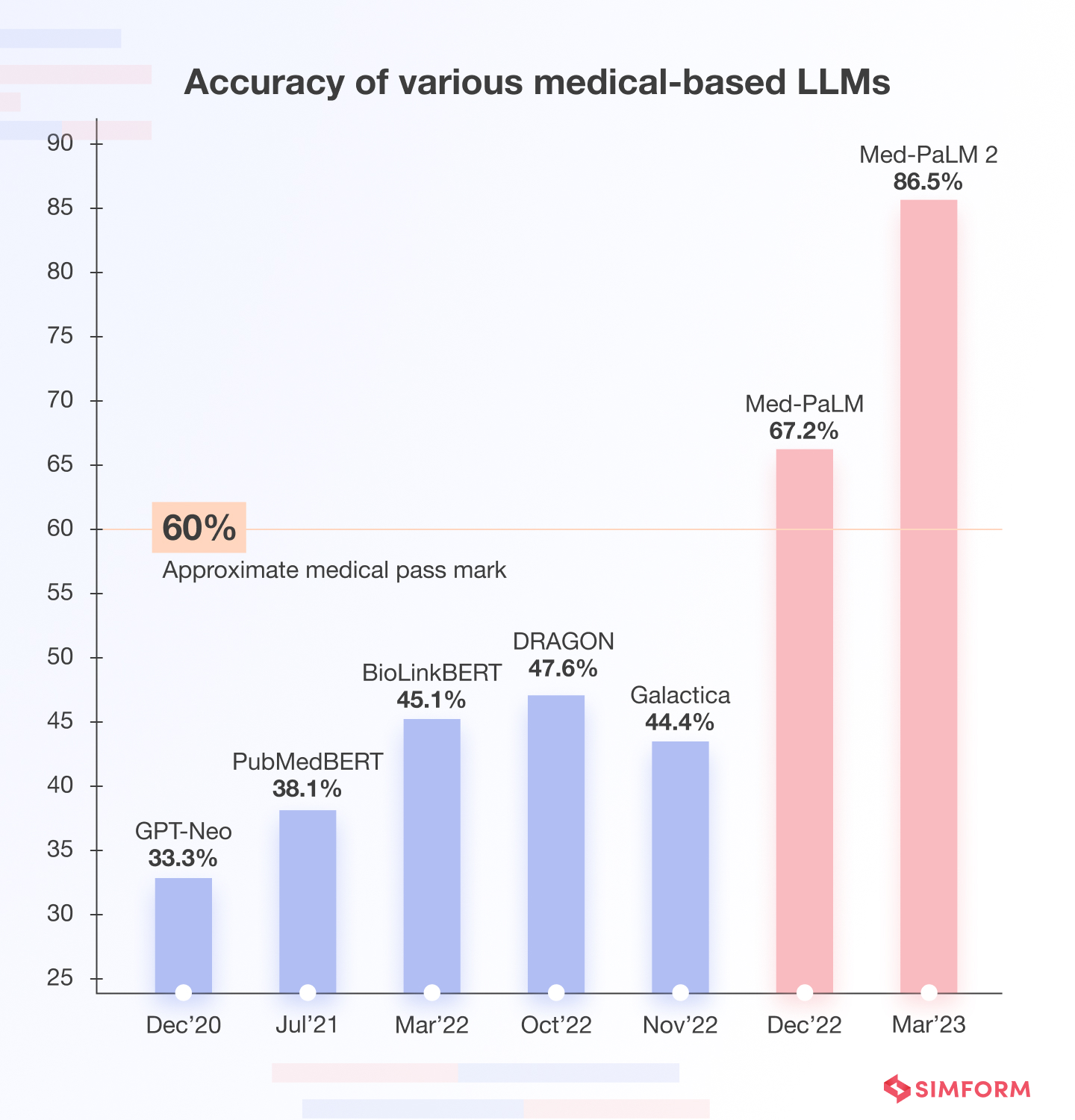

Google released a preprint of the initial version of Med-PaLM in late 2022 and published it in Nature in July 2023. This AI system became the first to surpass the pass mark on US Medical Licensing Examination (USMLE) style questions. Panels of physicians and users deemed Med-PaLM’s long-form answers to consumer health questions accurate and helpful.

Real-world use cases of Med-PaLM 2

- Medical research: It can assist researchers in analyzing vast amounts of medical literature, generating hypotheses, and providing insights for drug discovery, disease analysis, and treatment development.

- Medical education: As a powerful educational tool, Med-PaLM 2 can support medical students by answering questions, providing explanations, and offering interactive learning experiences.

- Clinical care: It can aid healthcare professionals in diagnosing and treating patients by offering evidence-based recommendations, keeping up-to-date with the latest medical advancements, and assisting in decision-making processes.

- Public health: The tool can contribute to public health initiatives by analyzing health trends, predicting disease outbreaks, and suggesting preventive measures and interventions.

- Patient interaction: It can enhance patient engagement by providing personalized health information, answering questions about conditions and medications, and offering lifestyle recommendations.

- Electronic Health Records (EHR): It can streamline EHR documentation by generating accurate and detailed medical notes, saving time for healthcare providers.

- Telemedicine: The tool can be integrated into telemedicine platforms, providing real-time support to remote healthcare consultations.

- Medical image analysis: It can assist in interpreting medical images, aiding radiologists and other specialists in detecting abnormalities and making diagnoses.

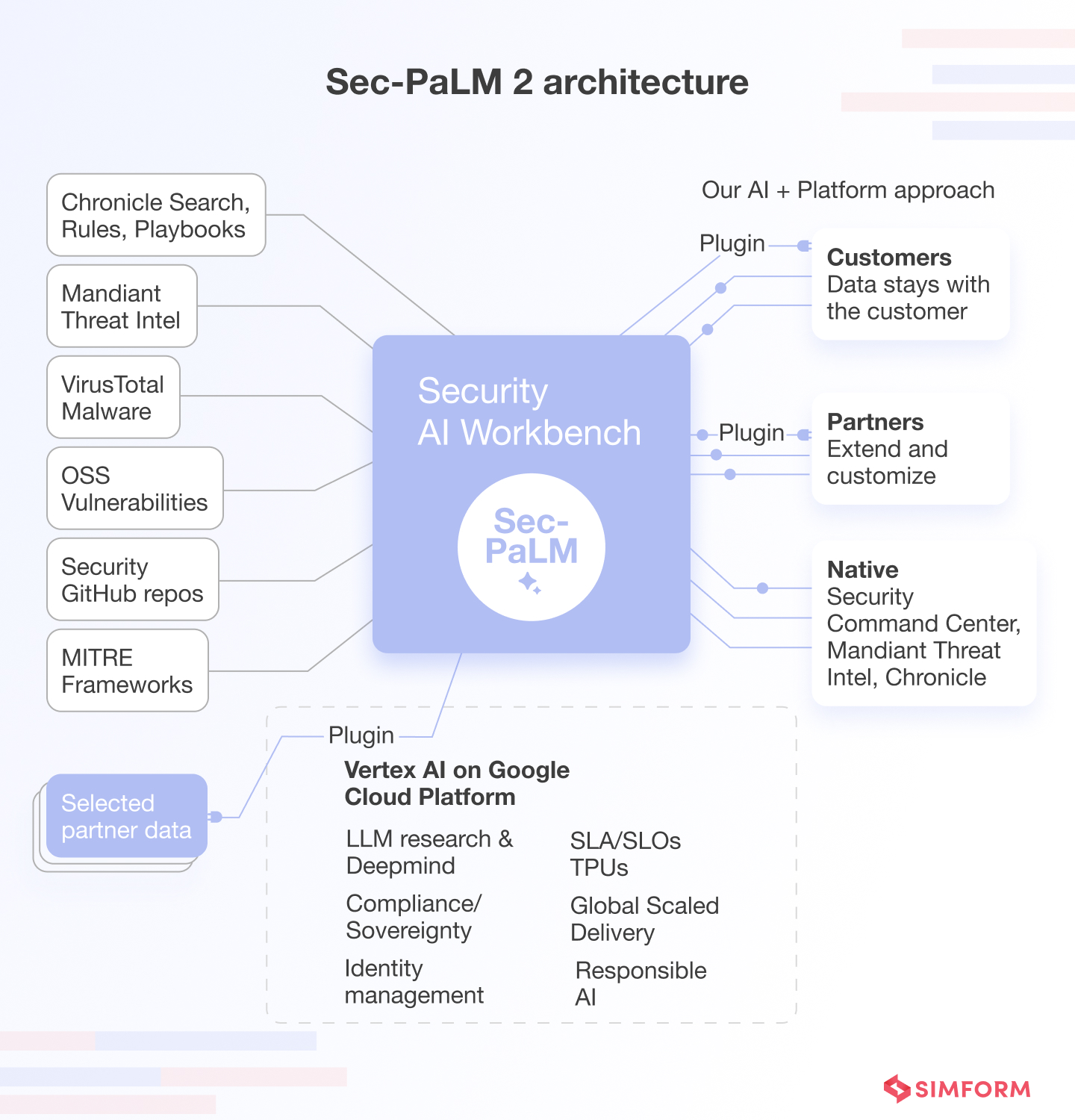

2. Sec-PaLM 2

Sec-PaLM 2 is a variant of PaLM 2 specialized for security use cases. You can access Sec-PaLM 2 through your Google Cloud account.

Sec-PaLM 2 incorporates additional security measures to safeguard sensitive data and prevent potential privacy breaches. It relies on differential privacy techniques, which inject controlled noise into the training process. This noise limits how much any individual data point can influence the model, which makes it harder to extract that data point from the model’s responses and helps protect user privacy.

Differential privacy also reduces the model’s tendency to memorize or overfit specific data points during training. This mitigates the risk of attacks that target individual data records.
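To show what "injecting controlled noise into the training process" can look like, here is a generic sketch in the style of differentially private gradient descent: each example’s gradient is clipped to a maximum norm, the clipped gradients are summed, and Gaussian noise scaled to that norm is added before averaging. This is a textbook-style illustration, not Sec-PaLM 2’s training code. The function names, the toy gradients, and the parameter values are all assumptions.

```python
import math
import random


def clip(gradient, max_norm):
    """Scale a gradient down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in gradient))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in gradient]


def private_mean_gradient(per_example_grads, max_norm, noise_multiplier, rng):
    """Average per-example gradients with clipping and Gaussian noise.

    Clipping bounds how much any single example can move the model;
    noise with standard deviation noise_multiplier * max_norm then
    masks whatever individual contribution remains.
    """
    clipped = [clip(g, max_norm) for g in per_example_grads]
    dim = len(clipped[0])
    summed = [sum(g[j] for g in clipped) for j in range(dim)]
    noisy = [s + rng.gauss(0.0, noise_multiplier * max_norm) for s in summed]
    return [v / len(clipped) for v in noisy]


# Toy gradients: the first example is an outlier with a large norm.
grads = [[3.0, 4.0], [0.3, 0.4]]
rng = random.Random(0)  # seeded only to make the sketch repeatable
print(private_mean_gradient(grads, max_norm=1.0, noise_multiplier=1.1, rng=rng))
```

A larger `noise_multiplier` gives stronger privacy at the cost of noisier updates, so the value is a trade-off rather than a fixed recommendation.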

Real-world use cases of Sec-PaLM 2

- Malware analysis and threat contextualization: Sec-PaLM 2 can analyze and categorize malware, providing context to understand its behavior, origins, and potential impact on systems.

- Threat intelligence: It generates actionable threat intelligence reports by processing vast amounts of security-related data. This helps security teams identify emerging threats and vulnerabilities.

- Security research: Researchers can leverage Sec-PaLM 2 to explore complex cybersecurity topics, conduct data-driven analyses, and uncover new insights in the volatile landscape of digital threats.

- Security operations: The tool can streamline security operations by automating tasks such as log analysis, anomaly detection, and incident response.

- Anomaly detection: Sec-PaLM 2’s natural language processing capabilities enable it to identify irregular patterns and potential security breaches, enabling early detection and mitigation of cyber-attacks.

- Phishing detection: It can analyze suspicious emails and messages to help organizations identify phishing attempts and thwart social engineering attacks.

- Threat hunting: With its ability to process vast datasets, Sec-PaLM 2 can aid in proactively searching for signs of potential threats within a network.

- User behavior analysis: By understanding typical user behavior, it can recognize deviations that might indicate unauthorized access or insider threats.

- Vulnerability assessment: Sec-PaLM 2 can assist in identifying potential weaknesses in systems and applications, facilitating proactive security measures.

- Security awareness training: It can contribute to creating customized security awareness programs and educate users about the latest threats and best practices for safeguarding data.

3. Market analysis

PaLM 2’s capability to analyze and comprehend vast amounts of textual data helps IT teams stay informed about market trends, consumer preferences, and competitor strategies. It can swiftly process news articles, social media conversations, and industry reports to extract actionable insights.

4. Customer support and assistance

With PaLM 2, companies can take customer support to the next level by deploying advanced chatbots and virtual assistants.

These AI-powered assistants can engage in natural language conversations to provide instant and accurate responses to customer queries.

5. Content generation and curation

PaLM 2’s prowess in generating coherent and contextually relevant content proves invaluable for businesses creating marketing assets like articles, blog posts, and product descriptions. It can automate processes involved in content creation and also serve as a writing assistant.

6. HR recruitment and screening

PaLM 2 simplifies the laborious task of resume screening by swiftly parsing through CVs and cover letters. With language understanding capabilities, it can identify relevant skills, experiences, and qualifications to help HR teams shortlist candidates more efficiently. If used smartly, PaLM 2 expedites recruitment and ensures a higher likelihood of selecting candidates who align with the organization’s needs.

7. Legal document analysis

PaLM 2 helps with legal research by analyzing and summarizing intricate legal texts, contracts, and case law. Legal teams can quickly extract relevant information, identify precedents, and formulate more robust strategies.

Limitations of PaLM 2

While PaLM 2 represents a significant advancement over prior models related to language, it still exhibits certain constraints worth examining.

- Limited data for training: Like any AI model, PaLM 2 requires vast amounts of data to be trained effectively. It might still be held back by the scarcity of diverse, high-quality data, which affects its generalization and performance in some domains.

- Struggles with out-of-distribution data: PaLM 2 might encounter difficulties in understanding and generating appropriate responses when faced with data that significantly differs from its training set. It may not adapt well to unseen or rare scenarios, leading to potential inaccuracies.

- Prone to biases in training data: Like many language models, PaLM 2 could inherit biases from its training data. As a result, it might generate responses that inadvertently reflect societal or cultural biases, which can be problematic.

- Inference latency: Although PaLM 2 is faster than its predecessor, its larger variants may still have inference times that make real-time applications challenging. The computational requirements might hinder its usability in those settings.

- Limited explainability: Because PaLM 2 is a deep learning model, its decision-making process can be challenging to interpret and explain. This lack of transparency might raise concerns about accountability and trust.

- Resource-intensive: Training and maintaining PaLM 2 require substantial computing resources, making it less accessible for researchers or developers with limited computational capabilities or budget constraints.

- Lack of domain-specific knowledge: PaLM 2 may lack an in-depth understanding of specific domains or industries, as its training data might not cover all possible knowledge domains.

Despite these limitations, PaLM 2 can offer valuable benefits to businesses if used effectively.

How to use Google PaLM 2 effectively?

Here are a few techniques to make the most of this versatile large language model:

- Refine by option: Enter your search query in the search bar. Once you get the initial results, narrow your search using the “Refine by” option. It allows you to filter results based on specific criteria.

- Filter option: Use the “Filter” option to customize your search results further. You can filter by date, location, relevance, and other parameters to get more precise information.

- Sort by option: To organize your results, use the “Sort by” option. Depending on your preferences, you can sort results by date, relevance, or popularity.

- Related topics feature: This feature displays related topics to your search query, helping you explore additional areas of interest and gather comprehensive information.

- Do more feature: Use the “Do more” feature for in-depth actions. It may include translating content, accessing additional resources, or performing advanced searches.

- Share feature: Easily share relevant information with others using the “Share” feature. You can directly share the link to the search results or specific content through various communication channels.

- Save feature: Keep track of valuable information using the “Save” feature. You can save search results, individual pages, or articles for future reference.

- Set reminder feature: To get timely updates on a specific topic, utilize the “Set reminder” feature. This option allows you to set reminders for yourself and receive notifications when new content related to your search query becomes available.

- Help feature: If you encounter difficulties or have questions about using this AI model, access the “Help” feature. It provides you with relevant documentation, FAQs, or access to support resources.

- Research feature: For academic or extensive research purposes, use the “Research” feature. This option may include integrations with citation managers or access to scholarly databases.

The role of PaLM 2 in the advancement of AI

PaLM 2 is a powerful language model that comprehends and generates human-like text well enough to significantly improve natural language understanding and generation tasks for businesses. With its enhanced capabilities, PaLM 2 can power various AI applications, such as chatbots, language translation, content generation, and sentiment analysis.

In the future, PaLM 2 is likely to reshape AI by enabling more sophisticated and context-aware interactions between machines and humans. Its ability to grasp complex nuances in language will drive advancements in AI-driven decision-making and automation.

PaLM 2 is not alone: many new-age large language models are rapidly and significantly shaping the AI landscape. If you want to understand the workings and applications of LLMs in detail, check out our blog – How Do Large Language Models Work?