What is mobile application testing?

Mobile application testing is the process of examining a mobile app’s functionality, usability, stability, performance, and security. Testing surfaces bugs and errors so that developers can fix them before the final launch and make the app more robust. You can test manually or use mobile app testing automation tools.

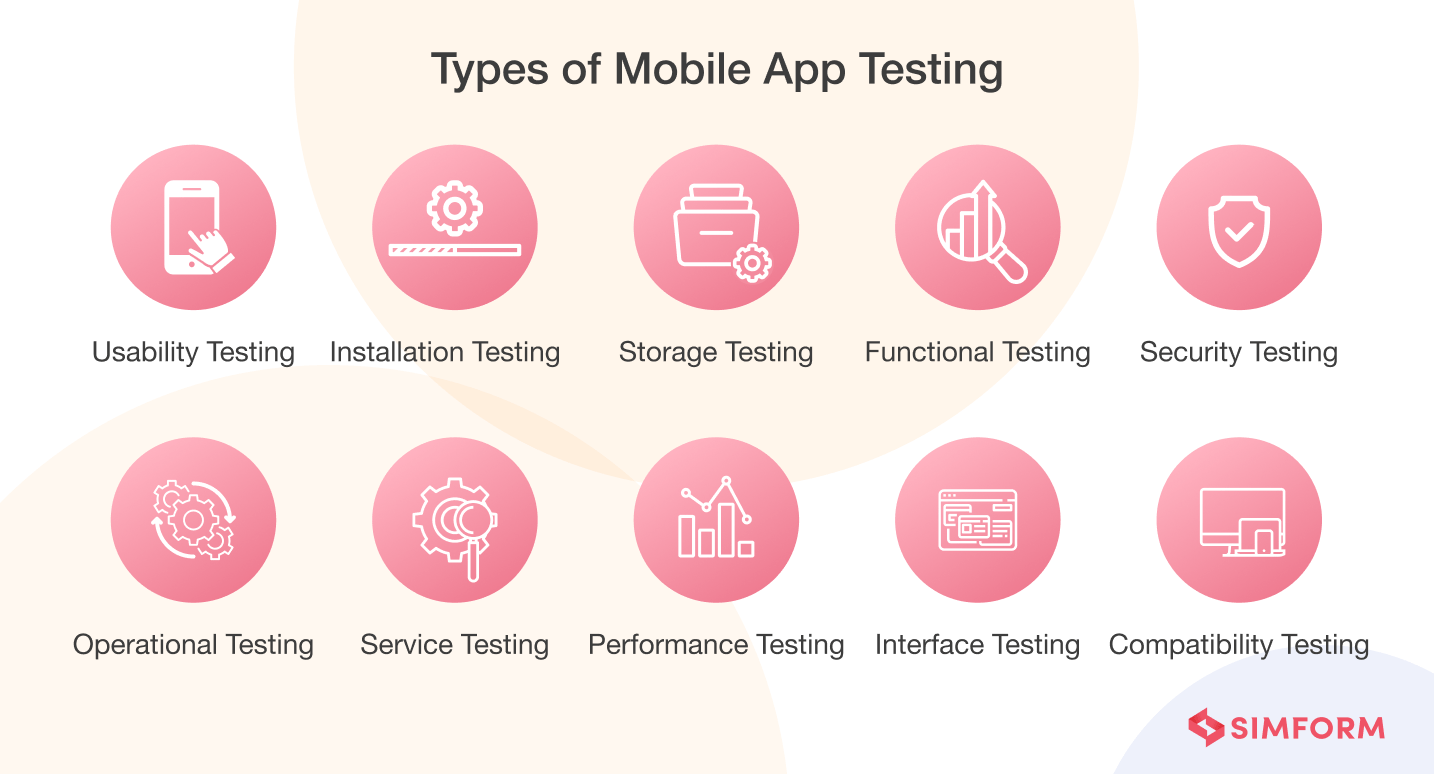

Types of mobile app testing

1. Usability testing: It checks how user-friendly your mobile app is in terms of intuitiveness, navigation, and ease of use. Finding bugs that hurt the customer experience, for example, helps you give end-users a satisfactory experience.

Since its launch in 2013, Canva has become synonymous with online design, with 35 million active users in 190 countries who have created over a billion images. This widespread adoption posed the challenge of customizing the user experience for each geographical region. Canva partnered with Global App Testing, which focused on localization testing and identified the elements needed to localize the user experience. Canva engineers acted on those suggestions, which helped them serve their non-English-speaking audience, which had grown to 60%.

2. Compatibility testing: This non-functional test verifies whether your application runs smoothly across operating systems, browsers, mobile devices, screen resolutions, network environments, and hardware specifications.

3. Performance testing: Whether the app is native or hybrid, this test ensures it performs at its peak under various conditions, such as different loads, network connectivity (3G, 4G, WiFi), document sharing, and battery consumption.

4. Interface testing: It involves all the aspects related to the user interface of an application, such as menu options, buttons, bookmarks, history, settings, and navigation flow. You can also test connectivity between two operating systems with the help of interface testing.

5. Operational testing: It involves checking the operational readiness of a product, service, or system before it goes into production. Checking backups, assessing the recovery time in case of data loss, and verifying disaster recovery mechanisms all come under operational testing.

6. Service testing: It verifies that your app’s services work correctly both online and offline, so it belongs on your mobile app testing checklist. It also checks the functionality, reliability, performance, and security of the APIs the app depends on.

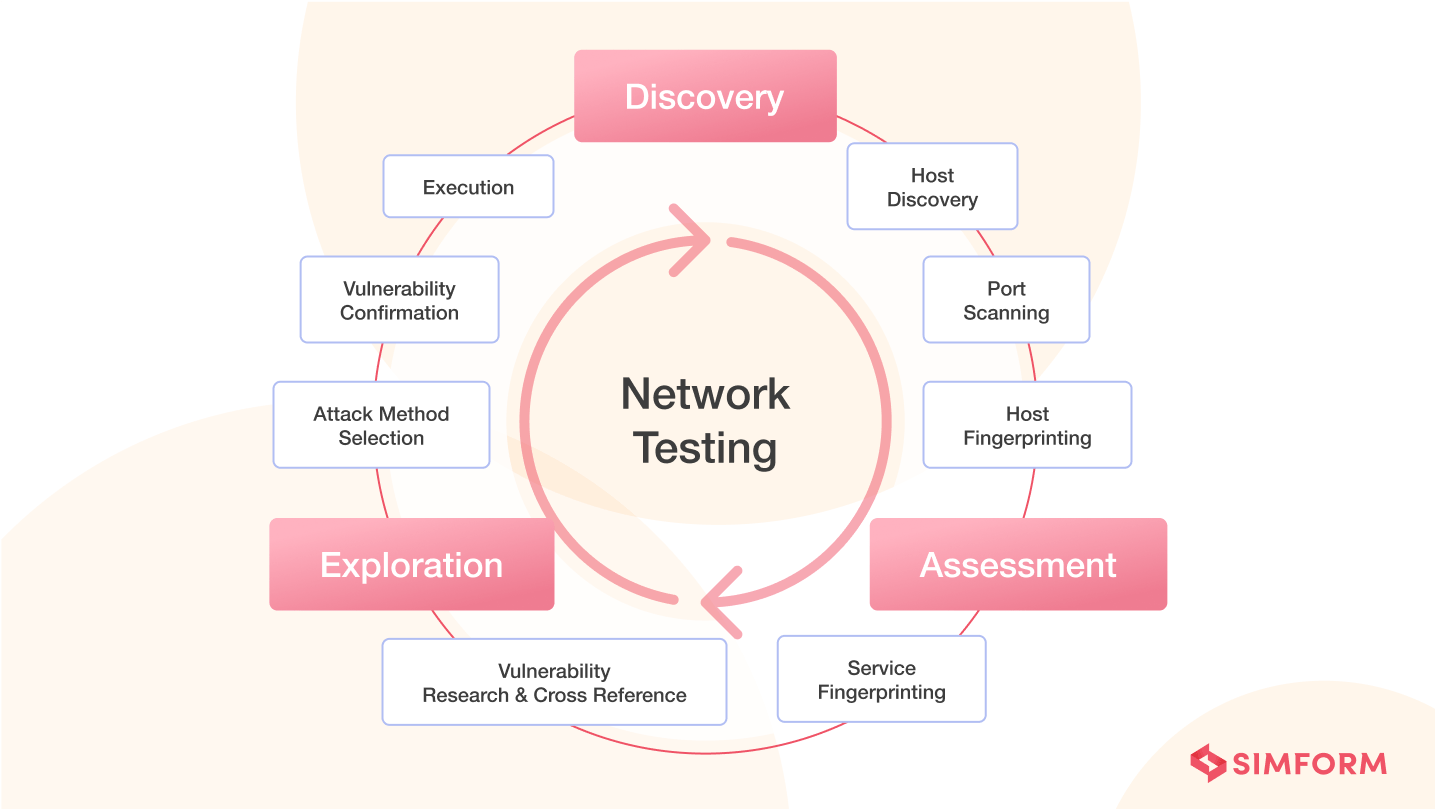

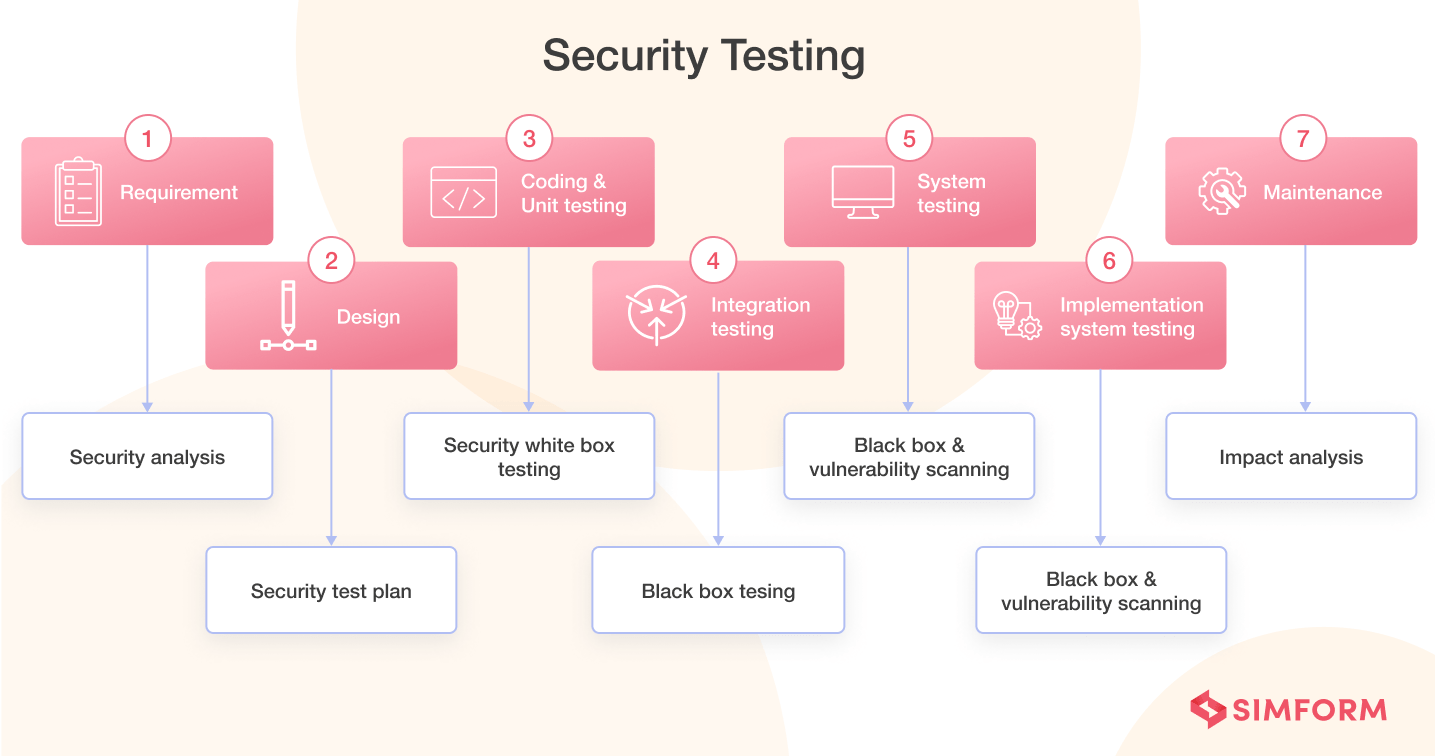

7. Security testing: It checks whether your application’s data stays secure under various device permissions. Mobile app vulnerability testing is also part of security testing, as it uncovers the vulnerabilities, threats, and risks associated with a mobile app.

8. Functional testing: Mobile app functional testing verifies whether all the mobile application functionalities get executed as needed. The principal purpose of functional testing is to validate the mobile application against the initial functionalities or requirements laid out.

9. Installation testing: It verifies whether the application installs and uninstalls properly, and whether app updates are applied correctly. Mobile app testing companies can also verify whether the installed app has the features listed in its documentation.

10. Storage testing: It checks whether the application handles storage-related functionality well, which includes storing and retrieving data and adding, updating, or deleting data on the device’s storage. It also checks the application’s behavior when storage is low or full.
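
As a rough illustration of the storage checks described in item 10, the sketch below uses an in-memory stand-in for device storage. `FakeStorage` and `save_draft` are hypothetical names invented for this example, not part of any real mobile SDK; a real test would run against the device’s file system or a test double supplied by your framework.

```python
class FakeStorage:
    """In-memory stand-in for device storage with a fixed capacity (hypothetical)."""

    def __init__(self, capacity_bytes):
        self.capacity_bytes = capacity_bytes
        self.files = {}

    def used_bytes(self):
        return sum(len(data) for data in self.files.values())

    def write(self, name, data):
        # Overwriting a file frees its old size before the new size is counted.
        projected = self.used_bytes() - len(self.files.get(name, b"")) + len(data)
        if projected > self.capacity_bytes:
            raise OSError("no space left on device")
        self.files[name] = data

    def read(self, name):
        return self.files[name]

    def delete(self, name):
        del self.files[name]


def save_draft(storage, name, data):
    """Hypothetical app function: True on success, False when storage is full."""
    try:
        storage.write(name, data)
        return True
    except OSError:
        return False


storage = FakeStorage(capacity_bytes=10)
assert save_draft(storage, "a.txt", b"12345")        # store
assert storage.read("a.txt") == b"12345"             # retrieve
assert save_draft(storage, "a.txt", b"1234567890")   # update that fills storage
assert not save_draft(storage, "b.txt", b"x")        # full: fails gracefully
assert storage.read("a.txt") == b"1234567890"        # existing data is intact
storage.delete("a.txt")
assert save_draft(storage, "b.txt", b"x")            # space was reclaimed
```

The point of the last few assertions is that a full disk should neither crash the app nor corrupt data that was already saved.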

These are some of the most common types of testing that mobile apps need. While performing any of them, you’re likely to encounter challenges, which the next section explores.

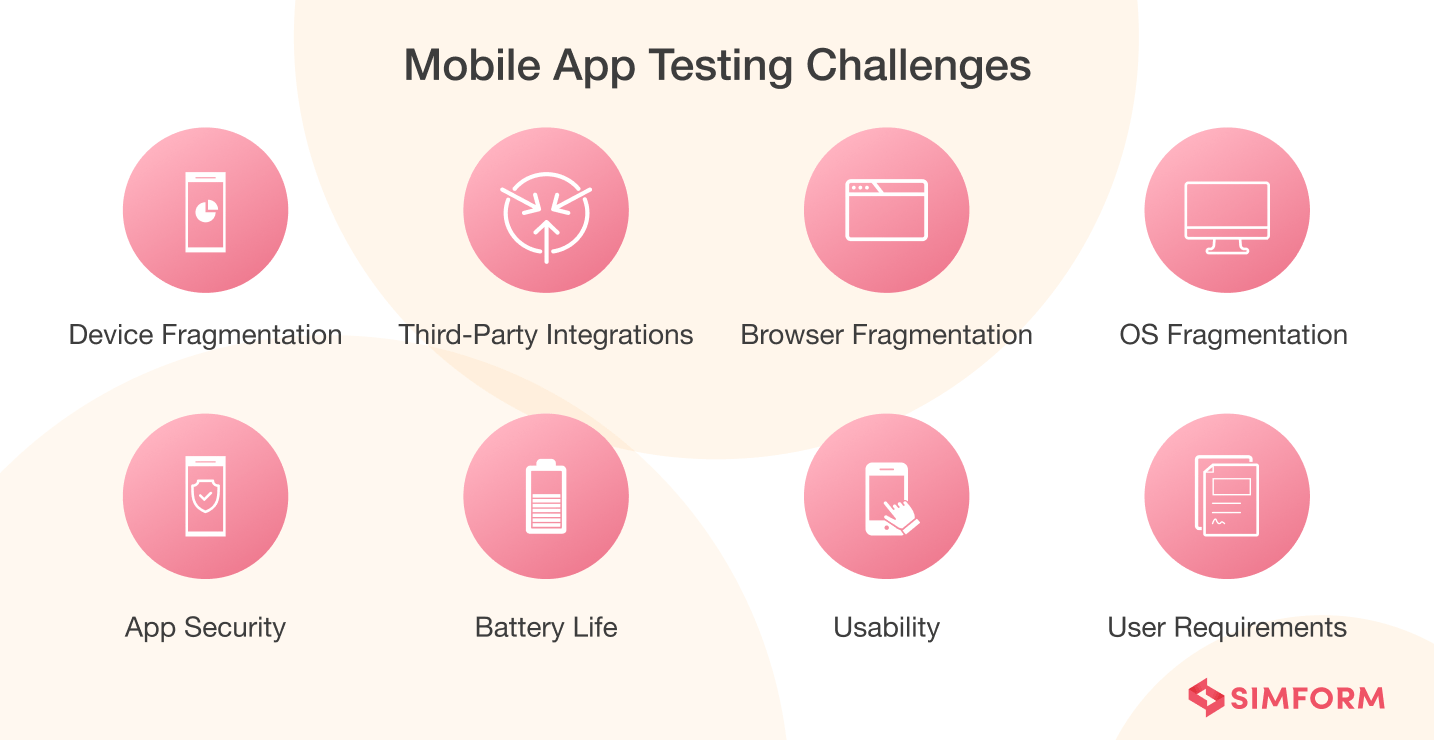

Mobile app testing challenges

1. Device fragmentation

Users will run your application on devices with different screen sizes, carrier settings, operating systems, and form factors. A mobile app test strategy that ignores device fragmentation will therefore run into significant obstacles.

Here are some solutions that you can implement to overcome this issue:

- Test your app on emulators and simulators

- On a limited budget, buy a small number of devices and test your app on them rigorously

- On a larger budget, create an in-house lab that includes a range of devices
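
When the budget only allows a handful of devices, one way to choose them is to pick the ones that together cover the most distinct traits (OS version, screen size, carrier setup). The sketch below uses a simple greedy heuristic for that; it is an illustration rather than an optimal algorithm, and the device names and traits are made up.

```python
def pick_devices(devices, max_devices):
    """Greedily pick devices that add the most not-yet-covered traits."""
    covered = set()
    chosen = []
    remaining = dict(devices)
    while remaining and len(chosen) < max_devices:
        # Device contributing the most new traits; ties go to the first name alphabetically.
        name = max(sorted(remaining), key=lambda n: len(remaining[n] - covered))
        if not remaining[name] - covered:
            break  # no remaining device adds anything new
        covered |= remaining.pop(name)
        chosen.append(name)
    return chosen, covered


# Hypothetical device pool: name -> set of traits it lets you test.
devices = {
    "phone_a": {"android-13", "6.1in", "carrier-x"},
    "phone_b": {"android-12", "6.7in", "carrier-y"},
    "phone_c": {"ios-16", "6.1in", "carrier-x"},
    "tablet_d": {"ios-16", "10.9in", "wifi-only"},
}

chosen, covered = pick_devices(devices, max_devices=3)
print(chosen)        # ['phone_a', 'phone_b', 'tablet_d']
print(len(covered))  # 9
```

Traits that no purchased device covers are good candidates for emulators, simulators, or a cloud device service.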

2. Third-party integrations

Many teams integrate third-party extensions without verifying their impact on the current app environment. Instead, you should always test how third-party integrations behave, because they’re not part of the source code you have written. They also bring their own set of dependencies, which you need to manage and check against your current ecosystem.

3. Browser fragmentation

Testing your application on various browsers is critical, especially when your mobile app is a progressive web app that runs in the browser. You should test it on the major mobile browsers, such as Google Chrome, Mozilla Firefox, Opera, and Safari.

4. OS fragmentation

Different mobile phones run different operating systems and versions. If you haven’t tested your app on them, it could cause problems at release time. In addition, Android and iOS, the two major mobile platforms, had 13 and 16 versions, respectively, at the time of writing. Thus, covering every base in your mobile app testing strategy could prove challenging.

5. App security

During the initial phase of an app release, the QA team should conduct in-depth security testing of the application, as security threats have become more dangerous. The nature of your app, OS features, phone features, and so on play a vital role in forming an app security test plan.

Here are some ways to mitigate challenges caused during app security testing:

- Conduct threat analysis and modeling

- Perform vulnerability analysis

- Review the top security threats at the time of testing

- Watch out for security threats from hackers

- Watch out for security threats from rooted and jailbroken phones

6. Battery life

Battery usage has also increased significantly, and optimizing battery consumption for demanding apps is a major obstacle for QA teams. Most applications today are complex and consume a significant share of battery life. Testing battery consumption across a range of usage scenarios is the most direct way to deal with this, because users are more likely to uninstall an app that consumes too much power.
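
To make scenario-based battery testing concrete, the sketch below converts battery readings taken at the start and end of a test session into a drain rate and flags scenarios that exceed a budget. The scenario names, readings, and the 25 %/hour budget are made-up values for illustration; real readings would come from your device or profiling tool.

```python
def drain_per_hour(start_pct, end_pct, minutes):
    """Battery percentage points consumed per hour over a test session."""
    if minutes <= 0:
        raise ValueError("minutes must be positive")
    return (start_pct - end_pct) * 60 / minutes


def over_budget(sessions, budget_pct_per_hour):
    """Return the scenarios whose drain rate exceeds the budget, sorted by name."""
    return sorted(
        name
        for name, (start, end, minutes) in sessions.items()
        if drain_per_hour(start, end, minutes) > budget_pct_per_hour
    )


# Illustrative readings: scenario -> (start %, end %, session length in minutes)
sessions = {
    "idle_background": (100, 99, 30),  # 2 %/hour
    "video_playback": (90, 78, 30),    # 24 %/hour
    "gps_navigation": (80, 70, 20),    # 30 %/hour
}

print(over_budget(sessions, budget_pct_per_hour=25))  # ['gps_navigation']
```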

7. Usability

The mobile app test plan often involves testing functionality but neglects usability or exploratory testing. You can provide hundreds of functionalities in your app, but if the user interface and user experience are poor, customers won’t like it. Striking a balance between functionality and usability is challenging for developers and testers.

CrayPay is a mobile app that aims to ease payment processing in retail stores. Because the application was related to online shopping, a lot of visitors were expected, and the challenge for us was to retain a significant portion of them. Our engineering team configured Firebase A/B testing to understand user behavior. Based on this usability testing, we tweaked the app’s user interface, which helped CrayPay increase its user engagement and retention ratio.

8. Changing user requirements

Users’ needs keep changing with time, and developers must build new features to meet them. Unfortunately, these new features also bring new bugs or errors. So, the challenge for the QA team is to have first-hand knowledge of each update and carry out holistic mobile app regression testing.
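
One way to keep regression testing manageable as features change is to record which features each test exercises and re-run the tests touched by an update. The sketch below shows that idea with a hand-written mapping; the test names and feature labels are hypothetical, and in practice the mapping might come from tags in your test framework.

```python
def select_regression_tests(test_coverage, changed_features):
    """Return the tests that exercise at least one changed feature, sorted by name."""
    changed = set(changed_features)
    return sorted(
        test for test, features in test_coverage.items() if changed & set(features)
    )


# Hypothetical mapping: test name -> features it exercises.
test_coverage = {
    "test_login": ["auth"],
    "test_checkout": ["cart", "payments"],
    "test_profile_photo": ["profile", "camera"],
    "test_push_opt_in": ["notifications"],
}

# An update that touched payments and the camera flow.
print(select_regression_tests(test_coverage, ["payments", "camera"]))
# ['test_checkout', 'test_profile_photo']
```

A full regression run is still worthwhile before a release; this kind of selection mainly speeds up the feedback loop between releases.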

These are some of the significant challenges involved in mobile app testing. However, with challenges come solutions, and in the next section, we will explore some of the best practices for mobile app testing that will help you overcome them.

Mobile app testing best practices

1. Define the scope of testing: Some testers focus on what they can see, while others focus on what happens in the background. Either way, decide up front which OS versions and features are in scope. For example, Android versions below 6.0 don’t let users change camera permissions at runtime, and iOS versions below 10.0 don’t support CallKit.

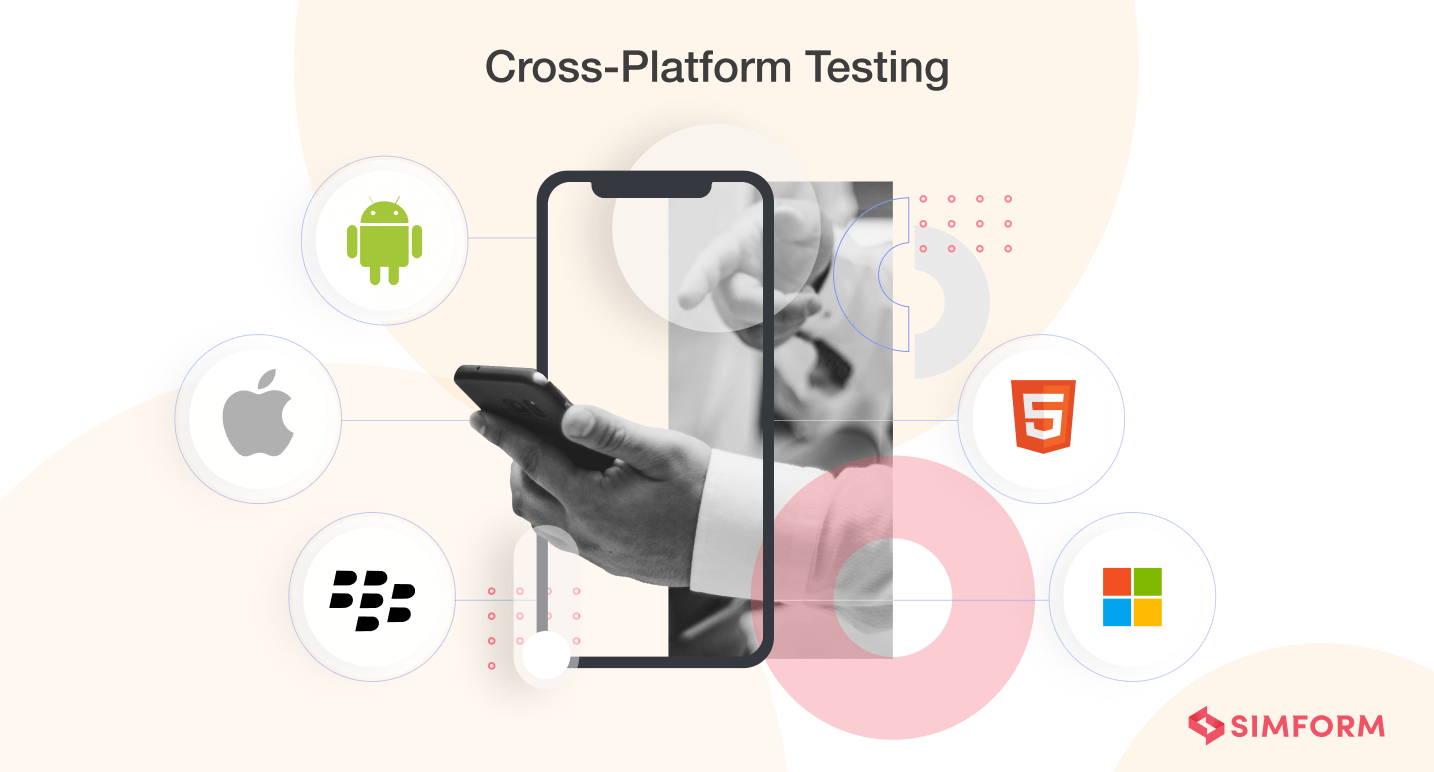

2. Conduct cross-platform testing: This test helps you get rid of compatibility issues by testing on multiple browsers, operating systems, iOS and Android devices, and environments.

3. Carry out app permission testing: Testers often forget to test combinations of permissions, which harms the user experience. For example, consider a chat app that lets users share images and audio files. If the storage permission is set to NO, the user may be unable to open the camera. Thus, testing all permission combinations is vital.

4. Conduct connectivity-related testing: App performance is highly dependent on the network connectivity. Therefore it is recommended to conduct mobile app network testing before launching the app in production. You can use mobile app testing automation tools for network simulation.

5. Deal with fragmentation: Mobile app testing has to deal with several kinds of fragmentation, such as device, OS, and browser fragmentation. So, as a QA lead, you should develop a mobile app testing platform that covers all these aspects by:

- Running apps on various physical device configurations and OS.

- Conducting manual and automated tests across emulators.

- Providing access to cloud-based platforms to carry out testing on a real device.

6. Carry out test automation: Test automation can help teams reduce the load on testers and increase efficiency. Although you can’t automate everything with test scripts, you can reduce a fair share of manual testing.

ABN AMRO is a renowned bank that created ten solutions for a varied customer base. However, adding new features to them while ensuring privacy, security, and accessibility proved to be a pain point. So they partnered with Kobiton and used HP Unified Functional Testing (UFT) for test automation, which helped them create end-to-end testing from a single integration point. It also made the deployment and release process easier and increased efficiency, simplicity, and collaboration.
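
Returning to permission testing (practice 3 above), the combinations are easy to miss by hand but simple to enumerate. The sketch below lists every granted/denied combination for three permissions and checks a feature rule against each one. The permission names and the `can_share_photo` rule are assumptions made for illustration, not taken from a real app.

```python
from itertools import product

PERMISSIONS = ("camera", "microphone", "storage")


def permission_combinations(permissions=PERMISSIONS):
    """Yield every granted/denied combination as a dict of permission -> bool."""
    for flags in product((True, False), repeat=len(permissions)):
        yield dict(zip(permissions, flags))


def can_share_photo(granted):
    """Hypothetical rule: taking and sharing a photo needs camera and storage."""
    return granted["camera"] and granted["storage"]


combos = list(permission_combinations())
allowed = [c for c in combos if can_share_photo(c)]

print(len(combos))   # 8
print(len(allowed))  # 2
```

Each of the eight dictionaries can then drive one test run, so the app’s behavior is checked with the feature both available and blocked.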

Mobile app testing process

In this day and age of fierce competition, you can’t just decide to test a mobile app and go with the flow. You need a test management process that puts you ahead of the competition. So, defining a roadmap for testing is vital. A typical mobile app testing process is as below:

Step 1: Gathering test cases

Before starting the testing process, you need to list the test cases you want to run. Briefly outline each test case you need to explore, the aim of running that scenario, and the result you expect after testing. This will help you define a roadmap.
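
A lightweight way to capture that outline is a small record per test case holding the scenario, its aim, and the expected result. The sketch below is one possible shape; the `PlannedCase` class and the sample cases are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PlannedCase:
    """One entry in the test outline (hypothetical structure)."""
    case_id: str
    scenario: str
    aim: str
    expected_result: str


def outline(cases):
    """Render the planned cases as one summary line each."""
    return [f"{c.case_id}: {c.scenario} -> expect {c.expected_result}" for c in cases]


cases = [
    PlannedCase("TC-01", "log in with valid credentials",
                "verify the main sign-in path", "home screen is shown"),
    PlannedCase("TC-02", "log in while offline",
                "verify error handling without a network", "offline message is shown"),
]

for line in outline(cases):
    print(line)
```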

Step 2: Deciding manual vs. automated testing

Once you write tests, the next step is to decide whether to run them manually or take the assistance of test automation services. Here are some suggestions to determine what is best for you.

Choose automation testing when:

- You need to run a test case frequently

- The test case has a predictable outcome

- You want to test for device fragmentation

In general, small test cases are the most efficient to automate. Manual testing is a better fit when you are testing various systems back to back.
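
The three criteria above can be turned into a simple scoring helper to make the decision repeatable across a large list of test cases. This is only a sketch: the function names are invented, and the threshold of two criteria is an assumption that each team should tune for itself.

```python
def automation_score(runs_frequently, predictable_outcome, covers_many_devices):
    """Count how many of the three criteria a test case meets."""
    return sum([runs_frequently, predictable_outcome, covers_many_devices])


def suggest_approach(runs_frequently, predictable_outcome, covers_many_devices,
                     threshold=2):
    """Suggest 'automated' when the score reaches the (assumed) threshold."""
    score = automation_score(runs_frequently, predictable_outcome, covers_many_devices)
    return "automated" if score >= threshold else "manual"


# A login check run on every build with a fixed expected result.
print(suggest_approach(True, True, False))    # automated
# A one-off exploratory session with no fixed expected result.
print(suggest_approach(False, False, False))  # manual
```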

Fitcom is a platform that any health and fitness entity can leverage to build customized applications and cater to their audience. The platform generally launches multiple apps weekly, so testing them manually was impossible. We helped Fitcom adopt a script-based test automation approach that let them execute 500 test cases through parallel testing. We also helped them accomplish functional, performance, and UI testing. As a result, automated scripts helped Fitcom reduce time to market and get ahead of competitors.
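
The parallel testing mentioned in this case study can be sketched with Python’s standard thread pool. This is a generic illustration, not the setup used for Fitcom: `run_case` is a placeholder that stands in for a real test script, and here it simply reports even-numbered cases as passing.

```python
from concurrent.futures import ThreadPoolExecutor


def run_case(case_id):
    """Placeholder for a real test script; even-numbered cases 'pass' here."""
    return case_id, case_id % 2 == 0


def run_in_parallel(case_ids, workers=4):
    """Run the cases on a pool of worker threads and collect pass/fail results."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() returns results in input order even though cases run concurrently.
        return dict(pool.map(run_case, case_ids))


results = run_in_parallel(range(1, 11))
failed = [case_id for case_id, passed in results.items() if not passed]
print(failed)  # [1, 3, 5, 7, 9]
```

Real device tests are mostly waiting on I/O (the device or a remote service), which is why a thread pool is a reasonable starting point.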

Step 3: Preparing test cases for multiple functionalities

To prepare test cases, you can take either of the two approaches mentioned below:

- Requirement-based testing: Testing the performance of a specific app feature

- Business-based testing: Assessing system functionality from a business perspective

In addition, the test case approach also depends on the type of test you want to run. You can divide your test cases into two broad categories:

- Functional testing: Unit Testing, Integration Testing, System Testing, Interface Testing, Regression Testing, and Beta testing.

- Non-functional testing: Security Testing, Stress Testing, Volume Testing, Performance Testing, Load Testing, Reliability Testing, Usability Testing, and API testing.

Step 4: Conducting manual testing

Most testers nowadays will vote for automated testing. However, it’s good to combine manual and automated testing when it comes to agile development. The upfront cost of manual testing is next to none, so you should start with this approach. Next, build up a team of manual testers so that you can run several manual test sessions at the same time.

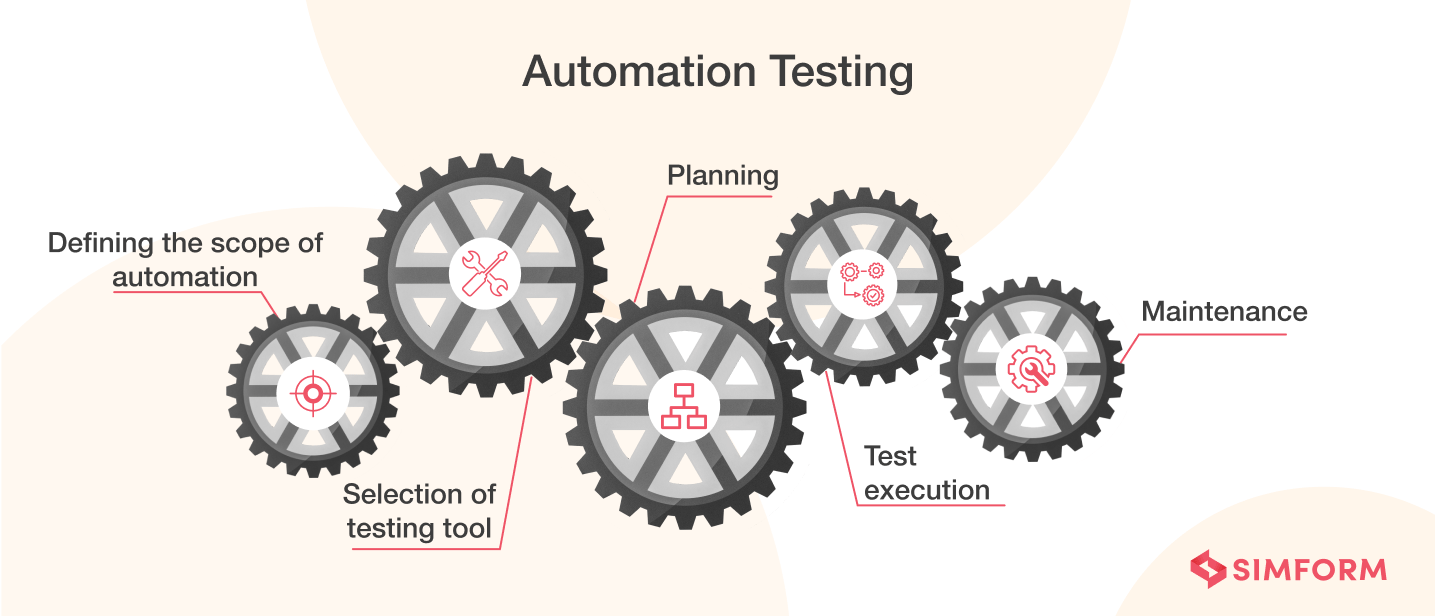

Step 5: Conducting automated testing

After a few manual testing sessions, the focus should be on automated testing. The results from manual testing would help you know which test cases you should automate. When it comes to automation testing, selecting the tools for mobile app testing is paramount.

Here are some suggestions for choosing the best mobile app testing tools:

- The tool should support various platforms

- It should have rich features

- It should produce reusable, change-resistant test cases

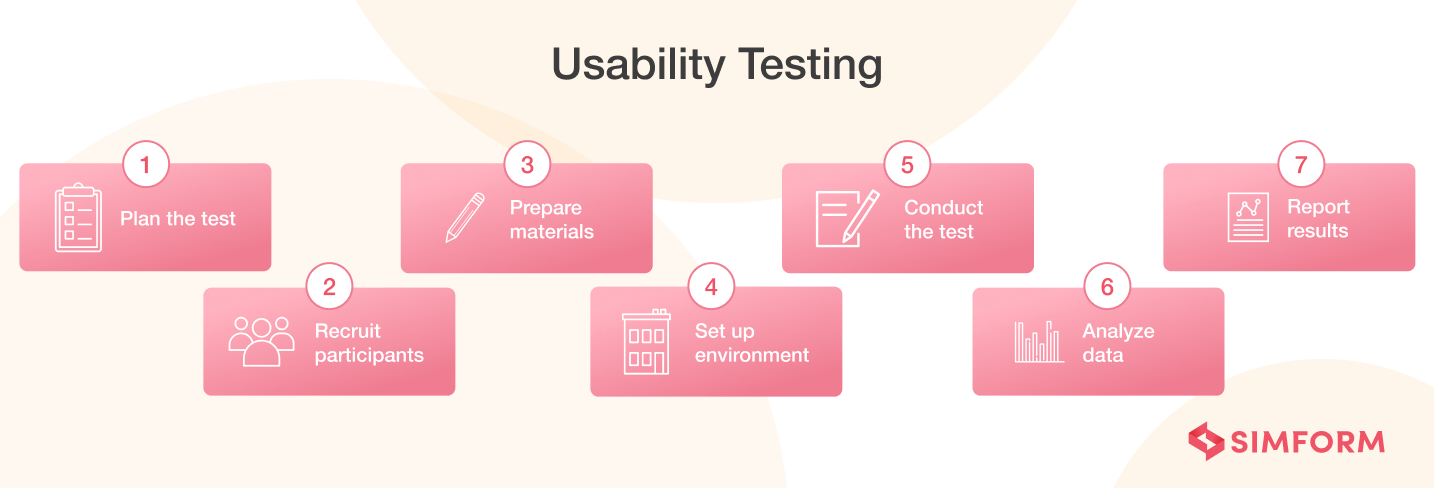

Step 6: Performing usability and beta testing

Usability testing lets developers know which features are well received by users and which they need to discard. Beta testing, on the other hand, should happen when the product is ready, so that you can get feedback from users about the system before the final launch. While usability testing identifies if features work correctly, mobile app beta testing will help you know whether a feature is helpful from the end-users’ perspective.

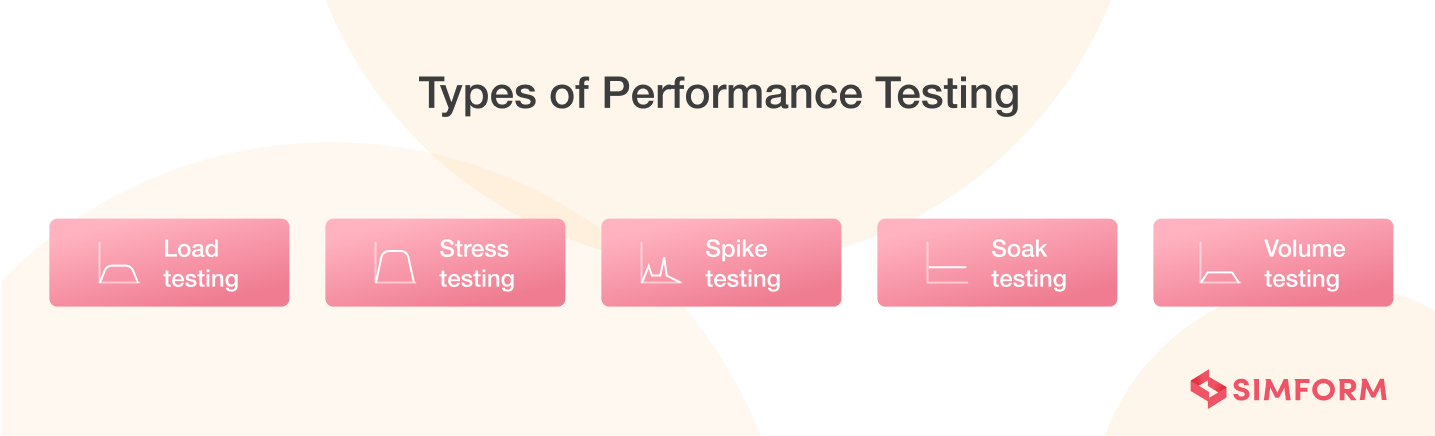

Step 7: Conducting performance testing

Performance testing allows you to understand the performance of the entire application. It comprises mobile app load testing, stress testing, the error/bug ratio, and so on. Generally, you should carry out mobile app performance testing at the end of the mobile app testing process. However, if you conduct this test from the beginning and combine it with unit testing, it will give you a first-hand view of app efficiency.
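
Two numbers that often summarize a load test are a latency percentile and the error ratio. The sketch below computes both from raw samples using the nearest-rank percentile method. The sample latencies and status codes are made up, and counting only HTTP 5xx responses as errors is an assumption.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def error_ratio(status_codes):
    """Share of responses that were server errors (HTTP 5xx)."""
    failures = sum(1 for code in status_codes if code >= 500)
    return failures / len(status_codes)


# Illustrative samples from a hypothetical load test.
latencies_ms = [120, 135, 150, 160, 180, 200, 220, 250, 400, 900]
status_codes = [200] * 18 + [500, 503]

print(percentile(latencies_ms, 50))  # 180
print(percentile(latencies_ms, 90))  # 400
print(error_ratio(status_codes))     # 0.1
```

Tracking the 90th percentile alongside the median matters because a few slow requests, like the 900 ms sample here, are invisible in an average but very visible to users.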

Step 8: Carrying out security and compliance testing

There are a lot of security and compliance standards that mobile app development has to meet to get launched on the app store. You can take the assistance of certification testing for this purpose. Here are some common security standards that certification testing covers:

- HIPAA: security practices for healthcare applications

- PCI DSS: security requirements for payment processing

- FFIEC: security practices for banking and financial-related apps

- GDPR: security guidelines for protecting personal data
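
As a planning aid, the list above can be encoded as a lookup from what an app does to the standards it should be checked against. The sketch below is a hypothetical checklist helper; the trait names are invented, and the mapping only restates the four standards listed here rather than giving a complete compliance assessment.

```python
# Mapping of app traits (hypothetical labels) to the standards listed above.
STANDARDS = {
    "healthcare": "HIPAA",
    "payments": "PCI DSS",
    "banking": "FFIEC",
    "personal_data": "GDPR",
}


def applicable_standards(app_traits):
    """Return the standards to review for an app, sorted alphabetically."""
    unknown = set(app_traits) - set(STANDARDS)
    if unknown:
        raise ValueError(f"unknown traits: {sorted(unknown)}")
    return sorted(STANDARDS[trait] for trait in set(app_traits))


# A fitness app that stores user profiles and sells subscriptions.
print(applicable_standards(["personal_data", "payments"]))  # ['GDPR', 'PCI DSS']
```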

Step 9: Releasing the final version

After completing all the stages, testers should run the application one final time and check whether it works properly with the back-end server. If no bugs or errors are found, it’s time to release the app on the Google Play Store or App Store.

These are the significant steps one needs to take to test mobile applications successfully. However, automated testing is the way forward, so to conduct all these tests, you need to select the best mobile app testing tools, which we will analyze in our next section.

Mobile app testing tools

If you search the web for mobile app testing tools, you will find a massive list of open-source tools, and choosing the best of them is a task in itself. We have listed some of the best mobile app testing tools and their pros and cons to make that process easier.

| Tools | Pros | Cons |
| --- | --- | --- |
| Appium | | |
| TestComplete | | |
| Robotium | | |
| Espresso | | |
| Katalon Studio | | |
| Selendroid | | |

Want to test your mobile app?

Joybird is an online furniture store providing customized furniture and personalized experiences. The store was looking to add new features and components, so there was a need for continuous testing. Our team conducted component testing to verify each component’s functionality and integration testing to check the system’s overall performance. We used TeamCity to implement a CI/CD approach and ensure continuous app testing across multiple devices, browsers, and screens. As a result, the app delivered faster performance and a seamless UX.

From this case study, one thing is clear: mobile app testing is not easy. It requires dedicated effort, and therefore, you need a software testing team with years of experience in testing enterprise applications. So, if you want to test your mobile app, look no further than SIMFORM. Our team of experienced, dedicated, and knowledgeable testers will help you ensure customer satisfaction by delivering high-quality products.