Aging systems often become rigid, harder to scale, and expensive to maintain, especially as user demands and business needs evolve rapidly.

One of the most effective ways to break this rigidity is re-architecting the legacy software to adopt modern, scalable paradigms like microservices or event-driven architecture. For instance, Netflix was able to minimize the frequency and severity of code errors and expand its services across 130 countries by re-architecting its legacy software.

However, deciding to re-architect isn’t straightforward. It requires evaluating various modernization approaches, such as refactoring, rewriting, or rehosting, and choosing one that aligns with both technical feasibility and business goals.

In this blog, we’ll explore the key factors that influence this decision and why re-architecting is often the most strategic path forward for long-term agility and performance.

What is legacy software re-architecting?

Legacy software re-architecting is the process of redesigning an existing software application or system to improve its functionality and scalability. It can involve writing new code, modifying the existing codebase, or a combination of both.

Teams re-architect to speed up the development cycle, reduce maintenance costs, and improve overall performance. How you re-architect depends on the project’s specific needs.

SOA vs. Microservices vs. Serverless: Which architecture is best?

Selecting the right target architecture is essential. For instance, service-oriented architecture (SOA) divides the software into multiple services that work independently and communicate through standard protocols.

Another option is going serverless. This approach is even more granular: development teams build apps from many small, self-contained functions that communicate through APIs, while the cloud provider runs and scales them on demand.

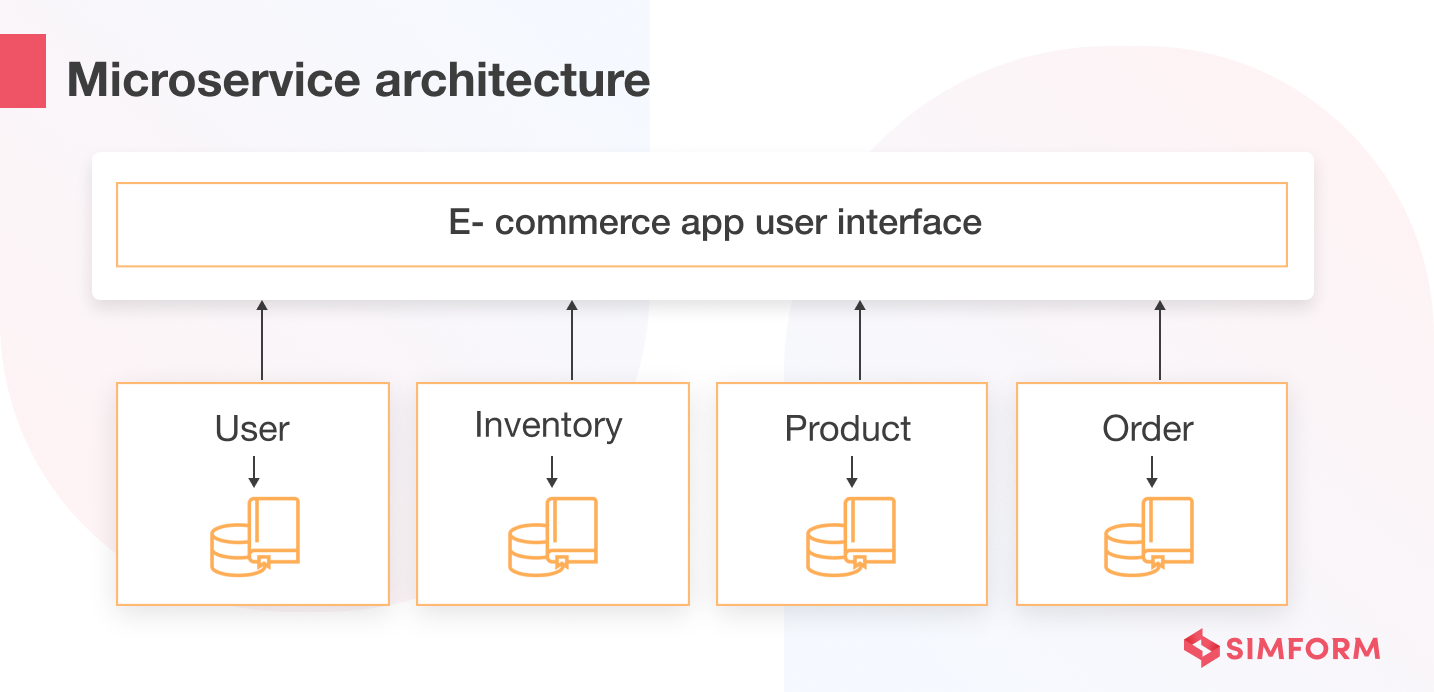

However, our focus will mainly be on microservices: a decoupled structure made up of several independent services. Re-architecting a monolith into microservices can reduce reliance on on-premises hardware and make it easier to integrate cloud services.

Benefits of legacy software re-architecting

Re-architecting legacy software gives businesses a sense of freedom. Imagine customizing your app without worrying about downtime! And customization is only one of the many benefits that re-architecting provides.

Reduced cost of ownership

The total cost of ownership for legacy software increases as businesses scale. Re-architecting reduces it through a decoupled structure and cloud migration. For example, implementing a microservices architecture with containers can help reduce costs.

Containers provide process isolation through OS-level virtualization, so many services can share the same host without each needing a full virtual machine. This reduces infrastructure costs and lowers the overall cost of ownership.

Enhanced business agility

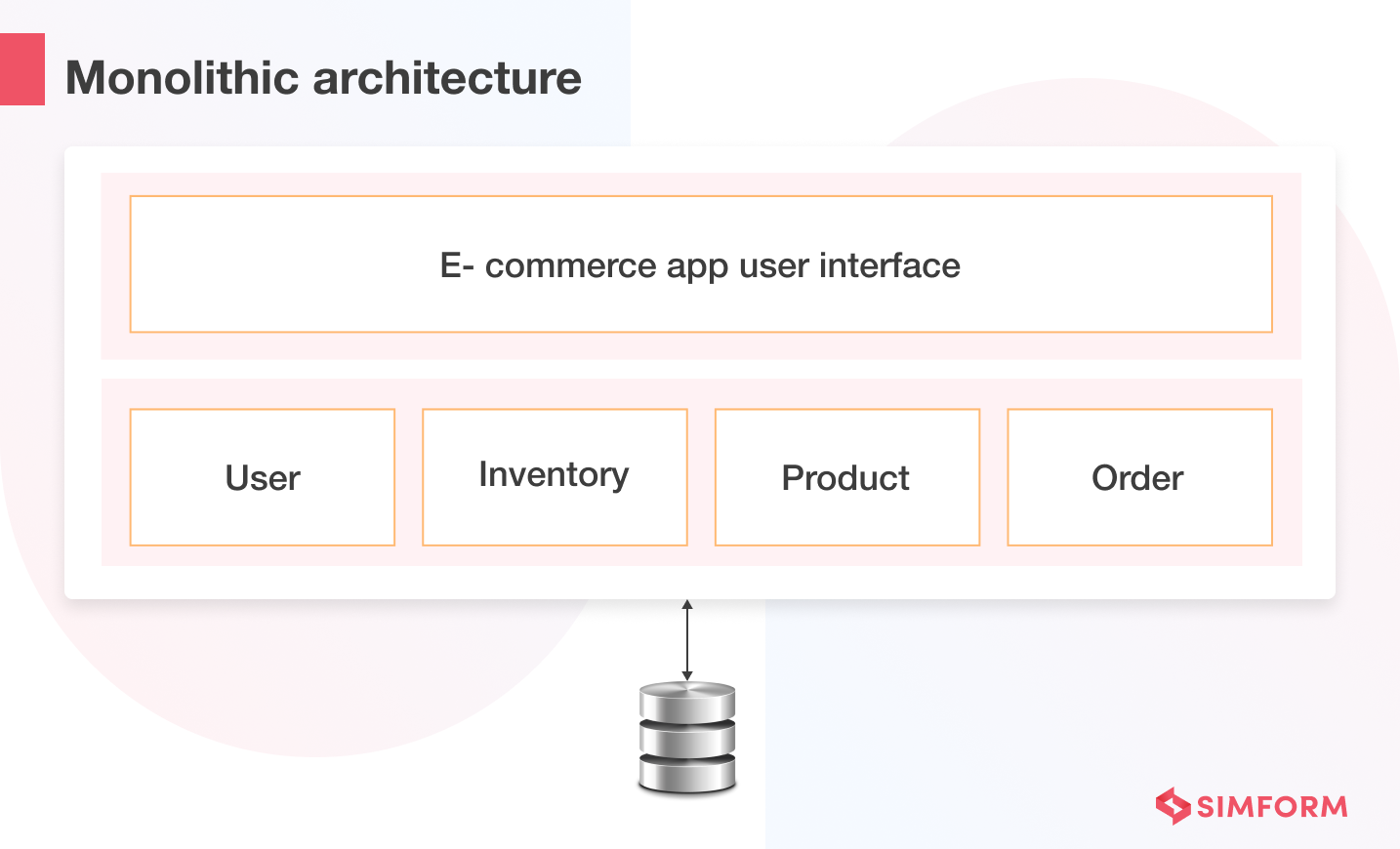

Re-architecting lets businesses add features as market demand shifts. A conventional monolith is tightly coupled, so adding a feature means changing, testing, and redeploying the entire application.

After re-architecting the app into microservices, you can add a feature by changing and deploying only the services it touches, without disrupting operations.

Better security monitoring

Monitoring data at scale takes massive effort, especially with a monolithic architecture. Re-architecting can help you overcome monitoring issues by integrating cloud monitoring and security tools such as:

- Microsoft Azure Monitor

- Amazon CloudWatch

- Cisco CloudCenter

- Cloudflare WAF

- Okta

Improved scalability and resilience

Re-architecting your applications can improve the scalability and resilience of systems by breaking them down into independent services. In a legacy monolith, services are bundled into a single deployable unit and lack extensibility, so adding more services or scaling a single one disrupts the entire app.

If you re-architect the app into independent services, you can scale any one service without disrupting the rest of the app.
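As a rough illustration of scaling one service without touching the others, the sketch below computes a replica count for each service independently. It borrows the proportional rule described in the Kubernetes Horizontal Pod Autoscaler documentation, desired = ceil(current × observed / target), but omits the tolerance and stabilization behavior the real autoscaler applies. The service names, utilization figures, and replica bounds are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class ServiceLoad:
    name: str
    replicas: int               # replicas currently running
    cpu_utilization: float      # observed average CPU utilization (%)
    target_utilization: float   # desired average CPU utilization (%)


def desired_replicas(svc: ServiceLoad, min_replicas: int = 1, max_replicas: int = 20) -> int:
    """Proportional rule: ceil(current * observed / target), clamped to [min, max]."""
    raw = math.ceil(svc.replicas * svc.cpu_utilization / svc.target_utilization)
    return max(min_replicas, min(max_replicas, raw))


def plan_scaling(services: list[ServiceLoad]) -> dict[str, int]:
    # Each service is sized from its own metrics only, so resizing "checkout"
    # never requires redeploying or resizing "catalog".
    return {svc.name: desired_replicas(svc) for svc in services}


if __name__ == "__main__":
    services = [
        ServiceLoad("catalog", replicas=2, cpu_utilization=30.0, target_utilization=60.0),
        ServiceLoad("checkout", replicas=3, cpu_utilization=90.0, target_utilization=60.0),
    ]
    print(plan_scaling(services))
```

Running it prints `{'catalog': 1, 'checkout': 5}`: the busy checkout service scales out while the quiet catalog service scales in, and neither decision depends on the other.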

All the services are decoupled, so the system has better resilience to failures. In other words, you can quickly recover failed services without affecting the app’s availability.
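One common technique for keeping a failure contained is a circuit breaker placed around calls to another service. The class below is a simplified, hypothetical sketch, not the API of any particular library: after `failure_threshold` consecutive failures it opens and returns a fallback immediately, and after `reset_timeout` seconds it lets a trial call through (the half-open state). The `flaky_recommendations` service in the demo is made up.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitBreaker:
    """Closed -> open after N consecutive failures -> half-open after a cool-down."""

    def __init__(self, failure_threshold: int = 3, reset_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock                      # injectable for testing
        self.failures = 0
        self.opened_at: Optional[float] = None

    @property
    def state(self) -> str:
        if self.opened_at is None:
            return "closed"
        if self.clock() - self.opened_at >= self.reset_timeout:
            return "half-open"                  # cool-down over: allow a trial call
        return "open"

    def call(self, fn: Callable[[], T], fallback: Callable[[], T]) -> T:
        if self.state == "open":
            return fallback()                   # fail fast; spare the unhealthy service
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if self.state == "half-open" or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()   # open (or re-open) the circuit
            return fallback()
        self.failures = 0                       # success closes the circuit
        self.opened_at = None
        return result


if __name__ == "__main__":
    def flaky_recommendations() -> list:
        raise ConnectionError("recommendation service unavailable")

    breaker = CircuitBreaker(failure_threshold=2, reset_timeout=10.0)
    for _ in range(3):
        print(breaker.call(flaky_recommendations, fallback=lambda: []), breaker.state)
```

The caller keeps serving an empty recommendation list instead of hanging on a dead dependency, which is the kind of containment that keeps the rest of the app available.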

Integration of DevOps culture

The development and operations teams must collaborate to create efficient systems. By embracing the DevOps culture, you can create a collaborative environment where the development and operations teams work together.

DevOps breaks down silos and boosts collaboration. However, integrating DevOps practices into your process flow requires flexibility, and a monolithic architecture offers little of it because all the services are tightly coupled.

Microservices, by contrast, consist of independent services, which makes it easier to embrace the DevOps culture. Organizations can also assign autonomous teams to individual services.

So, development and operations teams can focus on a single service. Collaborating across several coupled services can be overwhelming when both teams work on a monolithic application. It is far more efficient to have autonomous teams and embrace the DevOps culture.

Like all other approaches, legacy software re-architecting has its benefits and risks. Therefore, you must consider all the risks of re-architecting before deciding to go for it.

What are the risks of legacy software re-architecting?

Re-architecting legacy software carries several risks, including operational disruption, performance issues, impact on customer experience (CX), and more.

Operational disruption

Re-architecting the application architecture can disrupt operations and the development lifecycle. For example, re-architecting 10-15-year-old software requires detailed documentation of all its processes. However, essential documentation may be missing because coding standards were often non-existent ten years ago.

Besides the documentation, engineers who worked on the monolithic architecture may not be available for consultation. So, developers will need more time to understand the existing architecture, leading to delayed releases. Ultimately, operations may suffer downtime due to delayed releases, which is risky for many businesses.

The best way to avoid operational disruption during re-architecting is to properly document the existing architecture before you begin.

Performance bottlenecks

The re-architecting process restructures the architecture and business processes. Consider an eCommerce application with an existing monolithic architecture. As its user base grows and it needs to scale, the monolithic approach becomes a bottleneck.

The root cause is tight coupling. Because all the services are tightly coupled, each time you add a feature or try to scale the app, it can cause performance issues across the whole application.

So, before switching from a monolithic architecture to microservices, take the following into account:

- Total number of services required for re-architecting

- Performance issues while you run the monolithic app in parallel with the microservices under development

- Database bottlenecks, since the database is often monolithic at its core too

- Management of data transactions across microservices
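For managing data transactions across microservices, a widely used pattern is the saga: rather than one distributed transaction, each service commits its own local step, and if a later step fails, the steps already completed are undone by compensating actions in reverse order. The in-process sketch below shows only that control flow; in a real system each step would be a call to a separate service or a message on a broker, and the inventory and payment steps here are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class SagaStep:
    name: str
    action: Callable[[], None]       # local transaction owned by one service
    compensate: Callable[[], None]   # semantic undo, run if a later step fails


@dataclass
class Saga:
    steps: list[SagaStep]
    log: list[str] = field(default_factory=list)

    def run(self) -> bool:
        completed: list[SagaStep] = []
        for step in self.steps:
            try:
                step.action()
            except Exception as exc:
                self.log.append(f"failed:{step.name}:{exc}")
                # Undo the already-committed steps in reverse order.
                for done in reversed(completed):
                    done.compensate()
                    self.log.append(f"compensated:{done.name}")
                return False
            completed.append(step)
            self.log.append(f"done:{step.name}")
        return True


if __name__ == "__main__":
    stock = {"sku-1": 5}

    def reserve_inventory() -> None:
        stock["sku-1"] -= 1

    def release_inventory() -> None:
        stock["sku-1"] += 1

    def charge_payment() -> None:
        raise RuntimeError("card declined")

    def refund_payment() -> None:
        pass  # nothing to refund in this demo

    saga = Saga([
        SagaStep("reserve_inventory", reserve_inventory, release_inventory),
        SagaStep("charge_payment", charge_payment, refund_payment),
    ])
    print(saga.run(), stock, saga.log)
```

Because the payment step fails, the saga releases the reserved item and stock returns to 5, leaving both services consistent without a shared transaction.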

Migrating from monolithic to microservices is not enough, as there can be performance bottlenecks if the implementation is not optimized. One way to ensure optimized microservice implementation is to leverage containerization.

Each container packages a service with the minimum configuration it needs to run, and each microservice can have its own separate database. The best part is that you can start containers and terminate them as needed.
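The idea of giving each service its own separate database can be sketched with two hypothetical services, each holding a private in-memory SQLite database from Python’s built-in `sqlite3` module. The order service never queries the inventory tables directly; it goes through the inventory service’s public methods, which in production would typically be a network API rather than a direct object reference.

```python
import sqlite3
from typing import Optional


class InventoryService:
    """Owns its own database; other services use only its public methods."""

    def __init__(self) -> None:
        self._db = sqlite3.connect(":memory:")  # private to this service
        self._db.execute("CREATE TABLE stock (sku TEXT PRIMARY KEY, qty INTEGER NOT NULL)")

    def set_stock(self, sku: str, qty: int) -> None:
        self._db.execute("INSERT OR REPLACE INTO stock (sku, qty) VALUES (?, ?)", (sku, qty))

    def available(self, sku: str) -> int:
        row = self._db.execute("SELECT qty FROM stock WHERE sku = ?", (sku,)).fetchone()
        return row[0] if row else 0

    def reserve(self, sku: str, qty: int) -> bool:
        # Decrement only if enough stock exists; rowcount tells us whether it did.
        cur = self._db.execute(
            "UPDATE stock SET qty = qty - ? WHERE sku = ? AND qty >= ?", (qty, sku, qty)
        )
        return cur.rowcount == 1


class OrderService:
    """Owns a separate database and talks to inventory only through its interface."""

    def __init__(self, inventory: InventoryService) -> None:
        self._db = sqlite3.connect(":memory:")
        self._db.execute(
            "CREATE TABLE orders (id INTEGER PRIMARY KEY AUTOINCREMENT, sku TEXT, qty INTEGER)"
        )
        self._inventory = inventory  # hypothetical stand-in for a network client

    def place_order(self, sku: str, qty: int) -> Optional[int]:
        if not self._inventory.reserve(sku, qty):
            return None
        cur = self._db.execute("INSERT INTO orders (sku, qty) VALUES (?, ?)", (sku, qty))
        return cur.lastrowid


if __name__ == "__main__":
    inventory = InventoryService()
    inventory.set_stock("sku-1", 2)
    orders = OrderService(inventory)
    print(orders.place_order("sku-1", 1), orders.place_order("sku-1", 5))
```

Keeping each schema private means either service can change its tables, or even its database engine, without breaking the other, as long as the public methods stay stable.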

Impact on CX

Legacy software re-architecting is not just about the backend. The front end may also change, affecting the user interface. Customers are used to your current interface, and when you change it, they need to relearn it, so the new interface comes with an initial learning curve.

So, there is no denying that re-architecting the application can impact the customer experience. One possible solution is to integrate user feedback at each stage of the re-architecting process. You can leverage agile practices to ensure feedback integration with each iteration.

Lack of communication among teams

When you re-architect an application from a monolithic to a microservice architecture, some legacy services will run in parallel with the newly developed services. So, you have to split your teams to work on the legacy services, the new ones, or both.

Splitting your development team can cause collaboration and communication problems. To optimize human resources, analyze how many professionals you need to re-architect the app while running legacy components in parallel. Based on that analysis, you can allocate your team and, if required, hire skilled professionals.
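When legacy services run in parallel with new ones, a common way to divide traffic between them is the strangler fig pattern: a routing layer sends requests for capabilities that have already been migrated to the new microservices and everything else to the monolith. The sketch below is a minimal, hypothetical version; the path prefixes and lambda handlers stand in for real HTTP routing and services.

```python
from typing import Callable

Handler = Callable[[str], str]


class StranglerRouter:
    """Sends migrated path prefixes to new services and everything else to the monolith."""

    def __init__(self, legacy: Handler) -> None:
        self.legacy = legacy
        self.migrated: dict[str, Handler] = {}

    def migrate(self, prefix: str, service: Handler) -> None:
        # Cut one capability over to a new microservice; the rest stays on the monolith.
        self.migrated[prefix.rstrip("/")] = service

    def handle(self, path: str) -> str:
        # Match whole path segments ("/catalog" must not capture "/catalogue") and
        # prefer the longest prefix so "/orders/returns" can move before "/orders".
        matches = [p for p in self.migrated if path == p or path.startswith(p + "/")]
        if matches:
            return self.migrated[max(matches, key=len)](path)
        return self.legacy(path)


if __name__ == "__main__":
    router = StranglerRouter(legacy=lambda path: f"monolith handled {path}")
    router.migrate("/catalog", lambda path: f"catalog-service handled {path}")
    print(router.handle("/catalog/42"))
    print(router.handle("/orders/7"))
```

Each `migrate` call is a small, reversible cutover, which also gives teams a clear record of which capabilities the legacy team still owns.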

Tech stack redundancy

One of the key reasons to re-architect legacy software is an outdated, redundant tech stack. However, that same outdated stack can also cause issues during re-architecting, such as:

- Lack of compatibility with cloud architecture and modern services

- High cost of replacing outdated technology

- Need for skilled professionals

- Resource requirements

Such issues can put the re-architecting project at risk by increasing the time and resources needed to overcome them. So, assess your tech stack before re-architecting the legacy software and plan accordingly.

Re-architect vs. Refactor vs. Rewrite: Why does re-architecting stand apart?

Modernizing the legacy software requires consideration of different aspects like code structure, compatibility of new integrations, and system stability.

The refactoring approach involves minor changes to the code structure without changing its core functionality. It allows developers and programmers to reduce complexity.

Rewriting, on the other hand, is a complete overhaul in which most of the legacy code is scrapped and rewritten.

Many developers and programmers use rewriting and re-architecting interchangeably. However, re-architecting overhauls the code structure, creating a new architecture for better efficiency, readability, and flexibility.

Re-architecting focuses on keeping the app code flexible and stable as you integrate new services or applications. So, if you want to add functionality to your application by integrating services, choose to re-architect your legacy software.

Here’s the comparison showing how re-architecting provides better service integrations, minimal bugs, system stability, and other benefits.

| Differentiators | Rewriting | Refactoring | Re-architecting |
| --- | --- | --- | --- |
| Code changes | Complete overhaul | Minor changes | Moderate changes |
| Changes in functionality | New functionality | Code functionality remains the same | Functionality can stay the same or change as per need |
| Integration of services | Needs custom code for integrations | Integrations are complex | Facilitates integration of internal and external services |
| System stability | Depends on code quality | Lower stability | Higher stability of the system |

Re-architecting is clearly an attractive modernization approach for many businesses, but like any other approach, you need to analyze whether it suits your system.

Best practices to leverage legacy software re-architecting

Getting the implementation of legacy software re-architecting right is essential. You can create a framework for re-architecting, reverse engineering, and code refinement.

Proof of architecture approach

Legacy software re-architecting requires running the new and old architectures in parallel. So, make sure your architecture approach works before committing to it, or you will waste time and resources.

One way to have proof of architecture is to create a minimum viable version. For example, you can build specific services and test their efficiency. You can migrate from monolithic to microservices if the test results for specific services meet your pre-defined requirements.
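A minimal sketch of that go/no-go check: take load-test latencies for a pilot service plus a count of failed requests, compute the 95th-percentile latency (nearest-rank method, chosen here for simplicity) and the error rate, and compare both with predefined thresholds. The threshold values and sample data are hypothetical; real requirements would come from your own service-level objectives.

```python
import math
from dataclasses import dataclass


@dataclass
class Requirements:
    max_p95_latency_ms: float
    max_error_rate: float  # fraction of failed requests, e.g. 0.01 = 1%


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile, pct in (0, 100]."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def meets_requirements(latencies_ms: list[float], errors: int, req: Requirements) -> dict:
    """Compare a pilot service's load-test results against predefined targets."""
    p95 = percentile(latencies_ms, 95)
    error_rate = errors / len(latencies_ms)
    return {
        "p95_ms": p95,
        "error_rate": error_rate,
        "pass": p95 <= req.max_p95_latency_ms and error_rate <= req.max_error_rate,
    }


if __name__ == "__main__":
    samples = [float(ms) for ms in range(10, 201, 10)]  # hypothetical latencies
    print(meets_requirements(samples, errors=0, req=Requirements(200.0, 0.01)))
```

Encoding the requirements as data makes the migration decision repeatable: the same check can run against every pilot service before you move more of the monolith.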

Build a re-architecture framework

You can build a legacy software re-architecture framework by:

- Identifying the core functionality of the legacy software system. It will help you focus efforts on improving specific areas and minimizing disruption or loss of data.

- Determining the modernization path for your application. You can choose an approach such as replacement or refactoring based on your objectives.

- Developing comprehensive plan specifications, including requirements documentation and system-level design diagrams.

- Identifying components that only need updating. Not all legacy code needs to be rewritten; sometimes, updating or modernizing certain features or components is enough, and the re-architecture framework helps you identify them.

Extract the legacy code

Extraction is crucial for understanding procedural abstractions. It allows you to analyze the existing structure before redesigning it.

So, while extracting, analyze the source code at different levels of abstraction, such as system, program, module, and pattern. Also, evaluate the old architecture and extract specifications from the source code to understand how the database connects with the front end and other processes.
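For a Python codebase, a first pass at module-level extraction can use the standard library’s `ast` module to list what each module imports, which hints at how components connect. Legacy systems are more often COBOL, C, or Java, where you would use that language’s parser or a dedicated analysis tool; this sketch only illustrates the idea, and the module names in the demo are hypothetical.

```python
import ast


def imported_modules(source: str) -> set[str]:
    """Return the top-level module names imported by a piece of Python source."""
    names: set[str] = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            for alias in node.names:
                names.add(alias.name.split(".")[0])
        # Absolute "from x import y" only; relative imports (level > 0) are skipped here.
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            names.add(node.module.split(".")[0])
    return names


def dependency_map(modules: dict[str, str]) -> dict[str, set[str]]:
    """Map each module in the codebase to the other in-codebase modules it imports."""
    known = set(modules)
    return {name: imported_modules(src) & known for name, src in modules.items()}


if __name__ == "__main__":
    codebase = {
        "billing": "import db\nfrom reports import monthly\n",
        "reports": "import db\nimport os\n",
        "db": "import sqlite3\n",
    }
    for module, deps in dependency_map(codebase).items():
        print(module, "->", sorted(deps))
```

A map like this shows, for example, that many modules depend on `db`, which suggests where service boundaries and data ownership need the most care.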

Reverse engineer the extracted code

Reverse engineering is the process of analyzing the extracted code to recover higher-level, abstract representations of the system. These representations let you create a new system design, especially at the architecture level.

Reverse engineering also serves other purposes, such as auditing the system for security, customizing APIs, and adding features. So, you need skilled professionals with knowledge of multiple programming languages, security best practices, and code refinement.

You can also use tools such as:

- Disassemblers to convert binary code into assembly code and extract strings and imported and exported functions.

- Debuggers to set breakpoints, step through execution, and edit the assembly code.

- Hex editors to view binary code in a readable format and make changes as per software requirements.

- PE and resource viewers to inspect the structure of Windows executables (Portable Executable files), such as their headers, imports, and embedded resources.

Refine the reverse-engineered code

Code refinement is the process of identifying, fixing, and reducing redundancies and broken elements in the code. It involves:

- Parsing the code for reverse engineering purposes and identifying key errors

- Performing the reverse engineering process to update the class model from selected files

Updating the class model and refining the code requires consideration of different functions. You can use the Algebraic Hierarchical Equations for Application Design (AHEAD) model for code refinement. It is a mathematical approach where an equation is used for each code representation of a service or program.

Legal & General brought stability and speed with code refinement

Legal & General, one of the UK’s largest insurers, was operating on 60-year-old mainframe systems. To modernize critical services without disruption, the teams adopted a service-by-service re-architecture approach using Microsoft Azure.

They built minimum viable versions of core services using Azure App Service, Azure SQL Database, and Event Hubs, running them in parallel with the mainframe to validate performance.

Legacy COBOL logic was extracted, reverse-engineered, and refined using domain-driven design. Redundant code paths were eliminated, and workflows were optimized to support cloud-native patterns.

Azure DevOps pipelines accelerated delivery with automated builds, tests, and deployments. Azure Monitor provided visibility into application health and performance across all environments.

Lee Taylor, Technology Director at Legal & General, says, “With Microsoft Azure, we’ve moved from four rigid release windows per year to continuous delivery, reducing technical debt and focusing our teams on innovation.”

60 years of legacy logic is now being re-architected into modular, maintainable services with faster release cycles and greater system reliability.

Visualize the changes

Legacy software re-architecting brings many changes to your system, and visualizing those changes helps you control the new system better. Some visualization tools that support dynamic system analysis are:

- Tableau provides hundreds of data import options and mapping capabilities for your systems. Further, it has an extensive gallery of infographic templates and visualizations to make your data easy to understand for the operational teams.

- Infogram is a drag-and-drop visualization tool that allows even non-designers to create data visualizations with infographics, social media posts, maps, dashboards, and more.

- D3.js is a JavaScript library for manipulating documents based on data to create visualizations. However, it requires some JavaScript knowledge, so you may prefer alternatives like NVD3, which offers reusable charts built on D3.

- Google Charts is a free data visualization tool that you can use to create interactive charts. It works for both static and dynamic data outputs. You can use it for maps, scatter charts, histograms, and more.

- FusionCharts is another JavaScript-based option for creating interactive dashboards for data visualization. It provides more than 1,000 types of maps and lets you integrate its maps and charts into popular JS frameworks.

Measure re-architecting success through metrics

Data visualizations add little value without specific parameters to analyze them. So, define metrics for better visualization and system tracking. Some key metrics include:

- Cyclomatic complexity is a quantitative measure of the number of linearly independent paths through a section of code. In other words, it shows how many different ways the code may execute to implement business logic.

- Cohesion measures how focused each class is. If a class does not stick to the responsibility it was created for, the system becomes confusing.

- Coupling measures how related or dependent two classes or modules are on each other. Higher coupling hurts maintainability, making the code harder for the organization to change.

- Afferent coupling (Ca) counts the classes outside a package that depend on classes inside it, while efferent coupling (Ce) counts the classes inside a package that depend on classes outside it.

- Abstractness (A) and instability (I) let you measure an assembly’s distance from the main sequence, the ideal line where A + I = 1. Instability is I = Ce / (Ca + Ce), where Ce and Ca are the efferent and afferent coupling; abstractness is the share of abstract types in the assembly; and the distance is D = |A + I - 1|. The smaller the distance, the better the assembly balances abstractness and stability.

A framework for re-architecting legacy software enables you to have stable and efficient systems.

Why do tech leaders re-architect into microservices?

Microservices is an architecture preferred by giants like Netflix, Amazon, and others. The question is – why do tech leaders choose microservices over monolithic architecture?

Microservices: the remedy to monolith legacy!

Microservices aren’t always the first choice, but they quickly become the cure for monolithic ailments. Take UBS’s journey from a 2 PB mainframe archive to microservices on Azure.

UBS inherited ELAR, a mainframe Db2 system with 200 billion records across 50,000 tables, through its acquisition of Credit Suisse. Every time users needed to search, index, or retrieve documents, the monolith throttled performance. Batch jobs ran nightly, and any single failure could halt the entire archive.

So, UBS re-architected ELAR service by service on Azure, achieving:

- Freedom to pick the right tools. They containerized ingestion, indexing, and retrieval functions on Azure Kubernetes Service and Azure Functions, and moved metadata into Azure SQL Database Hyperscale.

- Flexibility to evolve quickly. With Azure DevOps pipelines, each microservice follows its own CI/CD path, and updates that once took months now roll out in days.

- Fault isolation without fallout. Using Azure Service Bus for messaging and Azure Monitor for telemetry, failures stay contained to a single service rather than cascading across the system.

Daniel Tanner, Stream Technical Lead at UBS, says, “Transforming a legacy mainframe archive into a modern cloud solution unlocked continuous delivery and cut TCO by 60%.”

Microservices on Azure gave UBS the agility to innovate faster, cut costs, and keep the archive humming, even under peak load.

Re-architecting the legacy software with Simform

Like UBS, many organizations are shifting from legacy systems to cloud-native architectures to improve scalability, reduce costs, and accelerate development. But re-architecting platforms built on mainframes or monoliths often demands deep technical rethinking.

Take, for example, an edtech company delivering business intelligence to school boards, principals, and administrators. With a tight 12-month timeline to rebuild their analytics platform, they partnered with Simform to modernize their architecture.

Our engineers re-architected the legacy system into a microservices-based platform, enabling stable, scalable deployment across 2,000 schools. The new setup now supports faster data delivery, simplified rollout of new features, and reduced operational overheads.

Similarly, if you’re considering re-architecting your system or adopting microservices, sign up for a 30-minute session with our experts!