We humans create a humongous amount of data every day. The following are just some of the mind boggling facts about data created every day.

-

2.5 quintillion bytes of data is created every day, that’s 18 zeros after 2.5!.

-

5 billion Snapchat videos and photos are shared per day.

-

Users send 333.2 billion emails per day.

According to IBM, most of the data produced in the digital domain is unstructured data. Modern data analytics tools help you make sense of this data and gain some tangible benefits out of this data. Amex GBT, the global business travel company has reduced its travel spending by 30% through the efficient use of big data analytics. Such success stories have inspired businesses all over the world.

Are you a businessperson looking to leverage the power of big data analytics? If yes, then you will need to solve some database challenges first. This is because a robust database is a prerequisite for successful data analytics. AWS provides a plethora of solutions to help you.

At Simform, while working with clients on developing big data analytics solutions, we came across certain common database challenges faced by organizations. We decided to assimilate our knowledge in this blog.

Top 5 Modern Data Challenges

Before we proceed towards discussing the big data challenges, understand that first you must choose the right AWS database for your requirements. By doing so, you will nip a lot of data issues in the bud.

#1 Data quality

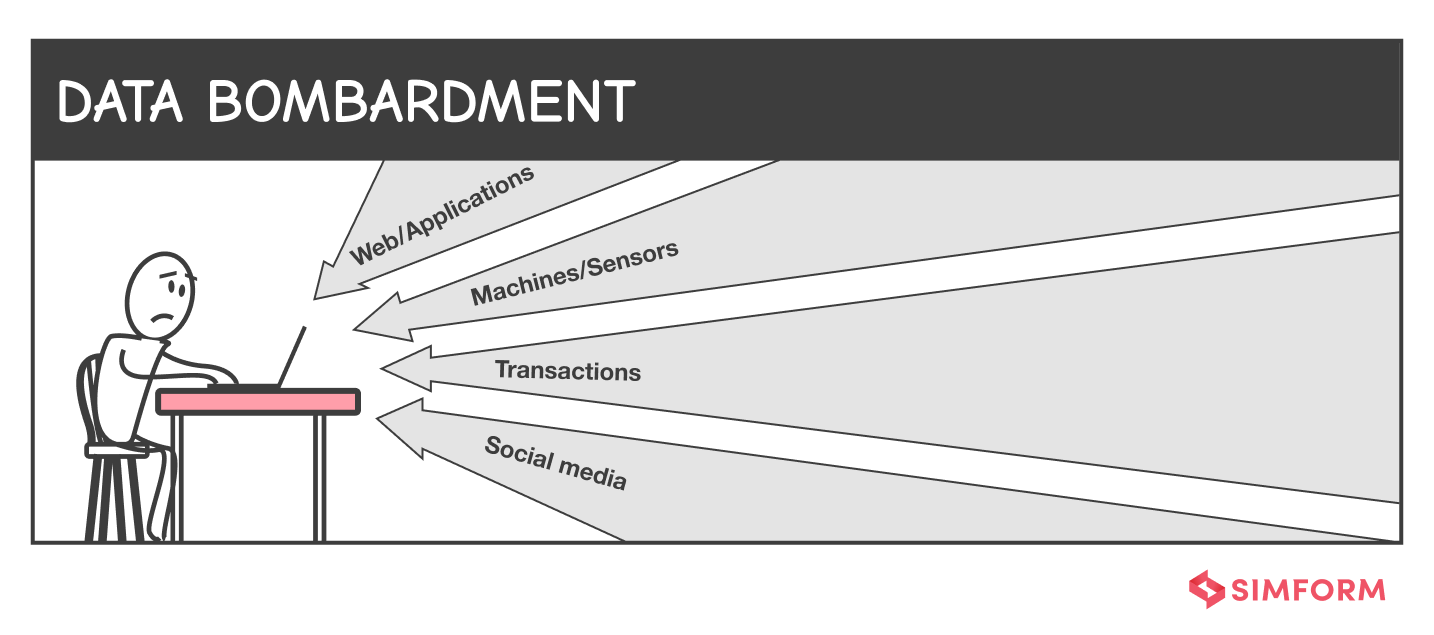

Data disparity is one of the major challenges that one needs to overcome to achieve better data quality. Data quality can prove difficult to maintain especially when you have heterogeneous sources of data. To use this data you have to consolidate data and make it consistent.

This is because a data analytics system can only process the data in a single format. Moreover, it’s not possible to hire people to manually format the data when big data is involved. Hence you require a solution that can streamline all the data inputted into the system into a single format.

Understand that in most cases you will be relying on third-party software companies for these tools. Hence there is a high chance that each of these different companies will be inputting and updating data in different data formats. For instance, one team might enter the date as 26-7-2022 while the other team might enter the same data in the format 26th July 2022. This disparity in data formats can create problems when you want to collate the data into one source.

Mentioned below are just a few of the sources from where your business will need to collect data.

Confused? AWS can help, let’s see how.

Amazon EMR

Amazon EMR is one tool that helps you improve data quality. Instead of sending raw data directly to log analytics tools, you can first send it to a data lake and then filter the data using Apache Spark. Amazon EMR happens to be the best place to run Apache Spark. With Amazon EMR, you can quickly build managed Apache Spark clusters.

Using Spark Streaming on EMR, you can process real-time data from Amazon Kinesis, Apache Kafka, or other data streams of your choice. Amazon EMR allows you to perform streaming analytics in an error-free way. You can even write results to S3 with Amazon EMR. The service consistently monitors the health of cluster instances. Amazon EMR even replaces the failed or poorly performing instances automatically.

Salesforce DMP used Apache Spark with Amazon EMR along with other tools like AWS Data Pipeline to increase its efficiency by 100%.

AWS Glue

AWS Glue is a fully managed ETL solution that can help you solve the data disparity problem. Using AWS Glue, you can load data from disparate static or dynamic data sources into a data lake or a data warehouse. By doing so, you can easily integrate information from different sources and provide a common data source to your applications. AWS Glue easily integrates with AWS data services like Amazon Athena, Amazon EMR, and Amazon Redshift. AWS Glue also integrates easily with a lot of third party applications.

You can combine different tools with AWS services to refine your data quality. PyDeequ is an open-source Python tool that helps you test data quality. Amazon has shown how you can combine PyDeequ with AWS Glue to help users monitor data quality in a data lake.

By combining PyDeequ with AWS Glue, you can easily check for anomalies in your data over time within your ETL workflows. The following are the use cases of PyDeequ with AWS Glue

-

Count and identify mismatched schema items

-

Review incoming data with standard or custom data analytics

-

Track changes in data quality

-

Identify and create constraints based on data distribution

-

Immediately correct the mismatched schema items that the system identifies

Simform used AWS Glue when one of our clients was facing data disparity issues as its product managed data from hundreds of school districts spread across the country. We used the well architected framework to build a scalable ETL architecture using AWS Glue, AWS Athena and AWS Redshift using Apache Airflow.

AWS Glue DataBrew

AWS Glue DataBrew is a tool that helps you clean and normalize data up to 80% faster. With AWS Glue Databrew you can easily find data quality issues like

-

Outliers in the data

-

Missing values

-

Duplicate values

DataBrew also allows you to set data quality rules so that you can perform conditional checks based on your business requirements. For instance, if you own a manufacturing business and want to ensure that there are no duplicate values in a specific column(like part ID), then you can do so with AWS DataBrew. Once you create and validate rules, you can then use the following AWS services to create an automated workflow.

-

Amazon EventBridge

-

AWS Step Functions

-

AWS Lambda

-

Amazon Simple Notification Service (Amazon SNS)

These services will notify you once a particular rule fails. This way, it will be easier for you to maintain the data quality of your database.

Before you write custom code for data preparation, you should try AWS Glue DataBrew. This is because AWS Glue DataBrew provides you with over 250 pre-built transformations which help you automate your data quality checks.

#2 Robust security needs

The cost of an average cloud data breach is 4.35 million in 2022. According to a report, one in four organizations operating on the cloud infrastructure have reported a security incident within the last 12 months. If your organization plans to deploy cloud infrastructure, then you need to ensure that you have a robust cloud security mechanism. With lax database security measures, you not only lose face in front of your customers in case of a security event, you can also get in the bad books of security agencies. This can lead to millions in damages along with the prolonged legal cases in various countries that your organization might need to handle.

As an AWS user, you get access to AWS Lake Formation. It’s a cloud-native AWS service that helps you automate a lot of tasks required to build secure data lakes. Thus taking human error out of the equation. The AWS Lake Formation acts as a safe and centralized data repository for all kinds of data. You can also enforce security policies pertaining to your product/service.

Data privacy

With the advent of laws like the GDPR(European Union General Data Protection Regulation) and The California Consumer Privacy Act, the way in which global companies handle data has changed drastically. If your business is a global one that handles data coming under the purview of these acts, then you need to take extra care in the way you collect, process and store the personally identifiable information of your users.

This is because the cost of failing to do so is very high. There are harsh penalties if your organization is found guilty of breaching these regulations.

AWS has internationally recognized certifications like

-

ISO 27001 for technical measures,

-

ISO 27017 for cloud security

-

ISO 27018 for cloud privacy

-

SOC 1, SOC 2 and SOC 3

-

PCI DSS Level 1

-

BSI’s Common Cloud Computing Controls Catalog (C5)

All these certifications ensure that the AWS services are GDPR compliant.

Amazon offers a GDPR-compliant Data Processing Addendum (DPA). This helps you as an AWS customer comply with GDPR contractual obligations. The AWS services are compliant with the CISPE Code of Conduct. This means that the AWS customers can fully control their data. The CISPE Code of Conduct compliance of the AWS services fulfills the adherence to a “code of conduct” clause in the GDPR regulations. Moreover to help its customers, AWS continuously conducts training sessions on navigating the GDPR compliance using AWS services.

Amazon provides the following services to enhance the security of your application

Amazon Macie

Amazon Macie is a completely managed data privacy and security service. Macie uses machine learning and pattern recognition algorithms to detect and protect sensitive data like names, credit card information and addresses . Thus, Amazon Macie can help you become compliant with GDPR. Amazon Macie also helps you define your own custom sensitive data types. This way you can protect sensitive data that is unique to your business.

AWS KMS

The AWS Key Management Service (AWS KMS) is an AWS service that helps you create and manage cryptographic keys for a wide range of AWS services. AWS KMS uses hardware security modules which are either validated or are under the process of being validated under FIPS 140-2. You can get 20,000 requests per month free in the AWS free tier. Using AWS KMS you can

-

Perform digital signing operations

-

Encrypt data in your operations

-

Ensure compliance

-

Achieve centralized key management

-

Manage the encryption for AWS services

AWS Secrets Manager

The AWS Secrets Manager is a service that allows you to easily manage secrets needed to access your applications and IT resources. These secrets can be credentials, API keys and other secrets. The users and applications that want to gain access to these secrets can do so via a call to the secrets manager APIs. The secrets manager has built-in integration for Amazon RDS, Amazon Redshift and Amazon DocumentDB. You can even use fine-grained permissions to control access to these secrets.

#3 Data processing speed

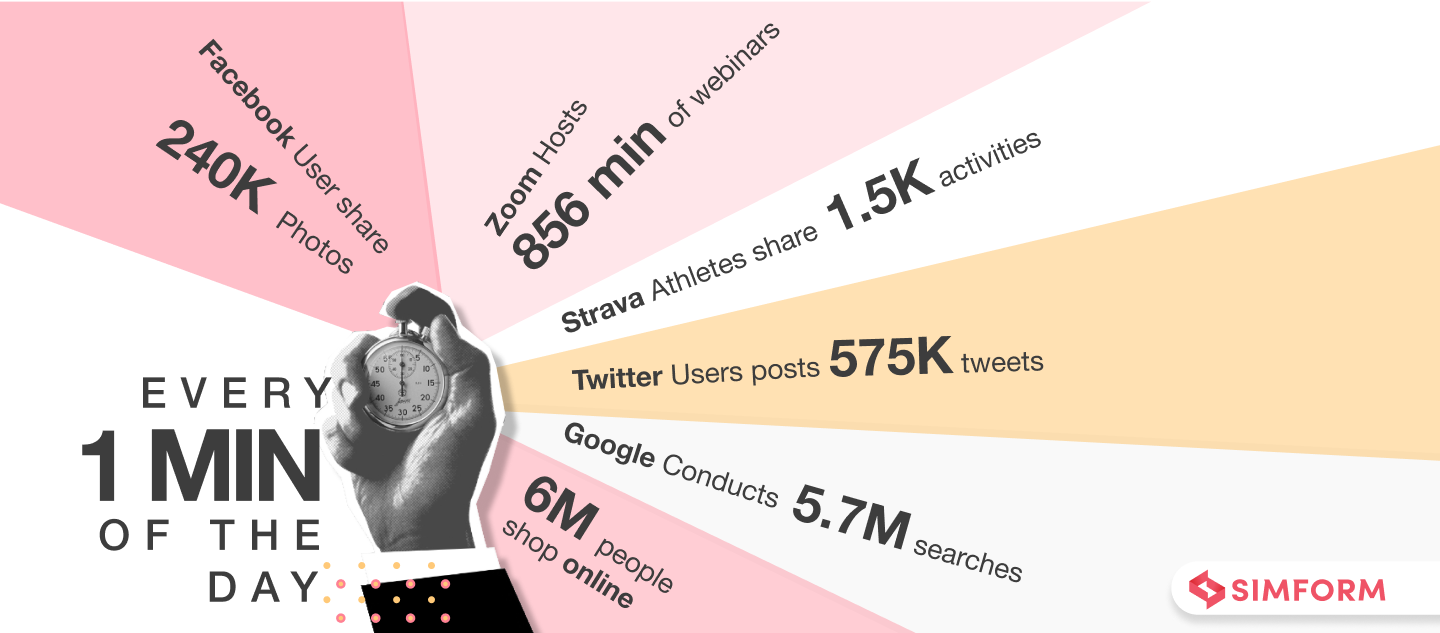

Understand that one of the biggest modern data challenges is to process data at lightning speeds, because it comes into the system at same speeds. The following are just a few facts that will show the blazing speed at which data is being generated every single minute.

Just 5 years ago, it was possible to use a batch process to analyze data. The batch process approach is feasible when the speed at which data comes into the system is slower than the batch processing rate. The batch process fails when data streams into the server at higher speeds than the batch processing rate. This is seldom the case with modern day applications who need to process data in real time. The example of such applications would be Multiplayer games like PubG.

You will require tools that can collect, analyze and manage all this data in near real time.

Amazon EMR

Amazon EMR is a service that allows you to run

-

Large-scale distributed data processing jobs

-

Machine learning applications

-

Interactive SQL queries

Amazon EMR does this by using open-source analytics frameworks like Apache Spark, Hive and Presto.

Using Amazon EMR, you can process petabyte-scale databases up to 2X faster. You can easily process data from streaming data sources in real-time using Amazon EMR. It also helps you build efficient streaming data pipelines that are both long-running and highly available.

Integral Ad Science (IAS) is a digital ad verification company. The job of the company is to ensure that the digital ads put up by companies are viewed by real people and not bots. The company deploys Amazon EMR to process over 100 billion web transactions per day. The company responds to API calls in 10-50 milliseconds.

Amazon Athena

Amazon Athena is an easy to use and interactive database query service. Using Amazon Athena, you can easily analyze data in Amazon S3 using standard SQL. The major benefit of Amazon Athena is that it is serverless and you do not need to manage infrastructure. This helps you cut costs as you pay only for the database queries that you run. With Amazon Athena, you do not need to set up a complicated process to extract, transform and load the data (ETL). You can just find your data in Amazon S3, define schema and start querying.

Another benefit with Amazon Athena is that it executes queries in parallel automatically. This is why the results of most queries are generated within seconds.

Siemens mobility solutions uses Amazon Athena as a part of a comprehensive AWS services solution. The company connects its Railigent system to its trains using these AWS services. Earlier Siemens used batch-processing. The minimum compete latency was 15 minutes at that time. Now by using services like Athena along with other AWS services, the company plans to push this time in seconds rather than minutes.

Amazon Elasticsearch

The Amazon Elasticsearch is a distributed search and analytics engine. The engine is built on Apache Lucene. You need to send data in the JSON format using tools like Amazon Kinesis Firehose and Logstash. You can then search and retrieve the data using the Elasticsearch API. With Amazon Elasticsearch you can also use a tool known as Kibana. This is a visualization tool that helps you visualize your data to digest it easily.

Amazon Elasticsearch can easily process large volumes of data in parallel. This is due to the distributed nature of Elasticsearch which helps it in quickly finding the best matches for your queries. With Amazon Elasticsearch you can complete operations like reading and writing data within seconds. This makes Elasticsearch an ideal solution for near real-time use cases.

#4 Data backup

A lot of organizations all across the globe need to store data in a secure manner for the long term. Some examples of such organizations are

-

Government agencies

-

Financial institutions

-

Healthcare service providers

The organizations need to do this to comply with the regulatory and business requirements. The data stored as backup must be secure and immutable. One way of storing data in such a manner is to store data via a centralized immutable backup solution. Such a backup solution will create and store data in secure vault accounts.

AWS backup

AWS backup is a fully managed service that allows you to easily backup data across a wide range of AWS services and hybrid workloads using the AWS Storage Gateway. You can use AWS backup to centralize and automate the data backup processes using AWS resources like

-

Amazon EBS volumes

-

Amazon RDS databases

-

Amazon DynamoDB tables

-

Amazon EFS file systems

-

AWS Storage Gateway volumes

With AWS Backup you do not need to create custom scripts as the solution automates and consolidates the previously performed backup tasks. AWS backup is a cost-effective and policy-based service that simplifies the process of data backup and allows you to take backup at scale. With AWS backup, you can protect your system against malicious activities and ransomware attacks. You can fulfill your regulatory obligations with AWS Backup.

#5 Manage growing costs

According to a survey, enterprises are overspending on their cloud infrastructure by $8.75 million on an average. Around 33% of the survey respondents said that big data management in the cloud was one of their top priorities. You can save substantial database costs in the cloud by using AWS services like the Amazon S3 Intelligent-Tiering storage class.

Amazon S3 Intelligent-Tiering storage class

This is a cloud storage class that delivers automatic storage cost savings whenever there is a change in the data access patterns. The main benefit of the Amazon S3 Intelligent-Tiering storage class is that it does so without impacting the performance or the operational overheads. The Amazon S3 storage class charges you a small monthly fee and in return it automatically moves the objects that haven’t been accessed in a while to lower cost tiers.

If the objects are not accessed for 30 consecutive days, then it moves them to the infrequent access tier saving 40 %.

After 90 days the objects are moved to the Archive Instant Access tier saving 68% in the process

You can even choose to activate the automatic archiving capabilities in case your data is accessed in an asynchronous manner. Amazon S3 can also work with a Multi-region database architecture providing you the flexibility of deploying your database across various regions.

The Amazon S3 storage class is the ideal storage class in cases where the data has unpredictable access patterns. You can also choose serverless databases for handling unpredictable loads. You can easily use the Amazon S3 Intelligent-Tiering Storage Class for virtually any workload.

The following are the major benefits of the Amazon S3 Intelligent-Tiering Storage Class

-

All access tiers have same performance of S3 standard

-

The storage class is designed for a durability of 99.999999999% of objects across multiple Availability Zones for 99.9% availability annually

-

There are no operational overheads, lifecycle charges, retrieval charges and no minimum storage duration with the S3 storage class

Apart from Amazon S3, there are other cloud cost optimization strategies that can help you reduce your costs.

Overcome your database challenges with Simform

Simform is a cloud consulting provider with deep AWS expertise. When you hire us, you will in essence hire a company that has vast experience in providing business solutions using AWS services. We will ensure that your cloud database is free from all the above-mentioned challenges. Let’s build a better cloud solution for your business together, contact us to know more.