Serverless computing is one of the most popular technologies today. The days of running every workload on in-house servers are largely gone. There are numerous serverless platforms to choose from, starting with prominent services such as AWS Lambda, Google Cloud Functions, and Microsoft Azure Functions. However, choosing the right platform depends on various factors such as functionality, performance, and pricing. The choice you make will also have a significant impact on your project's software roadmap.

Considering the long-term success of your project, platforms should also be evaluated in terms of their service integrations. Moreover, the cost of choosing the wrong serverless platform is high, with consequences including vendor lock-in and data lock-in, as Patrick Debois, CTO at Zender, mentions in his tweet.

So, how do you pick the right serverless provider? Which would be the right fit for your requirements? In this article, you’ll find information that has been carefully gathered to empower you to select a serverless model that matches your specific requirements.

Current version: 2020

Each of these serverless platforms was introduced at a different time. AWS Lambda was announced in 2014 and has been publicly available since 2015, Azure Functions since 2016, and Google Cloud Functions became generally available later, in 2018. Given this head start, AWS Lambda holds an advantage over the other platforms: it offers scalability and fully automated administration, with concurrency controls and event source mapping.

Over the years, however, all three platforms have evolved considerably. AWS Lambda offers some of the best hands-on development possibilities for the backend, Azure's built-in AI capabilities open up futuristic opportunities, and Google Cloud Functions handles dependencies and scaling in novel ways. The list goes on. Choosing among them means weighing each platform against your prerequisites and evaluation criteria. Let us discuss some of them.

Language Support & Deployment

AWS Lambda natively supports Java, Go, PowerShell, Node.js, C#, Python, and Ruby. Its Runtime API also lets you build custom runtimes, so functions can be written in practically any programming language; a custom runtime can be shipped with your code, as a layer, or inside a container image. Deployment is straightforward: you package the function code and its dependencies as a .zip archive. Larger packages can be uploaded through Amazon Simple Storage Service (Amazon S3), and libraries other than the AWS SDK (which some runtimes already include) must be bundled into the deployment package or a layer.
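
To make the packaging step concrete, here is a minimal sketch that builds a deployment .zip in memory with Python's standard `zipfile` module. The file name `lambda_function.py` and the handler name `lambda_handler` follow the defaults the Lambda console uses for Python; the `vendored/helpers.py` dependency is a hypothetical placeholder. In practice you would typically let the AWS CLI, AWS SAM, or another framework produce the archive.

```python
import io
import zipfile

# Hypothetical handler source; Lambda's Python runtime would import this
# module and call lambda_handler(event, context).
HANDLER_SOURCE = """\
def lambda_handler(event, context):
    return {"ok": True}
"""


def build_deployment_package(files: dict[str, str]) -> bytes:
    """Return the bytes of a .zip archive containing the given files.

    Keys are paths relative to the package root; values are file contents.
    """
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        for path, content in files.items():
            archive.writestr(path, content)
    return buffer.getvalue()


package = build_deployment_package({
    "lambda_function.py": HANDLER_SOURCE,
    # Hypothetical third-party code bundled next to the handler.
    "vendored/helpers.py": "def greet(name):\n    return f'hello {name}'\n",
})

with zipfile.ZipFile(io.BytesIO(package)) as archive:
    print(sorted(archive.namelist()))
```

The handler module sits at the root of the archive so the runtime can import it by name; bundled libraries simply travel alongside it.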

Azure provides two levels of language support. Generally Available (GA) languages are fully supported and ready for production, while Preview languages are not yet commercially released but are expected to reach GA in the future.

Azure Functions supports C#, JavaScript (Node.js), Python, PowerShell, F#, Java 8 and 11, and TypeScript, depending on the runtime version (1.x, 2.x, or 3.x). Like Lambda, it supports direct deployment from .zip packages, and it also offers deployment via FTP, cloud sync, local Git, JSON (Azure Resource Manager) templates, and continuous deployment, which can pick up the latest commits from sources such as Azure Repos.

Google Cloud Functions lets you write functions in Node.js, Go, Java, Python, and .NET languages such as C#, F#, and Visual Basic. As in AWS Lambda, the runtime plays a vital role in Cloud Functions: the execution environment varies with the runtime you choose. Functions can be deployed from a local machine, from Cloud Source Repositories, through the Cloud Console, from source control, or via the API.
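
The runtime also shapes the function signature. As a rough comparison, Lambda's Python runtime calls a handler with an event and a context object, whereas a Cloud Functions HTTP function in Python receives a single Flask request object. The sketch below shows both side by side; the function names are hypothetical, and `FakeRequest` is a stand-in for `flask.Request` so the example runs without Flask installed.

```python
import json


def lambda_handler(event, context):
    """AWS Lambda style: the runtime passes an event dict and a context object."""
    name = event.get("name", "world")
    return {"message": f"hello {name}"}


def hello_http(request):
    """Cloud Functions HTTP style: the runtime passes a Flask request object.

    Only get_json(silent=True) and args are used, both of which flask.Request provides.
    """
    payload = request.get_json(silent=True) or {}
    name = payload.get("name") or request.args.get("name", "world")
    return json.dumps({"message": f"hello {name}"})


class FakeRequest:
    """Minimal stand-in for flask.Request, for local experimentation only."""

    def __init__(self, json_body=None, args=None):
        self._json_body = json_body
        self.args = args or {}

    def get_json(self, silent=False):
        return self._json_body


print(lambda_handler({"name": "lambda"}, None))
print(hello_http(FakeRequest(args={"name": "gcf"})))
```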

Key takeaway:

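AWS Lambda and Google Cloud Functions support multiple languages through their respective runtimes, and Lambda's Runtime API lets you bring practically any language. Azure, on the other hand, distinguishes between production-ready (GA) and preview languages and offers the broadest set of deployment options.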

Dependencies Management

In AWS Lambda, dependencies are bundled in the function's deployment package, and environment variables are set in the function's configuration. Lambda's execution environment already includes some libraries, such as the AWS SDK for runtimes like Node.js and Python, so you don't have to package them yourself. Organizing your code well and using dependency injection with Inversion of Control (IoC) frameworks such as Dagger, Guice, or Spring reduces the complexity of managing dependencies.
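
Dagger, Guice, and Spring are Java frameworks, but the underlying idea can be sketched in plain Python without any framework. In the minimal example below, the handler receives its hypothetical `UserRepository` dependency from a factory instead of constructing clients itself, which keeps the function easy to test and lets the wiring run once per instance rather than on every invocation.

```python
from typing import Callable, Protocol


class UserRepository(Protocol):
    """Hypothetical dependency: anything that can look up a user's name."""

    def get_name(self, user_id: str) -> str: ...


def make_handler(repo: UserRepository) -> Callable[[dict, object], dict]:
    """Return a Lambda-style handler that uses the injected repository."""

    def handler(event: dict, context: object) -> dict:
        user_id = event["user_id"]
        return {"user_id": user_id, "name": repo.get_name(user_id)}

    return handler


class InMemoryUserRepository:
    """Fake implementation for local tests; production code would pass a real one."""

    def __init__(self, names: dict[str, str]):
        self._names = names

    def get_name(self, user_id: str) -> str:
        return self._names.get(user_id, "unknown")


# Module-level wiring runs once per function instance, so warm invocations
# reuse the same dependency objects instead of rebuilding them.
lambda_handler = make_handler(InMemoryUserRepository({"42": "Ada"}))

print(lambda_handler({"user_id": "42"}, None))
```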

Azure approaches dependencies from the monitoring side. Its extensible Application Performance Management (APM) service, Application Insights, measures and tracks a function's dependencies, that is, the calls it makes to other services. The Application Insights SDK automatically tracks dependency calls over HTTP/HTTPS, WCF, SQL, Cosmos DB, and many more by default.

In Google Cloud Functions, how you declare, monitor, and access dependencies depends on the language and runtime you choose. For Go functions, for example, you declare dependencies with Go modules, and Cloud Functions fetches them for you. Although Go modules were introduced as an experimental feature, they provide what is needed to fetch and manage dependencies automatically.

Key takeaway:

Each platform handles dependencies differently, and how simple or complex that is depends on your project's requirements. On all three, extensive documentation and large communities make dependency management easier, which can noticeably reduce development costs.

Persistent Storage

The essence of serverless functions lies in their statelessness. An ideal function is written in a stateless style, with no affinity to the underlying compute infrastructure. This is essential because it lets the platform launch as many function instances as needed to keep up with the incoming rate of events and requests.
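
A small simulation helps show why state must live outside the function. The sketch below models two instances of the same function that the platform has scaled out; `KeyValueStore` is a hypothetical stand-in for an external database such as DynamoDB, and `FunctionInstance` only mimics how each instance keeps its own private memory.

```python
class KeyValueStore:
    """Hypothetical external store standing in for a database such as DynamoDB."""

    def __init__(self):
        self._data: dict[str, int] = {}

    def increment(self, key: str) -> int:
        self._data[key] = self._data.get(key, 0) + 1
        return self._data[key]


class FunctionInstance:
    """Simulates one function instance with its own private memory."""

    def __init__(self, store: KeyValueStore):
        self.local_count = 0  # in-memory state: lost when the instance is recycled
        self.store = store    # durable, shared state lives outside the instance

    def handle(self, event: dict) -> dict:
        self.local_count += 1
        shared = self.store.increment(event["page"])
        return {"local": self.local_count, "shared": shared}


store = KeyValueStore()
# The platform has scaled out to two instances of the same function.
a, b = FunctionInstance(store), FunctionInstance(store)
a.handle({"page": "home"})
result = b.handle({"page": "home"})
print(result)  # instance b has seen 1 request, but the page has 2 visits in total
```

Each instance sees only its own in-memory counter, so only the externally stored count stays correct once the platform scales out or recycles instances.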

AWS Lambda sets quotas on compute and storage resources. These quotas apply to layers, deployment packages, container images, executions, and test events, among others. By default, an account gets 1,000 concurrent executions and 75 GB of storage for functions and layers. Despite these limits, the storage quota can be raised to terabytes by requesting an increase through the AWS console. For persistent data, Lambda functions typically rely on services such as Amazon S3, Amazon EFS, and Amazon DynamoDB.

Handling storage with Azure can feel a little overwhelming for advanced use cases, but Microsoft has upgraded its storage services over time. Its Data Box solution lets you transfer about 40 TB to 500 TB of data offline into the cloud. Azure Blob Storage (object storage) and Azure NetApp Files (file storage) round out Microsoft's storage management options.

Google Cloud Functions offers straightforward control over storage through Google's in-house Cloud Storage and also lets you use Firebase.

Key takeaway:

Though AWS Lambda's storage quota might seem restrictive, it can be raised, and AWS pairs it with highly available storage services at a range of price points. Overall, all three platforms provide in-house storage options, and Cloud Functions additionally offers external options such as Firebase as needed.

Identity & Access Management

Identity and Access Management (IAM) for serverless resources is a critical concern for any development team. An IAM system adds an authorization layer that gives you fine-grained control over access to your functions: for example, what users can do with a resource (read-only or write access) and which resources they can reach (a specific function or the whole project).

AWS Lambda lets you create your own custom IAM policies and assign roles with specific permissions to users and functions. Lambda has three important AWS managed policies: full access, read-only access, and role. However, compared with other AWS compute services that work with IAM, Lambda offers only partial support for service-linked roles and does not support authorization based on tags.
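
A least-privilege policy is the usual way to apply this in practice. Below, an identity policy in AWS's JSON policy format is built as a Python dictionary and allows only `lambda:InvokeFunction` on a single function; the account ID, region, and function name in the ARN are hypothetical. The `is_allowed` helper is a deliberately simplified illustration of allow/deny logic, not AWS's actual policy evaluation, which also considers conditions, resource-based policies, permission boundaries, and more.

```python
import json
from fnmatch import fnmatchcase

# Hypothetical account ID, region and function name.
FUNCTION_ARN = "arn:aws:lambda:us-east-1:123456789012:function:orders-api"

# Least-privilege identity policy: the holder may invoke one function and nothing else.
invoke_only_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": "lambda:InvokeFunction",
            "Resource": FUNCTION_ARN,
        }
    ],
}


def _as_list(value):
    return value if isinstance(value, list) else [value]


def is_allowed(policy: dict, action: str, resource: str) -> bool:
    """Simplified check: an explicit Deny wins, then Allow, otherwise implicit deny.

    Ignores conditions, principals, NotAction/NotResource and everything else real IAM evaluates.
    """
    def matches(statement: dict) -> bool:
        return (any(fnmatchcase(action, a) for a in _as_list(statement["Action"]))
                and any(fnmatchcase(resource, r) for r in _as_list(statement["Resource"])))

    statements = policy["Statement"]
    if any(s["Effect"] == "Deny" and matches(s) for s in statements):
        return False
    return any(s["Effect"] == "Allow" and matches(s) for s in statements)


print(json.dumps(invoke_only_policy, indent=2))
print(is_allowed(invoke_only_policy, "lambda:InvokeFunction", FUNCTION_ARN))
print(is_allowed(invoke_only_policy, "lambda:DeleteFunction", FUNCTION_ARN))
```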

With Azure, you control access to your functions through role-based access control (RBAC). At the moment, Azure provides three essential identity services: IAM for cloud and hybrid environments, consumer identity and access management, and IAM for virtual machines.

Google Cloud Functions provides enterprise-grade access control. It uses Google Cloud's resources to create a unified security policy that matches your requirements and compliance processes. It offers granular IAM through context-aware access, which evaluates attributes such as identity and IP address for every resource request and continuously takes security status into account.

Key takeaway:

All three platforms take IAM very seriously, and none of them stands out as a security risk. Cloud Functions currently goes a step further by streamlining compliance with built-in audit trails that increase control and visibility.


Triggers & Types

Event sources (triggers) are the events that invoke a serverless function. AWS Lambda can be invoked over HTTPS by defining a REST API and an endpoint in Amazon API Gateway. Beyond that, many other AWS services can be configured as event sources. Lambda functions can also be invoked directly through any of the AWS SDKs, provided the caller has the necessary permission.
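
For the API Gateway case, a minimal sketch of a Python handler behind a REST API using Lambda proxy integration might look like this. With proxy integration, API Gateway passes the HTTP request to the function as the event and expects a response object with `statusCode`, optional `headers`, and a string `body`. The sample event is trimmed down to the fields the sketch reads, and the greeting logic is purely illustrative.

```python
import json


def lambda_handler(event, context):
    """Handle an API Gateway (REST API) Lambda proxy integration request."""
    # queryStringParameters is None when the request has no query string.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"hello {name}"}),  # body must be a string
    }


# Trimmed-down sample event; real proxy events carry many more fields.
sample_event = {
    "httpMethod": "GET",
    "path": "/hello",
    "queryStringParameters": {"name": "Lambda"},
    "body": None,
}
print(lambda_handler(sample_event, None))
```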

Azure Functions can be triggered by queues, timers, Event Grid events, or HTTP requests. Triggers are closely related to bindings: a binding declaratively connects a function to another resource, such as a queue or a database, as an input or output, so the function can read or write data without writing the connection code itself. The triggers and bindings available depend on the runtime version you choose. Cosmos DB, Queue Storage, Service Bus, and Table Storage are some of the services it integrates with.

Google Cloud Functions handles triggers at two levels: HTTP functions and background functions. Cloud Storage, Cloud Pub/Sub, Stackdriver Logging, and Firebase (Database, Storage, Analytics, Auth) are some of the event sources it supports.
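
A background function receives event data instead of an HTTP request. As a sketch, assuming the first-generation Python signature `(event, context)` and a Cloud Storage trigger, a function that reacts to newly uploaded objects could look like the following. The function, bucket, and object names are hypothetical, and `SimpleNamespace` stands in for the context object the runtime would pass.

```python
from types import SimpleNamespace


def on_object_finalized(event, context):
    """Cloud Storage-triggered background function (first-generation Python signature).

    `event` is a dict describing the Cloud Storage object; `context` carries
    metadata such as event_id and event_type.
    """
    message = f"Processing gs://{event['bucket']}/{event['name']} (event {context.event_id})"
    print(message)  # printed output ends up in the function's logs
    return message  # returned only to make local testing easy


# Hypothetical sample event and context for local testing.
sample_event = {"bucket": "my-upload-bucket", "name": "invoices/2020-11.csv"}
sample_context = SimpleNamespace(event_id="1234567890",
                                 event_type="google.storage.object.finalize")
on_object_finalized(sample_event, sample_context)
```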

Key takeaway:

Unlike Azure Functions and Google Cloud Functions, AWS Lambda does not strictly depend on a configured event source, since functions can also be invoked directly through the AWS SDKs. All three platforms deliver high-quality triggering through their own sets of rules and functionality.

Orchestration

Applications with a serverless architecture, where functions start, execute, and finish in a matter of milliseconds, do not preserve state. Each function is completely independent, so data cannot be stored in the container: containers are torn down once the function completes its task.

To manage state and orchestrate functions in this stateless architecture, AWS offers Step Functions. It records the state of each step so that it can be used by subsequent functions or for root-cause analysis. As of November 2020, Step Functions also supports a service integration with Amazon API Gateway.
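
Step Functions workflows are written in the Amazon States Language (ASL), a JSON format. The sketch below expresses a two-step workflow as a Python dictionary with hypothetical Lambda ARNs, and adds a toy local runner to show how each step's output becomes the next step's input. The runner is purely illustrative and does not reflect how Step Functions actually executes a state machine.

```python
import json

# Hypothetical Lambda ARNs for the two steps.
VALIDATE_ARN = "arn:aws:lambda:us-east-1:123456789012:function:validate-order"
CHARGE_ARN = "arn:aws:lambda:us-east-1:123456789012:function:charge-customer"

# ASL definition: ValidateOrder runs first, then ChargeCustomer ends the workflow.
definition = {
    "Comment": "Validate an order, then charge the customer",
    "StartAt": "ValidateOrder",
    "States": {
        "ValidateOrder": {"Type": "Task", "Resource": VALIDATE_ARN, "Next": "ChargeCustomer"},
        "ChargeCustomer": {"Type": "Task", "Resource": CHARGE_ARN, "End": True},
    },
}
asl_json = json.dumps(definition, indent=2)  # the JSON you would submit as the definition


def run_locally(definition: dict, handlers: dict, state_input: dict) -> dict:
    """Toy interpreter for chains of Task states (no Choice, Parallel, retries, etc.)."""
    current = definition["StartAt"]
    data = state_input
    while True:
        state = definition["States"][current]
        data = handlers[state["Resource"]](data)  # this step's output feeds the next step
        if state.get("End"):
            return data
        current = state["Next"]


# Local stand-ins for the two Lambda functions.
handlers = {
    VALIDATE_ARN: lambda order: {**order, "valid": order["amount"] > 0},
    CHARGE_ARN: lambda order: {**order, "charged": order["valid"]},
}

result = run_locally(definition, handlers, {"order_id": "A1", "amount": 25})
print(result)
```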

With Azure, you orchestrate and automate tasks using Azure Logic Apps and Durable Functions, which makes it easy to integrate two or more cloud services.

Google Cloud Functions, at present, integrates with Cloud Composer, Google's managed Apache Airflow service, where workflows are defined as directed acyclic graphs (DAGs). New users also get $300 worth of free credits that can be spent on Cloud Composer or any other Google Cloud product.
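
The DAG idea behind Cloud Composer can be illustrated without Airflow itself. The sketch below uses Python's standard `graphlib` module (Python 3.9+) to work out which tasks of a hypothetical pipeline can run in parallel once their upstream tasks finish; in Cloud Composer you would declare the same dependencies with Airflow operators instead.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
pipeline = {
    "extract": set(),
    "transform": {"extract"},
    "invoke_cloud_function": {"transform"},
    "notify_team": {"transform"},
    "publish_report": {"invoke_cloud_function", "notify_team"},
}

sorter = TopologicalSorter(pipeline)
sorter.prepare()  # raises graphlib.CycleError if the graph is not acyclic
batches = []
while sorter.is_active():
    ready = sorted(sorter.get_ready())  # tasks whose dependencies have all finished
    batches.append(ready)
    sorter.done(*ready)  # mark them finished so their dependents become ready

for step, tasks in enumerate(batches, start=1):
    print(step, tasks)
```

Tasks in the same batch have no dependencies on each other, so an orchestrator is free to run them concurrently.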

Key takeaway:

AWS Lambda supports responsive serverless applications and microservices through Step Functions-based orchestration. Azure and Cloud Functions offer their own orchestration approaches as well, so orchestration does not become a performance bottleneck on any of the three.

Concurrency & Execution Time

Concurrency is the number of executions running in parallel at any given time. You can estimate the concurrent execution count, but it varies with the type of event source you use. Functions also scale automatically with the incoming request rate, but not every resource in your application's architecture can scale the same way, so concurrency also depends on downstream resources.

Currently, AWS Lambda limits total concurrent executions across all functions in an account to 1,000 per region by default. You can control concurrency at two levels: for an individual function or for the whole account. The maximum execution timeout is 900 seconds (15 minutes).
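
A common way to estimate concurrency, described in AWS's own scaling documentation, is average requests per second multiplied by average execution duration in seconds (an application of Little's law). The sketch below checks that estimate against the 1,000-execution default; the traffic figures are made up for illustration, and real traffic with bursts needs headroom above the average.

```python
ACCOUNT_CONCURRENCY_LIMIT = 1_000  # default Lambda limit per account and region
MAX_TIMEOUT_SECONDS = 900          # 15 minutes


def estimated_concurrency(requests_per_second: float, avg_duration_seconds: float) -> float:
    """Average concurrent executions ~= arrival rate x average duration (Little's law)."""
    if avg_duration_seconds > MAX_TIMEOUT_SECONDS:
        raise ValueError("duration exceeds Lambda's 900-second timeout")
    return requests_per_second * avg_duration_seconds


for rps, duration in [(100, 0.5), (400, 3.0)]:
    c = estimated_concurrency(rps, duration)
    print(f"{rps} req/s x {duration}s -> ~{c:.0f} concurrent, "
          f"within default limit: {c <= ACCOUNT_CONCURRENCY_LIMIT}")
```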

At present, Azure can run multiple functions concurrently, provided the operations take place within a single data partition. The number of concurrent activity executions is capped at 10 times the number of cores on the VM. On the Consumption plan, the maximum execution time is 600 seconds (10 minutes).

Google Cloud Functions, by default, handles only up to 80 concurrent executions. The execution timeout defaults to 60 seconds (1 minute) and can be raised to a maximum of 540 seconds (9 minutes).

Key takeaway:

Google Cloud Functions offers lower default concurrency and the shortest maximum execution time of the three, an area that still needs improvement. Lambda and Azure, on the other hand, have raised their execution time limits over the years.

Scalability & Availability

AWS Lambda scales dynamically in response to increased traffic, subject to the account-level concurrent execution limit. To handle traffic bursts, Lambda applies an initial burst limit that depends on the region where the function runs, ranging from 500 to 3,000 concurrent executions.
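
To see what the burst limit means over time, here is a rough model of the scaling behaviour AWS documented around 2020: after the initial regional burst, concurrency could grow by 500 additional instances per minute until the account limit was reached. The burst and account-limit values in the usage example are hypothetical, and AWS has since changed how this scaling works, so check the current documentation before relying on these numbers.

```python
def available_concurrency(minutes: int, burst_limit: int, account_limit: int = 1_000,
                          per_minute_increase: int = 500) -> int:
    """Concurrency reachable after `minutes` of sustained load (2020-era documented model)."""
    return min(account_limit, burst_limit + per_minute_increase * minutes)


# Hypothetical region with a 1,000 burst limit and an account limit raised to 3,000.
for minute in range(5):
    print(minute, available_concurrency(minute, burst_limit=1_000, account_limit=3_000))
```

With the default 1,000 account limit, even the largest regional burst is capped at 1,000, which is why high-traffic applications usually request a limit increase.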

Currently, Azure Functions is available in two plans. First, the Consumption plan scales your functions automatically, and a function execution times out after a configurable period of time. Second, the App Service plan runs your functions on dedicated VMs allocated to each function app, which means the function host is always up and running.

With Google Cloud Functions, scaling is automatic. However, background functions scale gradually, at a rate that depends on their duration, and maximum scale is bounded by traffic limits.

Key takeaway:

Scaling in Google Cloud Functions slows down for heavier (longer-running) functions, whereas AWS Lambda has a limited burst quota that depends on the region. Azure's autoscaling, by contrast, can keep functions running continuously, particularly on the App Service plan.

Logging & Monitoring

AWS Lambda monitors functions by reporting metrics through Amazon CloudWatch, including the number of requests, the latency per request, and the number of requests that result in an error. It integrates automatically with Amazon CloudWatch Logs, pushing the logs from your code to a CloudWatch log group associated with the Lambda function. You can also use AWS X-Ray for end-to-end tracing of functions; it includes 100,000 free traces each month, beyond which you are charged.
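
Because Lambda sends anything a function writes to standard output to its CloudWatch Logs log group, one simple approach is to log one JSON object per line so that fields can be filtered and queried later, for example with CloudWatch Logs Insights. The sketch below assumes this structured-logging convention; the `log_json` helper and the field names are arbitrary, hypothetical choices rather than a required format.

```python
import json
import time


def log_json(message: str, **fields) -> str:
    """Print one structured log line and return it.

    In Lambda, stdout is captured and shipped to the function's CloudWatch Logs log group.
    """
    record = {"message": message, "timestamp": time.time(), **fields}
    line = json.dumps(record, sort_keys=True)
    print(line)
    return line


def lambda_handler(event, context):
    started = time.perf_counter()
    item_count = len(event.get("items", []))
    # ... real work would happen here ...
    log_json(
        "processed request",
        item_count=item_count,
        duration_ms=round((time.perf_counter() - started) * 1000, 3),
    )
    return {"item_count": item_count}


lambda_handler({"items": [1, 2, 3]}, None)
```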

Azure has a built-in integration with Azure Application Insights for monitoring functions. This integration is optional; the alternative is the built-in logging system.

Google Stackdriver is Google Cloud Platform's suite of monitoring tools that helps you understand what is going on in your Cloud Functions. It has built-in tools for logging, error reporting, and monitoring. You can also view execution times, execution counts, and memory usage in the GCP Console.

Key takeaway:

The three serverless platforms have an abundance of tools for tracing errors, coding issues, and monitoring functions. But, when juxtaposed, Lambda and Google Cloud Functions have an edge over Azure.


AWS Lambda vs Azure Functions vs Google Cloud Functions: Pricing Comparison

Generally, serverless pricing for function invocations relies on two metrics: the number of invocations and the time each invocation takes to execute. Before we get to the exact dollar amounts, let's understand the execution environment.

Cost also depends on the execution environment, that is, the hardware (memory and CPU) allocated to run a function. The more powerful the hardware, the higher the price per unit of execution time.

For example, if you're dealing with a computation-heavy function that takes a few milliseconds to execute, you might be tempted to opt for a lower memory size. While this looks economical, it is a double-edged sword: the smaller the machine, the longer the function takes to execute, and you pay for that extra time.

AWS Lambda's free tier covers the first 1M requests and a whopping 400,000 GB-seconds of compute per month. Beyond that, you pay $0.20 per 1M requests ($0.0000002 per request) and $0.00001667 for every GB-second used.
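
Plugging these rates into a small calculator shows how the free tier and the memory/duration trade-off interact. This sketch uses only the figures quoted above, assumes the billed duration equals the average duration (no rounding), and ignores other charges such as data transfer; the workloads in the usage example are hypothetical.

```python
FREE_REQUESTS = 1_000_000
FREE_GB_SECONDS = 400_000
PRICE_PER_MILLION_REQUESTS = 0.20
PRICE_PER_GB_SECOND = 0.00001667


def lambda_monthly_cost(requests: int, memory_mb: int, avg_duration_ms: float) -> float:
    """Estimated monthly Lambda bill in USD for requests plus compute (GB-seconds)."""
    gb_seconds = requests * (avg_duration_ms / 1000) * (memory_mb / 1024)
    billable_requests = max(0, requests - FREE_REQUESTS)
    billable_gb_seconds = max(0.0, gb_seconds - FREE_GB_SECONDS)
    return (billable_requests / 1_000_000 * PRICE_PER_MILLION_REQUESTS
            + billable_gb_seconds * PRICE_PER_GB_SECOND)


# 3M requests at 512 MB and 200 ms: 300,000 GB-s fits in the free tier,
# so only the 2M extra requests are billed.
print(f"${lambda_monthly_cost(3_000_000, 512, 200):.2f}")    # $0.40
# 10M requests at 1,024 MB and 500 ms: 5,000,000 GB-s, 4,600,000 of them billable.
print(f"${lambda_monthly_cost(10_000_000, 1024, 500):.2f}")  # $78.48
```

Doubling the memory while halving the duration leaves the GB-seconds, and therefore the compute cost, unchanged, which is why a larger memory size can pay for itself when it makes a function proportionally faster.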

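Azure's free offering covers, among other services, 5,000 transactions and 100,000 requests per month, along with a 64 GB SSD and 5 GB of file storage. You can opt for one free year of service or choose a plan priced between $0.02/hour and $1.41/hour. Azure also provides $200 of free credit for 30 days. For Azure Functions specifically, the Consumption plan includes a monthly free grant of 1 million requests and 400,000 GB-seconds.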

Google Cloud Functions is priced a bit differently: pricing takes into account execution time, the number of invocations, and how many resources you provision for the function. New users can access all Google Cloud Platform products with $300 of free credit for 90 days, and many products also have their own free limits. For Cloud Functions, the free tier includes the first 2M invocations per month, after which you pay $0.40 per million. Compute time is measured in two units: GB-seconds and GHz-seconds. Outbound network data is charged at a flat $0.12/GB once the initial 5 GB of free egress is used up.
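
Using only the invocation and networking rates above, a rough Cloud Functions estimate looks like this. The sketch deliberately leaves out compute time (GB-seconds and GHz-seconds), whose rates are not covered here, and the workload numbers are hypothetical.

```python
FREE_INVOCATIONS = 2_000_000
PRICE_PER_MILLION_INVOCATIONS = 0.40
FREE_EGRESS_GB = 5
PRICE_PER_EGRESS_GB = 0.12


def gcf_invocation_and_egress_cost(invocations: int, egress_gb: float) -> float:
    """Monthly USD cost of invocations and outbound data only (compute time excluded)."""
    invocation_cost = (max(0, invocations - FREE_INVOCATIONS) / 1_000_000
                       * PRICE_PER_MILLION_INVOCATIONS)
    egress_cost = max(0.0, egress_gb - FREE_EGRESS_GB) * PRICE_PER_EGRESS_GB
    return invocation_cost + egress_cost


# 5M invocations and 20 GB of egress: 3M billable invocations + 15 GB billable egress.
print(f"${gcf_invocation_and_egress_cost(5_000_000, 20):.2f}")  # $3.00
```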

Key takeaway:

AWS Lambda, Azure Functions, and Cloud Functions all provide free usage up to certain limits and then charge according to their standard pricing.


AWS Lambda vs Azure Functions vs Google Cloud Functions: Performance Comparison

A few years ago, an academic paper presented at the USENIX Annual Technical Conference studied the performance of various serverless platforms. The research was conducted with a single “measurement function” that performed two basic tasks:

- Collect invocation timing and runtime information of the function instance.

- Run specified tasks (measuring local disk I/O throughput, network throughput) based on received messages.
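
The study's own measurement function is not reproduced here, but its first task can be approximated with a common pattern: code at module level runs once when an instance starts, so a value generated there identifies the instance across warm invocations and reveals cold starts. The `measurement_handler` below is a hypothetical sketch in the Lambda-style `(event, context)` shape.

```python
import time
import uuid

# Module-level code runs once per function instance (i.e. on a cold start),
# so these values identify the instance across its warm invocations.
INSTANCE_ID = str(uuid.uuid4())
INSTANCE_STARTED_AT = time.time()
_invocations = 0


def measurement_handler(event, context):
    """Report which instance served the call and whether it was a cold start."""
    global _invocations
    _invocations += 1
    now = time.time()
    return {
        "instance_id": INSTANCE_ID,
        "cold_start": _invocations == 1,
        "invocation_number": _invocations,
        "instance_age_s": round(now - INSTANCE_STARTED_AT, 3),
        "received_at": now,
    }


first = measurement_handler({}, None)
second = measurement_handler({}, None)
print(first["cold_start"], second["cold_start"])  # True False
```

Invoking such a function repeatedly and grouping the responses by `instance_id` shows how many instances a platform launched and how long each one stayed alive.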

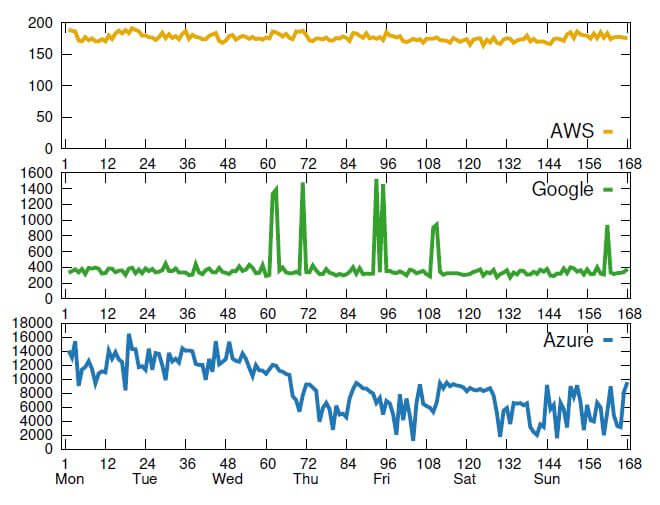

#1. Concurrency & Scalability

For AWS, 10,240 MB is the maximum aggregate memory that can be allocated across all function instances on any VM. This may suggest that AWS Lambda tries to place new function instances on existing VMs to increase memory utilization.

Although Azure Functions claims to scale to 200 instances for a single Node.js function, it fails to do so in practice. When put to the test, it could scale to only 10 concurrent function instances for a single function.

With Google Cloud Functions, only half of the expected number of instances could be launched at the same time, even at a low concurrency level. The remaining requests were queued.

#2. Cold Start

AWS Lambda showed no major difference between launching existing and new instances; in fact, the median cold-start latency difference between the two cases was only 39 ms. By contrast, Azure instances took a long time to launch even though 1.5 GB of memory was allocated to them: the median cold-start latency in Azure was 3,640 ms.

Surprisingly, with Google Cloud Functions, the median cold-start latency ranged from 110 ms to 493 ms. Like AWS, Google allocates CPU in proportion to memory, but in Google, memory size has a greater impact on cold-start latency than in AWS.

#3. Instance Maximum Idle Time

This can be loosely defined as the maximum time an instance can stay idle before it shuts down. Here are the results:

An idle instance in AWS stays active for up to 27 minutes; notably, in 80% of the cases, instances were shut down after 26 minutes. No consistent idle time was observed in Azure Functions, whereas the idle time in Google Cloud Functions was as long as 120 minutes. Even after 120 minutes, the instances remained active in 18% of the cases.

However, your own results may differ once you test against your specific needs. The platform's internal security practices also play a major role.

Recently, we came across an account of a company that had to move a large SaaS application from AWS to Azure. Its tech stack was 80% Linux/OSS and 20% .NET.

The organization switched to Azure because of its strengths in security compliance and data sovereignty. After the switch, they found that Azure was stronger in support and in giving customers access to Azure product teams to help guide the platform.

Recent reports make it evident that Azure is keeping pace in the serverless landscape, and it cannot be ignored.

Conclusion

Gartner estimates that between 2020 and 2025, 20% of organizations globally will migrate to serverless computing.

Arun Chandrasekaran, Distinguished VP Analyst at Gartner, says, “Serverless, specifically, is commonly applied in use cases pertaining to cloud operations, microservices implementations and IoT platforms.” He further adds, “Each of these virtualization technologies will be relevant for CIOs in the foreseeable future.”

AWS Lambda's two-year head start (it launched in 2014), quick rollout of new features, and strong performance are the major factors behind its pole position. However, today all three serverless platforms hold roughly equal standing. The best choice for your project depends on factors such as your budget, the flexibility you need, and the development time you have.

If you are diving into serverless with no platform preference, we would encourage you to explore each of these services. Understand the use cases and why and when serverless can help you.