Amazon DynamoDB is a fully managed NoSQL database service from AWS that supports a wide range of business-critical workloads. A growing number of businesses use it, including Zoom, Netflix, Disney+, Dropbox, Nike, and Capital One.

DynamoDB is purpose-built for modern applications that need high performance at any scale. But as a fully managed service, it comes at a premium. And as your application grows and scales, you want to focus on innovation, not on paying ever-increasing bills.

With Amazon DynamoDB, you can design for cost efficiency from the start. But what about optimizing existing workloads for cost? This blog post discusses effective techniques to optimize your DynamoDB usage and reduce costs. Let’s start with an overview of DynamoDB pricing.

How DynamoDB pricing works

Amazon DynamoDB primarily charges you for the read and write operations on your tables and for the data you store. It also charges for additional features and usage you opt into, such as data transfer, backup and restore, Global Tables, and Streams.

DynamoDB has two capacity modes with specific billing options for processing reads and writes on your tables.

On-demand capacity mode

- It charges you only for the number of data reads and writes your application performs on tables.

- DynamoDB automatically accommodates workloads as traffic increases or decreases.

- It requires no capacity planning, provisioning, or reservations.

- It largely removes the trade-offs between throttling, overprovisioning, and underprovisioning.

On-demand capacity mode is ideal if you:

- Have unpredictable application traffic

- Create new tables with unknown workloads

- Prefer the simplicity of a pay-per-use model

Provisioned capacity mode

- It requires you to specify the number of reads and writes per second you expect your application to need.

- It allows you to set read and write levels separately.

- You can also use Auto Scaling to adjust capacity based on a target utilization rate for further cost optimization.

- Throttling is possible if traffic spikes suddenly or if you have not accurately provisioned your tables.

- You pay for provisioned capacity whether or not you use it.

Provisioned capacity mode is ideal if you:

- Have predictable or consistent application traffic

- Run applications whose traffic ramps gradually

- Can forecast and plan capacity requirements for cost control

AWS also offers a free tier for DynamoDB, enabling you to gain free hands-on experience with the service. For detailed information and cost breakdown, you can refer to the official DynamoDB pricing page.

Determining which capacity mode best suits your business and application needs is the first step in optimizing your DynamoDB costs.

The section below discusses cost optimization techniques to help keep your DynamoDB bills and usage in check.

8 ways to optimize DynamoDB usage and reduce costs

1. Use on-demand capacity mode wisely

On-demand mode instantly adapts capacity to the changing requirements of your workloads, and it can scale to zero for intermittent workloads. That makes it a good choice for new workloads while you identify their traffic patterns. You can track the capacity they actually consume with Amazon CloudWatch metrics.

Once you have evaluated the capacity usage and traffic patterns, you can switch to provisioned mode as it is priced lower than on-demand mode for the equivalent capacity.

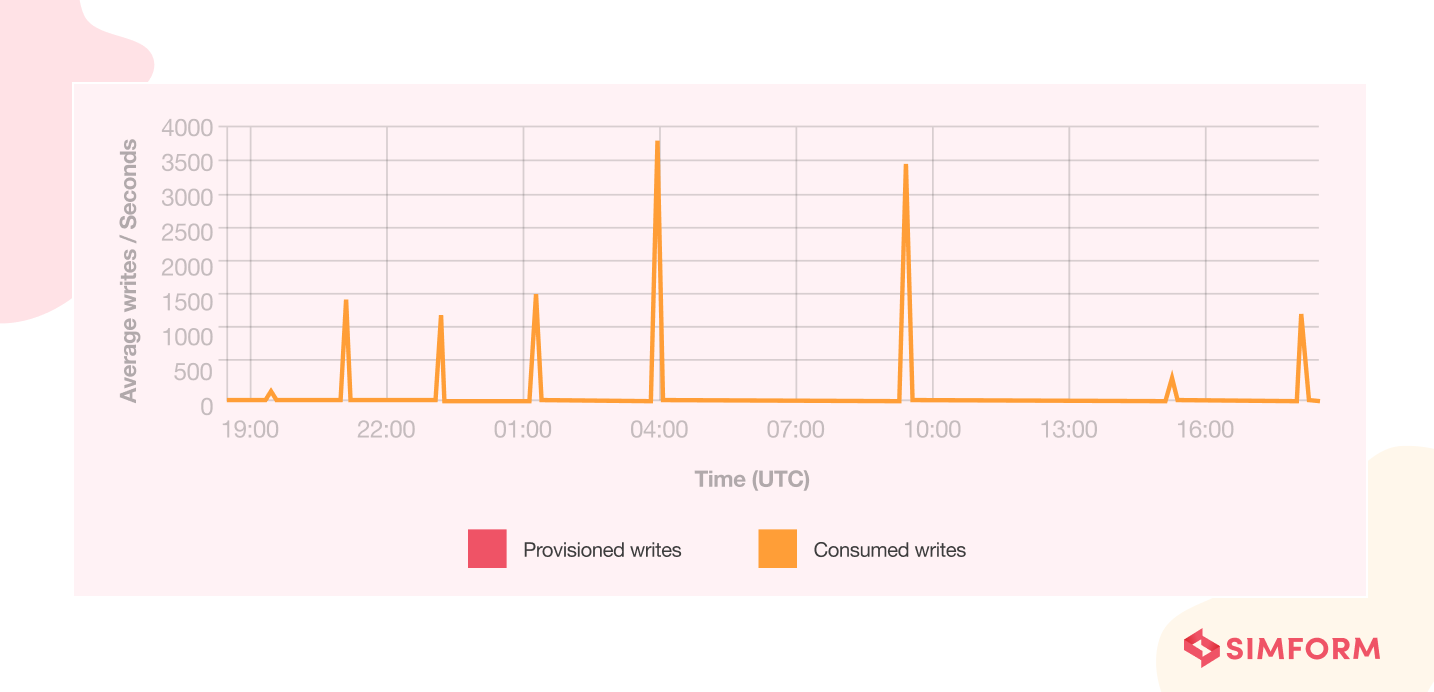

If your traffic patterns are unpredictable, with spikes in demand, keep using on-demand mode. A great candidate for on-demand capacity is shown below; using provisioned mode for it would overprovision by a large margin.

You can also use on-demand mode to safely reduce the cost of unused tables. Because these tables have no I/O activity, switching them to on-demand mode stops you paying for idle provisioned capacity without adding request charges. And unlike deleting a table, it carries no risk of irreversible disruption to applications that may still depend on it.
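To make the on-demand versus provisioned trade-off concrete, here is a minimal Python sketch that prices one day of write traffic both ways. The two prices are hypothetical placeholders, not real AWS rates (take current figures for your Region from the DynamoDB pricing page). It also assumes items of 1 KB or less, so each write consumes exactly one write unit, and provisioned capacity fixed at the daily peak with no auto scaling.

```python
import math

# Hypothetical placeholder prices for illustration only; NOT real AWS rates.
ON_DEMAND_PRICE_PER_MILLION_WRITES = 1.00
PROVISIONED_PRICE_PER_WCU_HOUR = 0.0005


def on_demand_cost(writes_per_second_by_hour: list[float]) -> float:
    """On-demand: pay for every write performed (assumes 1 write = 1 write unit)."""
    total_writes = sum(rate * 3600 for rate in writes_per_second_by_hour)
    return total_writes / 1_000_000 * ON_DEMAND_PRICE_PER_MILLION_WRITES


def provisioned_cost(writes_per_second_by_hour: list[float]) -> float:
    """Provisioned without auto scaling: size for the peak and pay for it every hour."""
    wcu = math.ceil(max(writes_per_second_by_hour))
    return wcu * len(writes_per_second_by_hour) * PROVISIONED_PRICE_PER_WCU_HOUR


steady = [100.0] * 24            # constant 100 writes/s all day
spiky = [1000.0] + [1.0] * 23    # one busy hour, then near-idle

for name, traffic in (("steady", steady), ("spiky", spiky)):
    print(f"{name}: on-demand ${on_demand_cost(traffic):.2f}, "
          f"provisioned ${provisioned_cost(traffic):.2f}")
```

With these placeholder prices, the steady pattern costs less on provisioned capacity, while the spiky one costs less on on-demand, because provisioning for a one-hour peak means paying for it all day.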

2. Use Auto Scaling for your provisioned capacity tables

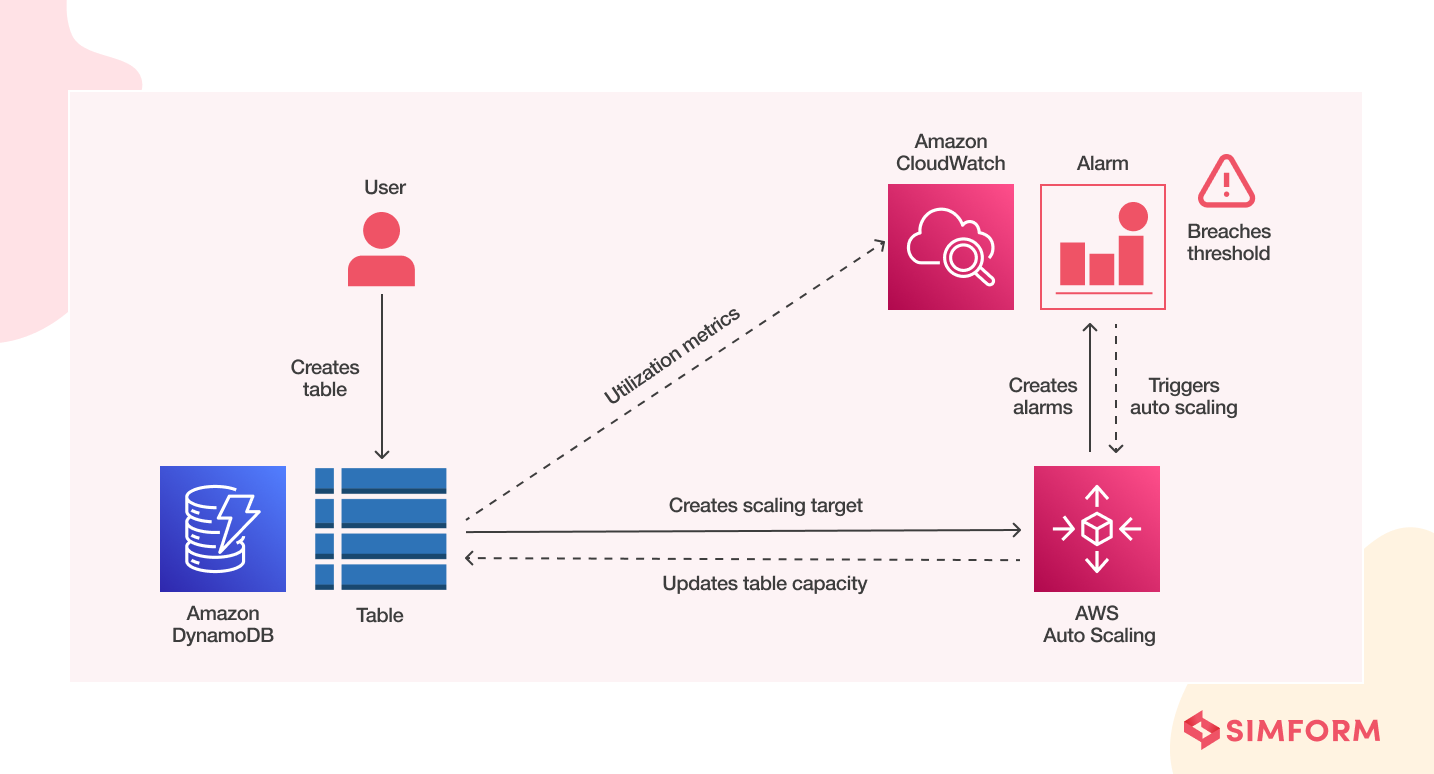

You can further optimize capacity usage in provisioned mode with DynamoDB Auto Scaling. It automatically adjusts provisioned capacity based on your table’s actual usage, so you don’t have to manage capacity manually as your workloads change. It also avoids unnecessary costs by keeping your tables from being overprovisioned.

You can enable Auto Scaling for DynamoDB using the AWS Management Console, AWS CLI, or AWS SDKs. The graph below shows an ideal situation for provisioned capacity mode.

The image below shows how Auto Scaling creates CloudWatch alarms and manages throughput capacity. You set a target utilization along with minimum and maximum capacity, and Auto Scaling tries to follow the curve of your traffic, helping you find a balance between throttling and cost efficiency. Read more about AWS Auto Scaling here.

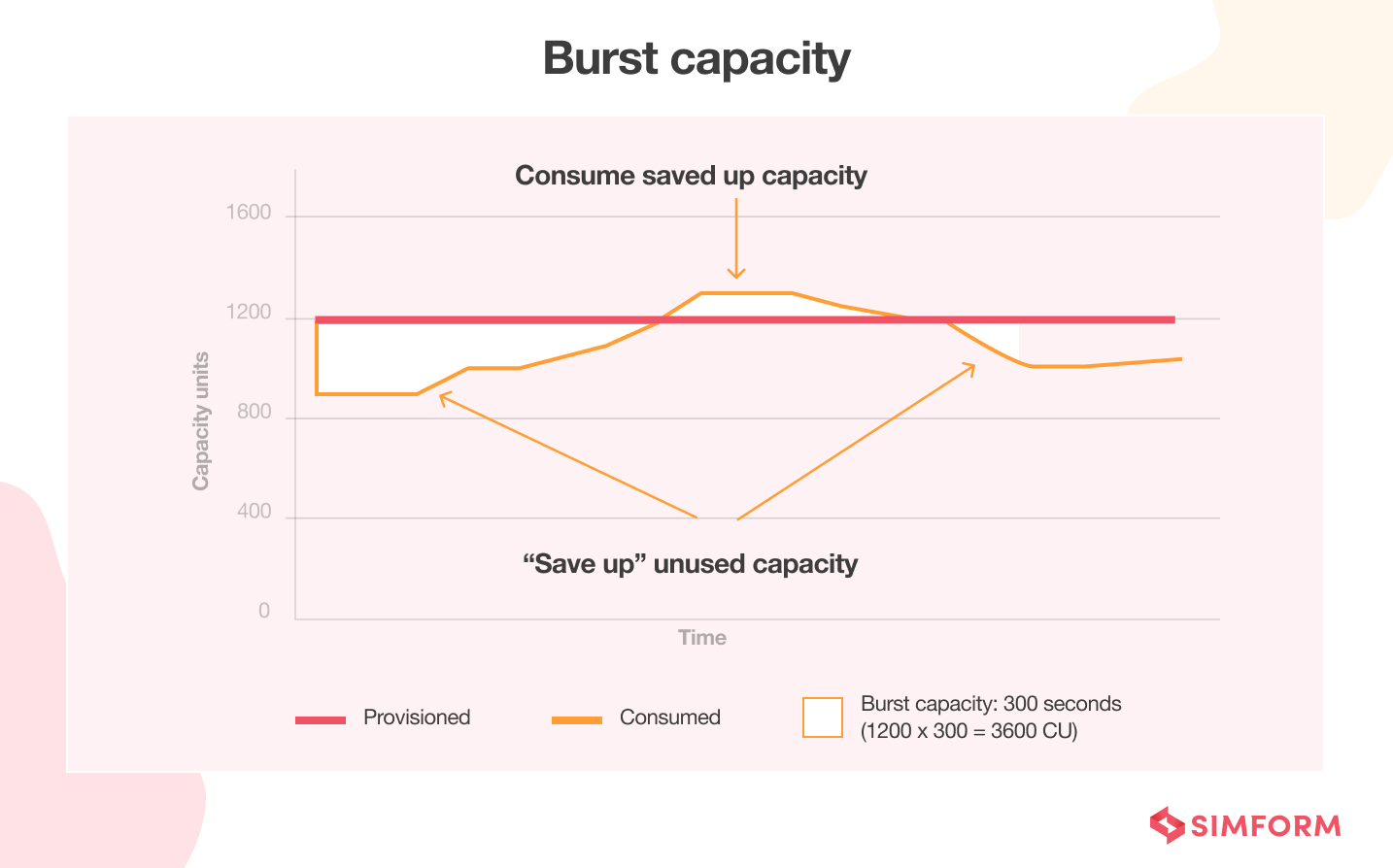
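The core idea behind target tracking can be sketched in a few lines of Python. This is a simplified model, not the actual Application Auto Scaling algorithm (which evaluates CloudWatch alarms over multiple data points before acting): it picks the capacity that would bring utilization back to the target, then clamps it between the configured minimum and maximum. The function name and parameters are hypothetical.

```python
import math


def target_tracking_capacity(consumed_units_per_second: float,
                             target_utilization_percent: float,
                             min_units: int,
                             max_units: int) -> int:
    """Simplified target tracking: size capacity so that consumed / provisioned
    equals the target utilization, then clamp to the configured bounds."""
    desired = math.ceil(consumed_units_per_second * 100 / target_utilization_percent)
    return max(min_units, min(max_units, desired))


# 70% target utilization, bounded between 20 and 1,000 units.
for consumed in (10, 70, 400, 900):
    print(consumed, "->", target_tracking_capacity(consumed, 70, 20, 1000))
```

In practice, you don’t compute capacity yourself: you set the target and bounds in the console or through Application Auto Scaling (for example, boto3’s `register_scalable_target` and `put_scaling_policy` calls with the `DynamoDBReadCapacityUtilization` or `DynamoDBWriteCapacityUtilization` predefined metrics).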

Also keep in mind DynamoDB’s built-in burst capacity, because sudden spikes do happen. DynamoDB retains up to five minutes (300 seconds) of unused read and write capacity. If you have consumed less than your provisioned capacity, that saved capacity can absorb a short, occasional spike, and because provisioned mode bills for provisioned capacity rather than per request, the spike costs nothing extra. However, sustained traffic above your provisioned capacity will be throttled once the burst capacity is used up.
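Burst capacity behaves like a token bucket. The Python sketch below simulates it second by second under simplifying assumptions: unused capacity accrues up to 300 seconds’ worth, demand above the provisioned rate draws it down, and whatever the bucket cannot cover counts as throttled. Real burst capacity is best-effort (AWS notes that DynamoDB may also spend it on background maintenance), so treat this as a mental model rather than a guarantee. The function name is hypothetical.

```python
BURST_WINDOW_SECONDS = 300  # up to five minutes of unused capacity is retained


def simulate_burst(provisioned_per_second: int, demand_per_second: list[int]) -> list[int]:
    """Return the number of throttled units for each second of demand."""
    max_bank = provisioned_per_second * BURST_WINDOW_SECONDS
    bank = 0
    throttled = []
    for demand in demand_per_second:
        if demand <= provisioned_per_second:
            # Under the provisioned rate: save the unused capacity, up to the cap.
            bank = min(max_bank, bank + provisioned_per_second - demand)
            throttled.append(0)
        else:
            # Over the provisioned rate: cover the excess from the bank if possible.
            excess = demand - provisioned_per_second
            covered = min(bank, excess)
            bank -= covered
            throttled.append(excess - covered)
    return throttled


# 10 units/s provisioned: five idle minutes, then one minute at 110 units/s.
result = simulate_burst(10, [0] * 300 + [110] * 60)
print("throttled during first 30 s of spike:", sum(result[300:330]))
print("throttled during last 30 s of spike:", sum(result[330:]))
```

Here, the 3,000 saved units cover the first 30 seconds of the spike; after that, 100 units per second are throttled, which is the sustained-traffic case described above.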

3. Enable tagging for table-level cost analysis

AWS Cost Explorer’s default configuration shows aggregated DynamoDB costs by usage type, but not the cost of individual tables. To enable table-level cost analysis, you can tag each table eponymously (with its own name) and activate that tag as a cost allocation tag in the Billing console.

Likewise, when you create a DynamoDB table, you can add business tags, such as which part of your organization, team, service, or application the table belongs to. If your usage spikes, you can then see which tables contributed most to the costs and check whether those tables could be used differently to reduce them.
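Here is a minimal Python sketch of both ideas: building an eponymous tag plus business tags for a table, and totaling costs by tag to see which group contributed most. The tag keys (`table`, `team`, `service`) and the cost records are hypothetical examples. The tag list uses the `[{"Key": ..., "Value": ...}]` shape that AWS tagging APIs accept.

```python
from collections import defaultdict


def table_tags(table_name: str, team: str, service: str) -> list[dict[str, str]]:
    """Eponymous tag plus business tags, in the Key/Value list shape used by AWS tagging APIs."""
    return [
        {"Key": "table", "Value": table_name},  # hypothetical tag keys
        {"Key": "team", "Value": team},
        {"Key": "service", "Value": service},
    ]


def cost_by_tag(records: list[tuple[dict[str, str], float]], tag_key: str) -> dict[str, float]:
    """Sum cost per value of one tag key, largest first; untagged costs go under '(untagged)'."""
    totals: dict[str, float] = defaultdict(float)
    for tags, cost in records:
        totals[tags.get(tag_key, "(untagged)")] += cost
    return dict(sorted(totals.items(), key=lambda kv: kv[1], reverse=True))


# Hypothetical daily cost records: (tags as a dict, cost in USD).
records = [
    ({"table": "orders", "team": "checkout"}, 42.0),
    ({"table": "carts", "team": "checkout"}, 8.0),
    ({"table": "events", "team": "analytics"}, 65.0),
    ({}, 3.5),
]
print(table_tags("orders", "checkout", "order-service"))
print(cost_by_tag(records, "team"))
```

With a boto3 DynamoDB client, `client.tag_resource(ResourceArn=table_arn, Tags=table_tags(...))` would attach the tags; the grouping function only stands in for Cost Explorer’s group-by-tag view.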

For more tips on designing DynamoDB tables for better performance and cost efficiency, refer to this article on DynamoDB best practices.

4. Choose the right table class

DynamoDB offers two table classes to help you optimize costs:

- Standard table class:

It is the default table class. It is designed to balance storage and read/write costs.

- Standard-Infrequent Access table class:

It offers up to 60% lower storage costs but 25% higher read/write costs than the Standard table class.

Choose the table class that best fits your application’s balance between storage and throughput usage. Keep in mind that the table class you select can also affect other pricing dimensions, such as Global Table and GSI costs.

You can use the Table Class Evaluator Tool to choose the right table class. It identifies the tables that may benefit from the Standard-IA table class. For more information, refer to considerations when choosing a table class.
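Using the maximum differences quoted above (storage up to 60% cheaper, throughput 25% more expensive), a quick Python sketch can estimate which class is cheaper for a given cost split. Algebraically, Standard-IA comes out ahead when 0.6 × storage > 0.25 × throughput, that is, when storage costs exceed about 42% of throughput costs. Because it assumes the full discount applies, treat it as a rough screen, not a substitute for the Table Class Evaluator Tool. `STANDARD` and `STANDARD_INFREQUENT_ACCESS` are the table class values used by the DynamoDB API; the function names are hypothetical.

```python
def compare_table_classes(monthly_storage_cost: float,
                          monthly_throughput_cost: float) -> dict[str, float]:
    """Estimate monthly cost under each class from a Standard-class cost breakdown."""
    standard = monthly_storage_cost + monthly_throughput_cost
    # Assumes the full 60% storage discount and 25% throughput premium apply.
    standard_ia = monthly_storage_cost * 0.40 + monthly_throughput_cost * 1.25
    return {"STANDARD": standard, "STANDARD_INFREQUENT_ACCESS": standard_ia}


def cheaper_class(monthly_storage_cost: float, monthly_throughput_cost: float) -> str:
    costs = compare_table_classes(monthly_storage_cost, monthly_throughput_cost)
    return min(costs, key=costs.get)


print(cheaper_class(100.0, 50.0))   # storage-heavy table
print(cheaper_class(10.0, 100.0))   # throughput-heavy table
```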

5. Find unused tables or GSIs

Unused DynamoDB tables and GSIs (Global Secondary Indexes) keep generating costs, such as storage and any provisioned capacity, even when nothing uses them. To identify unused resources, you can:

- Check the CloudWatch metrics console for tables or GSIs with no consumed read/write capacity

- Regularly review your tables and GSIs to confirm they are still in active use

- Periodically scan your database for orphaned data
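Once you have pulled consumption data from CloudWatch (for example, daily `Sum` values of the `ConsumedReadCapacityUnits` and `ConsumedWriteCapacityUnits` metrics for each table and GSI over a lookback window), the classification itself is simple. The Python sketch below assumes that data is already collected into a dictionary (the resource names and numbers are hypothetical) and treats an empty list of data points as zero usage. It flags resources with no reads or writes at all, plus write-only resources, which cost money on every write but are never read. A write-only table can be legitimate (one consumed only through Streams, say), so the output is a list of candidates to review, not to delete automatically.

```python
def classify_usage(usage: dict[str, tuple[list[float], list[float]]]) -> tuple[list[str], list[str]]:
    """usage maps a table or 'table/index' name to (read data points, write data points).
    An empty data-point list is treated as zero consumption."""
    idle, write_only = [], []
    for name, (reads, writes) in sorted(usage.items()):
        total_reads, total_writes = sum(reads), sum(writes)
        if total_reads == 0 and total_writes == 0:
            idle.append(name)          # nothing reads or writes it
        elif total_reads == 0:
            write_only.append(name)    # paying for writes that nobody reads
    return idle, write_only


# Hypothetical sums of consumed capacity per resource over the lookback window.
usage = {
    "orders": ([1200.0, 950.0], [300.0, 280.0]),
    "orders/by-status-index": ([], [310.0, 290.0]),
    "legacy-audit": ([], []),
    "sessions": ([40.0], [0.0]),
}
idle, write_only = classify_usage(usage)
print("idle:", idle)
print("write-only:", write_only)
```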

Deleting unused resources can reduce costs, eliminate wastage and boost database performance.

6. Store large items efficiently

Storing large values or images can quickly drive up your DynamoDB costs. You can remedy that with the following strategies:

- Compressing large attribute values

You can use compression algorithms such as GZIP or LZO to make items smaller before saving them. This reduces storage costs and, because reads and writes are billed by item size, can also reduce the capacity consumed.

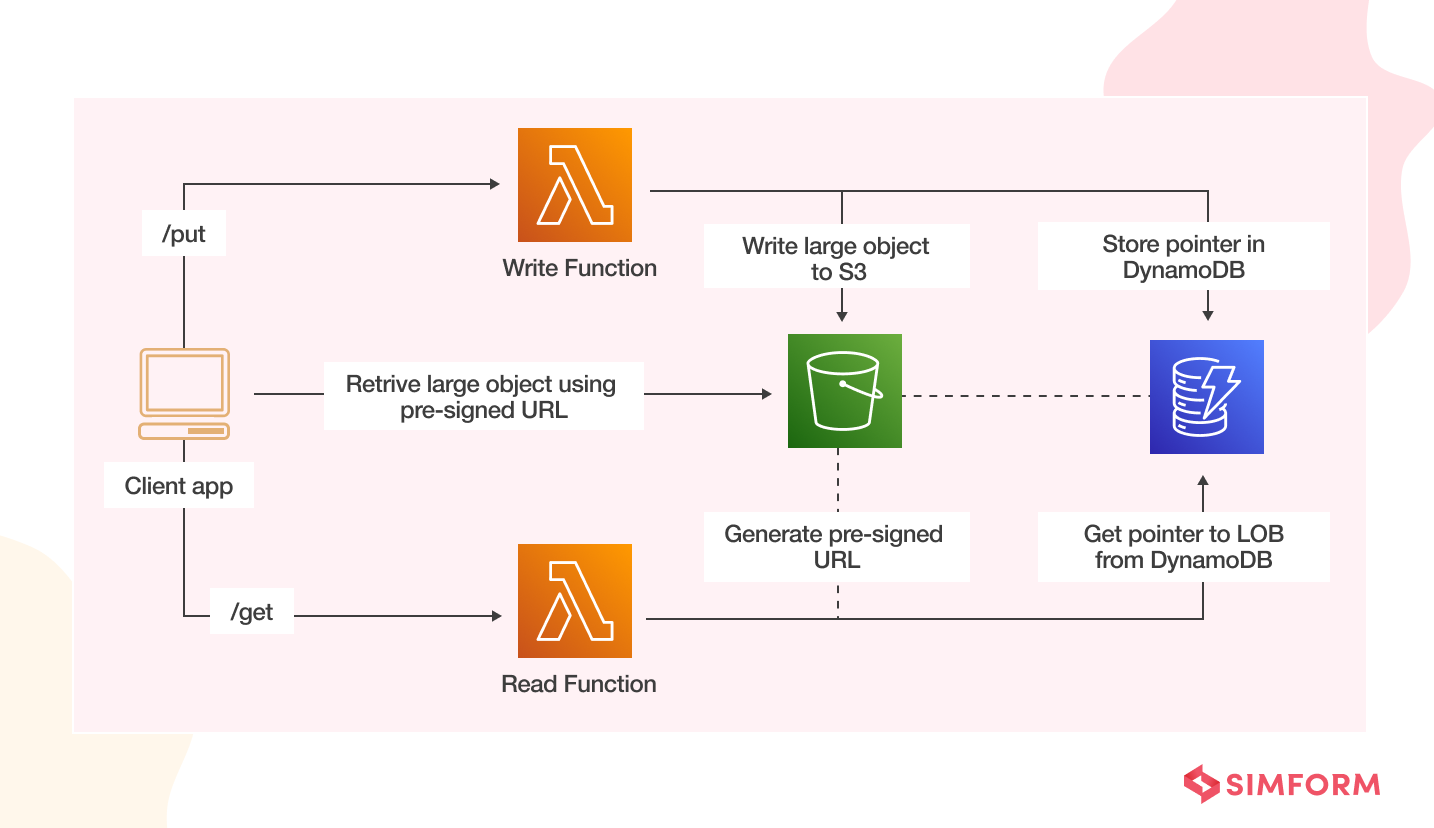

- Store large objects in Amazon S3 and keep only a pointer to them (such as the S3 object key) in the DynamoDB item
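The two strategies can be combined. The Python sketch below gzip-compresses a large text attribute (GZIP is in Python’s standard library; LZO is not) and stores the compressed bytes inline as a Binary attribute when they are small enough. Otherwise, it keeps only an S3 object key in the item and returns the bytes for you to upload. The 10 KB threshold, the attribute names, and the `large-items/` key layout are hypothetical choices; the hard limit to respect is DynamoDB’s 400 KB maximum item size. The S3 read is passed in as a function so the sketch runs without AWS credentials.

```python
import gzip
from typing import Callable, Optional

OFFLOAD_THRESHOLD_BYTES = 10 * 1024  # hypothetical cut-off, well under the 400 KB item limit


def prepare_body(item_id: str, text: str,
                 threshold: int = OFFLOAD_THRESHOLD_BYTES) -> tuple[dict, Optional[bytes]]:
    """Return (item attributes for DynamoDB, payload to upload to S3 or None)."""
    compressed = gzip.compress(text.encode("utf-8"))
    if len(compressed) <= threshold:
        # Small enough after compression: store inline as a Binary attribute.
        return {"id": item_id, "body_gz": compressed}, None
    # Still too large: keep only a pointer in DynamoDB; upload the bytes to S3.
    s3_key = f"large-items/{item_id}.txt.gz"  # hypothetical key layout
    return {"id": item_id, "body_s3_key": s3_key}, compressed


def read_body(item: dict, fetch_from_s3: Callable[[str], bytes]) -> str:
    """Reverse of prepare_body; fetch_from_s3(key) must return the stored bytes."""
    data = item["body_gz"] if "body_gz" in item else fetch_from_s3(item["body_s3_key"])
    return gzip.decompress(data).decode("utf-8")


text = "order-event:created;" * 2000  # 40,000 bytes of repetitive text
item, payload = prepare_body("o-1", text)
print(len(text), "->", len(item["body_gz"]), "bytes stored inline")
```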

7. Identify sub-optimal usage patterns

Sub-optimal usage patterns can incur unintended expenses. Evaluate how you use your tables and check for any of the following patterns:

- Using only strongly-consistent read operations

A strongly consistent read consumes 1 RCU/RRU per 4 KB of item size (rounded up), while an eventually consistent read (the default) consumes 0.5 RCU/RRU per 4 KB.

How to fix it: Talk to your developers to check if strongly-consistent reads are necessary for the workflow and use them only where your workload cannot tolerate stale data.

- Using transactions for all read operations

Transactional reads cost four times as much as eventually consistent reads (2 RCU/RRU per 4 KB). You can check your table utilization in CloudWatch to see whether every read is performed as a transaction.

How to fix it: Ensure you only perform transactions when your application requires all-or-nothing atomicity for all items written.

- Scanning tables for analytics

Data analysis via scans leads to high costs as you are charged for all data read from the table.

How to fix it: Consider using DynamoDB’s Export to S3 feature, which does not consume your table’s read capacity, and run analytics or scan operations on the exported data in S3.

- Using Global Tables only for single-Region disaster recovery

Check whether your global tables serve their intended multi-Region purpose or are used just to replicate data for disaster recovery. Global tables offer low RTO/RPO (Recovery Time Objective/Recovery Point Objective), so if your RTO/RPO requirements are more flexible, there may be cheaper alternatives.

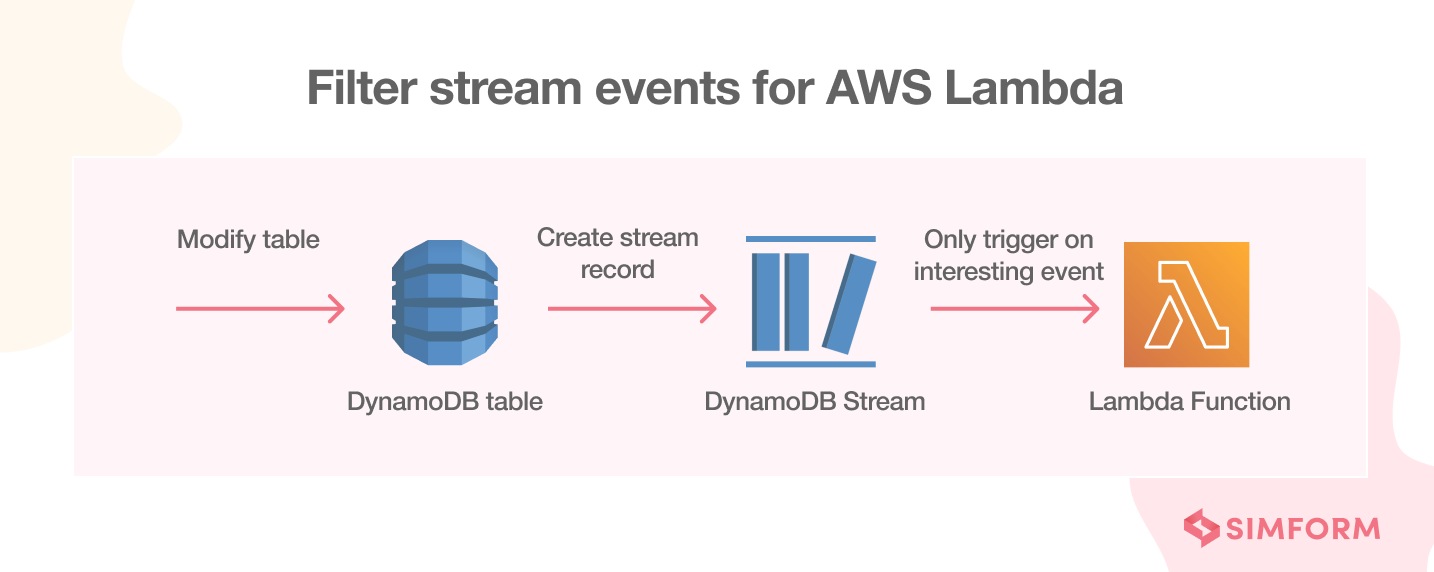
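The read-cost differences above are easy to quantify. The following Python sketch computes the read units consumed by reading a single item under each read type, using the rates given in this section: item size rounded up to the next 4 KB, then 0.5 units per 4 KB for eventually consistent reads, 1 for strongly consistent reads, and 2 for transactional reads. It covers single-item reads only; queries and scans are billed on the total size of the items they read. The function name is hypothetical.

```python
import math

# Read units per 4 KB block for each read type.
UNITS_PER_4KB = {"eventual": 0.5, "strong": 1.0, "transactional": 2.0}


def item_read_units(item_size_bytes: int, read_type: str = "eventual") -> float:
    """Read units consumed by reading one item of the given size."""
    blocks = max(1, math.ceil(item_size_bytes / 4096))  # round up to the next 4 KB
    return blocks * UNITS_PER_4KB[read_type]


# A 10 KB item occupies three 4 KB blocks.
for read_type in UNITS_PER_4KB:
    print(f"{read_type}: {item_read_units(10 * 1024, read_type)} units")
```

For a million such reads a day, that is the difference between 1.5 million read units (eventually consistent) and 6 million (transactional).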

8. Lower your stream costs

DynamoDB Streams capture item modifications as stream records. You can configure a stream as an event source to trigger AWS Lambda functions, which process the stream records to perform tasks such as aggregating data for analytics in Amazon Redshift or making your data searchable by indexing it with Amazon OpenSearch Service. Stream reads made by Lambda triggers are not charged as streams read requests, so using Lambda as the consumer helps keep stream costs down.

Find out more cost optimization tips to achieve true savings in the cloud with this detailed guide on cloud cost optimization.

6 bite-sized tips for DynamoDB cost optimization

1. Leverage Reserved capacity

If your workloads have predictable traffic patterns, you can purchase reserved capacity in advance. You pay a one-time upfront fee and commit to a discounted hourly rate for the entire term (one or three years). Reserved capacity applies to provisioned capacity mode and offers significant savings over both on-demand and standard provisioned pricing.
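Because reserved capacity combines an upfront fee with an hourly rate, it helps to compare its effective hourly rate against the standard provisioned rate. A Python sketch of that arithmetic follows. All prices are hypothetical placeholders, and it assumes you use the reserved capacity for the whole term, since you pay for it either way.

```python
HOURS_PER_YEAR = 8760


def effective_hourly_rate(upfront_fee: float, reserved_hourly_rate: float, term_years: int) -> float:
    """Spread the upfront fee across every hour of the term and add the hourly rate."""
    return upfront_fee / (HOURS_PER_YEAR * term_years) + reserved_hourly_rate


def savings_fraction(standard_hourly_rate: float, upfront_fee: float,
                     reserved_hourly_rate: float, term_years: int) -> float:
    """Fraction saved versus paying the standard provisioned rate for the same hours."""
    effective = effective_hourly_rate(upfront_fee, reserved_hourly_rate, term_years)
    return 1 - effective / standard_hourly_rate


# Hypothetical prices per block of capacity units, for illustration only.
print(f"{savings_fraction(0.40, 876.0, 0.10, 1):.0%} saved")
```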

2. Use cheaper regions

Some AWS Regions are cheaper than others, so you can opt for a cheaper Region if:

- You are not restricted to a specific region

- It does not increase read/write latency for your users

- You are not subject to regulatory or compliance requirements that restrict where your data is stored

Read more on multi-region databases and their benefits!

3. Limit the record sizes

Both capacity modes in DynamoDB bill reads and writes by item size, and you also pay for the data you store. Since attribute names count toward item size, use shorter attribute names. You can also store dates in epoch time format (as numbers) instead of as ISO 8601 strings.
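To see the effect of both tips, this Python sketch estimates the size of one date attribute stored two ways. It follows DynamoDB’s documented sizing rules as approximations: string names and values count as their UTF-8 byte length, and a number takes roughly its name length plus one byte per two significant digits plus one byte. The attribute names are hypothetical.

```python
import math
from datetime import datetime, timezone


def string_attribute_size(name: str, value: str) -> int:
    """Strings count as UTF-8 bytes, for both the attribute name and the value."""
    return len(name.encode("utf-8")) + len(value.encode("utf-8"))


def number_attribute_size(name: str, value: int) -> int:
    """Approximation: name bytes + 1 byte per two significant digits + 1 byte."""
    significant = str(abs(value)).strip("0") or "0"  # leading/trailing zeros are trimmed
    return len(name.encode("utf-8")) + math.ceil(len(significant) / 2) + 1


created = datetime(2023, 5, 1, 12, 0, 0, tzinfo=timezone.utc)
verbose = string_attribute_size("creationTimestamp", created.strftime("%Y-%m-%dT%H:%M:%SZ"))
compact = number_attribute_size("ct", int(created.timestamp()))
print(verbose, compact)
```

Here, the verbose form takes about 37 bytes and the compact one about 7. Across millions of items, and every read and write of them, the difference adds up.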

4. Consider Queries over scans

You can fetch data from DynamoDB with either a query or a scan. A scan reads every item in the table, and you are charged for all the data it reads, even items that a filter expression later discards. A query, by contrast, uses the partition key (and optionally a sort key condition) to locate only the matching items, which saves those extra costs.
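A rough Python sketch of the difference: both queries and scans are billed on the total size of the items they read, rounded up to the next 4 KB, so what matters is how many items each one touches. The sketch ignores the 1 MB page limit (each page is rounded separately) and assumes eventually consistent reads by default; the table size and item counts are hypothetical.

```python
import math


def read_units_for(item_sizes: list[int], strongly_consistent: bool = False) -> float:
    """Query/Scan billing: sum the sizes of all items read, round up to 4 KB, apply the rate."""
    blocks = math.ceil(sum(item_sizes) / 4096)
    return blocks * (1.0 if strongly_consistent else 0.5)


table = [1024] * 100_000          # 100,000 items of 1 KB each (hypothetical)
matching_partition = [1024] * 20  # the 20 items a query on one partition key returns

print("scan:", read_units_for(table))
print("query:", read_units_for(matching_partition))
```

A filter expression would not shrink the scan figure, because items are filtered only after they have been read.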

5. AWS Backup vs. DynamoDB Backup

AWS Backup offers a cold storage tier for DynamoDB backups. It can reduce your storage costs by up to 66% for long-term backup data. Plus, it offers lifecycle features and inherits table tags that can be used for further cost optimization.

6. Use TTL to remove unneeded items

Leverage DynamoDB’s Time to Live (TTL) feature to automatically delete expired or unneeded items from your tables. Regularly removing such items helps reduce your storage costs, and TTL deletes do not consume write capacity, so the feature is free to use!
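TTL reads expiry times from an attribute you choose, which must be a Number holding a Unix epoch time in seconds. The Python sketch below stamps an item with such an attribute; `expires_at` and the 30-day retention period are hypothetical choices. You enable TTL on that attribute once per table, for example with boto3’s `update_time_to_live` call. Expired items are deleted in the background rather than at the exact second of expiry, so reads may still need to filter them out.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

TTL_ATTRIBUTE = "expires_at"  # hypothetical; must match the table's TTL setting


def with_ttl(item: dict, retain_for: timedelta, now: Optional[datetime] = None) -> dict:
    """Return a copy of item with a TTL attribute (epoch seconds) set retain_for from now."""
    now = now or datetime.now(timezone.utc)
    return {**item, TTL_ATTRIBUTE: int((now + retain_for).timestamp())}


created = datetime(2023, 5, 1, 12, 0, 0, tzinfo=timezone.utc)
session = with_ttl({"session_id": "s-123"}, timedelta(days=30), now=created)
print(session)
```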

How we built an efficient database architecture to process millions of data points per day

When building FreeWire, an EV charging platform, we used several AWS services to create a microservices architecture. To handle the extremely large volumes of data, we used a mix of SQL and NoSQL databases. We hosted the SQL database on Amazon RDS to get managed database-level operations such as automated backups and replica creation, and we used DynamoDB to store and retrieve non-relational data.

Read the full case study on FreeWire

To sum up, there is no silver bullet for reducing DynamoDB costs. The key to controlling them is:

- Optimizing utilization, not just design

- Focusing on efficient operations

- Choosing the right functionalities DynamoDB has to offer and taking advantage of them

- Scaling appropriately

- Actively monitoring and optimizing as it is an iterative process, not a one-time effort.

How can we help?

Simform’s team has years of experience addressing database challenges in the cloud. Our certified experts help businesses pick the strategy that best suits their needs and implement it iteratively. We also help companies optimize their storage, performance, and cost efficiency so they can focus on other aspects of their business. If that is what you are looking for, speak to our experts today!