A few readers of my previous blog post on unit testing had varied opinions on its utility. Some went so far as to say that unit testing is a huge waste of time, whereas functional testing is what really helps them find real-world bugs.

I strongly believe that unit testing has its own place in the software development lifecycle, but its results show up implicitly, in the form of code quality. When it comes to delivering quality software, functional testing has the highest ROI, since this type of testing runs rigorous tests with real data. Functional testing verifies that the software performs its stated functions in the way users expect.

The process of functional testing involves a series of tests: Smoke, Sanity, Integration, Regression, Interface, System and finally User Acceptance Testing. Each feature of the software is tested to determine its behavior, using a combination of inputs that simulate normal operating conditions as well as deliberate anomalies and errors.

Thus, after rigorous functional testing, you get software with a consistent user interface, proper integration with business processes, and a well-designed API with robust security and networking features.

What is functional testing?

Functional testing is a type of testing in which each part of the system is tested against the functional specification/requirements. For instance, it seeks answers to questions such as:

- Are you able to log in to the system after entering correct credentials?

- Does your payment gateway show an error message when you enter an incorrect card number?

- Does your “add a customer” screen successfully add a customer to your records?

These questions are only a small sample of what full-fledged functional testing of a system covers.

Functional Testing Process with Example

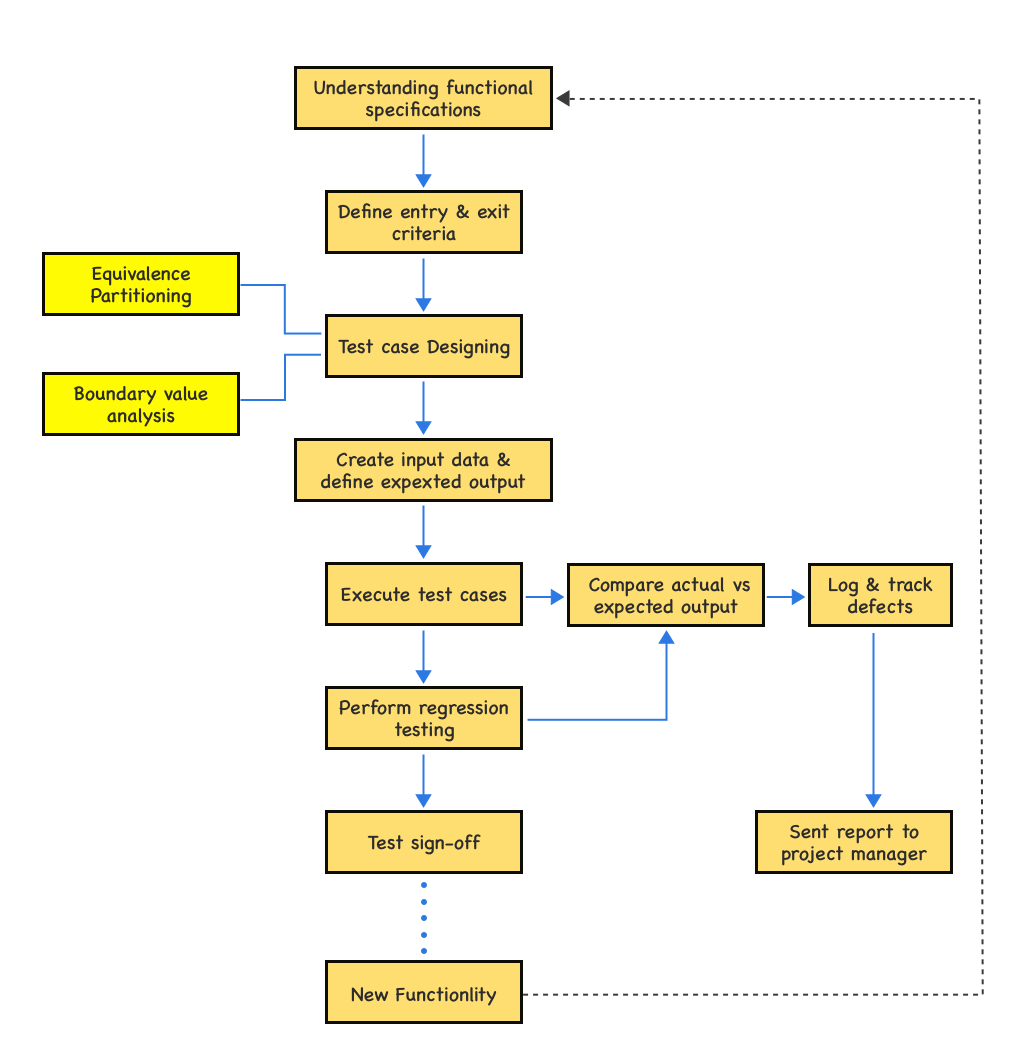

Functional testing aims to address the core purpose of the software and hence it relies heavily on the software’s requirements. Thus, like requirements, testing scenarios and test cases require a great deal of organization.

For any given scenario, like “login,” you have to understand the inputs, their expected outputs, and how to navigate the relevant part of the application. Let’s walk through a step-by-step functional testing example for an online payment process. I’ve divided the whole process into 10 steps, but the breakdown may vary depending on the functionality and the organization's testing process.

1. Testing Goals

In functional testing, we can describe goals as the intended outputs of the software testing process. The main goal of functional testing is to check how closely each feature works according to the specifications. For better understanding, we can divide functional testing goals into two parts: validation testing and defect testing.

Validation testing:

- To demonstrate to the developer and the client that the software meets its requirements.

- A successful test should show that the system works as intended.

Defect testing:

- To discover defects in the functionality, such as in the user interface, error messages and text handling.

- A successful test should expose defects wherever the functionality does not work as expected.

For example, from testing the checkout process, we expect the following goals to be accomplished:

- The payment gateway should securely encrypt sensitive information such as the card number, account holder name, CVV number, and password.

- This information should be transmitted securely from the customer to the merchant.

- The system should show an error message when wrong details are entered.

- The user should get a confirmation message on a successful transaction.

2. Team Member Assignments

The testing team must be properly structured, with defined roles and responsibilities that allow the testers to perform their functions with minimal overlap. One way to divide testing resources is to assign features in order of priority, from high to low. The test manager should also account for the possibility that the team has more roles to fill than it has members.

3. Scope

In this step, the QA team makes a list of the features that will be tested. In our functional testing example, we will test the following features:

- Payment gateway

- Debit/Credit Card Options

- Notification/OTP check

4. Selection of functional testing tool

At this stage, the QA team and the project manager select the right functional testing tools based on the project requirements.

5. Identify test scenarios

In this step, we list all the possible test scenarios for the given specification. A ‘test scenario’ is a summary of a product’s functionality, i.e. what will be tested. Test cases are then prepared based on these scenarios.

Here is the list of possible scenarios for our payment gateway example.

- User data transmitted to the gateway must be sent over a secure (HTTPS or other) channel.

- Some applications offer to store the user's card information. In that case, the system should store the card information in an encrypted format.

- Check validation of all mandatory fields. The system should not proceed with the payment if data for any field is missing.

- Test with Valid Card Number + Valid Expiry Date + Invalid CVV Number.

- Test with Valid Card Number + Invalid Expiry Date + Valid CVV Number.

- Test with Invalid Card Number + Valid Expiry Date + Valid CVV Number.

- Test all payment options. Each payment option should trigger its respective payment flow.

- Test with multiple currency formats (if available).

- Test with blocked card information.

- Try to submit the payment information after the session has timed out.

- From the payment gateway confirmation page, click the browser's Back button to check whether the session is still active.

- Verify that the end user gets a notification email upon successful payment.

- Verify that the end user gets a notification email stating the reason upon payment failure.

- Test the authorization receipt after successful payment, and verify all of its fields carefully.

Out of all the above scenarios, we will consider only payments made via credit/debit card.
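The three card-field combinations in the scenario list lend themselves to a data-driven check: one validation function, one table of scenarios, one loop. This is a minimal sketch that assumes a hypothetical `validate_card` function standing in for the gateway's form validation, with assumed rules (a 16-digit number that passes the Luhn checksum, an `MM/YY` expiry that is not in the past, and a 3-digit CVV). A real gateway's rules may differ.

```python
from datetime import date
from typing import List


def luhn_ok(number: str) -> bool:
    """Return True if `number` is all digits and passes the Luhn (mod 10) checksum."""
    if not number.isdigit():
        return False
    total = 0
    # Walk from the rightmost digit, doubling every second digit.
    for i, ch in enumerate(reversed(number)):
        d = int(ch)
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def validate_card(number: str, expiry: str, cvv: str, today: date) -> List[str]:
    """Hypothetical validator: returns the names of rejected fields (empty = valid)."""
    errors: List[str] = []
    # Assumption: 16-digit numbers that pass the Luhn checksum.
    if len(number) != 16 or not luhn_ok(number):
        errors.append("card_number")
    # Assumption: expiry is "MM/YY" and must not be in the past.
    try:
        month_text, year_text = expiry.split("/")
        month, year = int(month_text), 2000 + int(year_text)
        if not 1 <= month <= 12 or (year, month) < (today.year, today.month):
            errors.append("expiry")
    except ValueError:
        errors.append("expiry")
    # Assumption: a 3-digit CVV (some card brands use 4 digits).
    if not (len(cvv) == 3 and cvv.isdigit()):
        errors.append("cvv")
    return errors


TODAY = date(2024, 6, 1)             # fixed "today" keeps the test deterministic
VALID_NUMBER = "4111111111111111"    # well-known Luhn-valid Visa test number
SCENARIOS = [
    # (scenario, card number, expiry, CVV, fields expected to be rejected)
    ("Valid number + valid expiry + invalid CVV", VALID_NUMBER, "12/30", "12", ["cvv"]),
    ("Valid number + invalid expiry + valid CVV", VALID_NUMBER, "01/20", "123", ["expiry"]),
    ("Invalid number + valid expiry + valid CVV", "4111111111111112", "12/30", "123", ["card_number"]),
]

for name, number, expiry, cvv, expected in SCENARIOS:
    actual = validate_card(number, expiry, cvv, TODAY)
    status = "PASS" if actual == expected else "FAIL"
    print(f"{status}: {name} -> rejected {actual}")
```

Adding a scenario means adding one row to `SCENARIOS`, which keeps the test data separate from the test logic.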

6. Create input data

You can keep test data in an Excel sheet and enter it manually while executing test cases, or automation tools can read it automatically from files (XML, flat files, databases, etc.). There are mainly two types of input data.

Fixed input data

- Fixed input data is available before the start of the test, and can be seen as part of the test conditions.

Consumable input data

- Consumable input data forms the test input itself, i.e. the data supplied to the system while the test runs.

In our example, we have to create the input data manually. We’ll need the following types of data:

- Card type

- 16-digit card number

- CVV number

- Expiry date

- Name on the card

You can use https://www.getcreditcardnumbers.com/ to generate card numbers for testing.
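If you would rather generate card numbers inside your test suite, a common approach is to build numbers that pass the Luhn (mod 10) checksum, which card-number validators typically apply. This is a minimal sketch; `make_test_card` and its defaults (prefix `4`, 16 digits) are illustrative assumptions, and the numbers are only for test environments. Many payment gateways also publish their own sandbox card numbers, which are usually the safer choice when testing against a real gateway.

```python
import random
from typing import Optional


def luhn_check_digit(partial: str) -> str:
    """Return the digit that makes `partial + digit` pass the Luhn checksum."""
    total = 0
    # Once the check digit is appended, the rightmost digit of `partial`
    # lands in a doubled position, so doubling starts at index 0 here.
    for i, ch in enumerate(reversed(partial)):
        d = int(ch)
        if i % 2 == 0:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return str((10 - total % 10) % 10)


def make_test_card(prefix: str = "4", length: int = 16,
                   rng: Optional[random.Random] = None) -> str:
    """Build a Luhn-valid number for a test environment (not a real card)."""
    rng = rng or random.Random()
    body_len = length - len(prefix) - 1  # reserve one digit for the check digit
    body = prefix + "".join(str(rng.randint(0, 9)) for _ in range(body_len))
    return body + luhn_check_digit(body)


rng = random.Random(7)  # fixed seed so the data set is reproducible between runs
for _ in range(3):
    print(make_test_card(rng=rng))
```

Seeding the generator means a failing test can be re-run with exactly the same card numbers.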

7. Design test cases to compare the output

| TC ID | Specifications | Steps To Execute | Expected Result |
|---|---|---|---|
| TC-01 | Check the Name on Card, Card Number, Expiration date and CVV. | 1. Select the card type; 2. Do not fill in any information; 3. Click the Submit button | It should show the validation message for all the required fields |
| TC-02 | Check the error message when invalid input is entered in the mandatory fields | 1. Select the card type; 2. Enter an invalid card number [check the CVV and expiration date as well]; 3. Click the Submit button | It should show an error message for the invalid details |
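TC-01 and TC-02 can be automated once the form logic is reachable from a test. In this sketch, `submit_card_form` is a hypothetical stand-in for the checkout form handler (it maps each problem field to a message), and the message texts and the 16-digit rule are assumptions. In a real project, the same assertions would run against the UI or API through your chosen functional testing tool.

```python
from typing import Dict

REQUIRED_FIELDS = ("card_type", "name_on_card", "card_number", "expiry", "cvv")


def submit_card_form(form: Dict[str, str]) -> Dict[str, str]:
    """Hypothetical form handler: returns {field: message} for every problem found."""
    messages: Dict[str, str] = {}
    for name in REQUIRED_FIELDS:
        if not form.get(name, "").strip():
            messages[name] = "This field is required"
    number = form.get("card_number", "")
    # Assumed rule: a card number is exactly 16 digits.
    if number and not (number.isdigit() and len(number) == 16):
        messages["card_number"] = "Invalid card number"
    return messages


def test_tc01_empty_fields_show_required_messages():
    # TC-01: select the card type, fill in nothing else, submit.
    result = submit_card_form({"card_type": "VISA"})
    assert set(result) == {"name_on_card", "card_number", "expiry", "cvv"}


def test_tc02_invalid_card_number_shows_error():
    # TC-02: valid-looking data except for the card number.
    form = {"card_type": "VISA", "name_on_card": "Test User",
            "card_number": "1234", "expiry": "12/30", "cvv": "123"}
    assert submit_card_form(form) == {"card_number": "Invalid card number"}


if __name__ == "__main__":
    test_tc01_empty_fields_show_required_messages()
    test_tc02_invalid_card_number_shows_error()
    print("TC-01 and TC-02 passed")
```

The test functions follow pytest's `test_` naming convention, so pytest would collect them as well; the `__main__` block lets the file run on its own.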

8. Execute Test Cases

Comparing a test case's expected result with its actual result (obtained from the test execution run) determines whether the test has a ‘Pass’ or ‘Fail’ status.

Test Case status definitions are:

Passed (P): Test run-result matches the expected result.

Failed (F): The test run result did not match the expected result, or it matched but caused another problem. A defect must be logged and referenced for every failed test case.

Not Run (NR): The test has not yet been executed. In a test case database, all tests start with a default status of ‘NR’.

In Progress (IP): The test has been started, but not all of its steps have been completed.

Investigating (I): The test has been run, but it is still being investigated whether to declare it passed or failed.

Blocked (B): The test cannot be executed due to a blocking issue. For example, some test cases can’t be run because of hardware issues.
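The status definitions above map naturally onto a small record per test case. This sketch (the class and field names are hypothetical, not from any particular test management tool) encodes two of the rules: every test starts as NR, and a failed test must reference a defect.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Status(Enum):
    PASSED = "P"
    FAILED = "F"
    NOT_RUN = "NR"
    IN_PROGRESS = "IP"
    INVESTIGATING = "I"
    BLOCKED = "B"


@dataclass
class TestCaseRun:
    tc_id: str
    expected: str
    status: Status = Status.NOT_RUN  # every test starts as NR
    defect_id: Optional[str] = None

    def record(self, actual: str, caused_other_problem: bool = False,
               defect_id: Optional[str] = None) -> Status:
        """Compare actual vs expected and set P or F.

        IP, I and B are set directly by the tester, e.g. run.status = Status.BLOCKED.
        """
        if actual == self.expected and not caused_other_problem:
            self.status = Status.PASSED
            return self.status
        # Failed: the result differs, or it matched but caused another problem.
        if defect_id is None:
            raise ValueError(f"{self.tc_id}: a failed test case must reference a defect")
        self.status = Status.FAILED
        self.defect_id = defect_id
        return self.status


run = TestCaseRun("TC-02", "Invalid card number error shown")
print(run.status)                                     # Status.NOT_RUN
print(run.record("Invalid card number error shown"))  # Status.PASSED
```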

9. Defect tracking system

When defects are found, the testers file a defect report in the defect tracking system (e.g. JIRA). The defect tracking system is accessible to testers, developers and all other members of the project team. When a defect has been fixed or more information is needed, the developer changes the status of the defect to indicate its current state. Once the testers verify that a defect is fixed, they close it.

Defect Categories:

Defects found during testing can be categorized by severity. For the given example, I’ve categorized the defects as follows:

- Blocker: The user cannot select the card type and hence can’t proceed further.

- Major: After the submit button is clicked, authorization is not requested from the customer’s issuing bank, which confirms the cardholder’s validity.

- Minor: The “Invalid Card Number” error message is not shown when a user enters a wrong card number.

- Trivial (Cosmetic): The cursor does not move to the next box when the user presses the Tab key.

- Enhancement: The error message is black instead of red. This is not related to a business requirement and can be fixed in the next testing phase.

10. Test Status Reporting

This report summarizes the testing activities of a specific functional area for the project manager.

- For all Test Cases in the failed state, provide a total count of problems in each of the severity levels (1-5).

- For all Test Cases in the failed state, provide a description of each open problem with a severity level of 1 or 2 and current status.

- For all Test Cases in Investigating, Blocked, or Deferred, provide comments (indicating the RT ticket, if applicable, and the reason for the block or deferment).

The actual output, i.e. the output after executing the test case, and the expected output (determined from the requirement specification) are compared to determine whether the functionality works as expected.
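The three reporting items above can be computed directly from the execution results. This sketch assumes a mapping of this article's defect categories onto severity levels 1-5 (Blocker = 1 through Enhancement = 5), which is an illustration rather than a standard. The sample defects come from the example above; the test case IDs other than TC-01 and TC-02 are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List, Optional

# Assumed mapping of this article's defect categories onto severity levels 1-5.
SEVERITY = {"Blocker": 1, "Major": 2, "Minor": 3, "Trivial": 4, "Enhancement": 5}
NEEDS_COMMENT = {"Investigating", "Blocked", "Deferred"}


@dataclass
class Result:
    tc_id: str
    status: str                     # e.g. "Passed", "Failed", "Blocked"
    severity: Optional[str] = None  # required for failed tests
    description: str = ""
    defect_status: str = ""         # current state in the defect tracker
    comment: str = ""               # ticket or reason for block/deferment


def status_report(results: List[Result]) -> Dict[str, object]:
    failed = [r for r in results if r.status == "Failed"]
    counts = Counter(SEVERITY[r.severity] for r in failed)
    return {
        # 1) number of failed test cases at each severity level
        "failed_by_severity": {level: counts.get(level, 0) for level in range(1, 6)},
        # 2) severity 1-2 problems with their current status
        "severity_1_2": [(r.tc_id, r.description, r.defect_status)
                         for r in failed if SEVERITY[r.severity] <= 2],
        # 3) comments for Investigating, Blocked or Deferred test cases
        "needs_comment": [(r.tc_id, r.status, r.comment)
                          for r in results if r.status in NEEDS_COMMENT],
    }


results = [
    Result("TC-03", "Failed", "Major", "Authorization not requested from issuing bank", "Open"),
    Result("TC-04", "Failed", "Blocker", "Card type cannot be selected", "Open"),
    Result("TC-02", "Failed", "Minor", "No 'Invalid Card Number' message", "Fixed"),
    Result("TC-05", "Blocked", comment="Test hardware unavailable"),
    Result("TC-01", "Passed"),
]
print(status_report(results))
```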

Types of Functional Testing

QA team performs different types of functional testing during the software testing life cycle. Here I’ve listed some of the essential functional testing types.

Sanity Testing

Sanity testing is performed when testers don’t have enough time for testing. It is surface-level testing in which the QA engineer verifies that all the menus, functions and commands available in the product are working fine.

Smoke testing

Smoke testing is a kind of software testing performed after all the software modules are built. It is done to ascertain that the critical functionalities of the program are working fine. It is executed “before” any detailed functional or regression tests are run on the software build. The purpose is to reject a badly broken application so that the QA team does not waste time installing and testing it.
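A smoke suite is usually a short, ordered list of critical checks, and a single failure is enough to reject the build. In this minimal sketch the check names are hypothetical and the lambdas stand in for real checks, such as loading the home page or opening the payment page through your functional testing tool.

```python
from typing import Callable, Dict


def run_smoke_suite(checks: Dict[str, Callable[[], bool]]) -> bool:
    """Run critical checks in order; the first failure rejects the build."""
    for name, check in checks.items():
        try:
            ok = check()
        except Exception as exc:  # a crash in a critical check counts as a failure
            print(f"SMOKE FAIL: {name} raised {exc!r}")
            return False
        if not ok:
            print(f"SMOKE FAIL: {name}")
            return False
        print(f"smoke ok: {name}")
    return True


# Hypothetical checks; real ones would exercise the deployed build.
critical_checks = {
    "home page loads": lambda: True,
    "user can log in": lambda: True,
    "payment page opens": lambda: True,
}

if run_smoke_suite(critical_checks):
    print("Build accepted for detailed functional testing")
else:
    print("Build rejected")
```

Stopping at the first failure is deliberate: the goal is a fast accept/reject decision, not a full defect list.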

Regression tests

In regression testing, test cases are re-executed to check whether previously working functionality still works and the new changes have not introduced any new bugs. It is performed on newly built code whenever the original functionality changes, even when the change is a single bug fix.

Integration tests

The most common use of the concept of integration testing is directly after unit testing. A unit will be developed, it will be tested by itself during the unit test, and then it will be integrated with surrounding units in the program. Remember, the point of integration testing is to verify proper functionality between components, not to retest every combination of lower-level functionality.

Beta/Usability testing

Functional testing confirms whether something works. Usability testing confirms whether it works well from the user’s perspective. Functional testing precedes usability testing so that the feedback from testers using the application is valid. Usability testing is often the last step before a feature or software goes live. Usability tests make sure that all the individual modules of the software meet user needs.

Acceptance Testing

Acceptance testing is a quality assurance procedure used to evaluate how well an application meets user expectations and earns user approval. Beta, app, field, and end-user testing are all forms of acceptance testing.

API Testing

Application programming interface (API) testing entails examining the API of software systems to make sure that data gets exchanged successfully and that the API performs as intended.

UI Testing

UI testing entails examining how the users interact with the software system’s user interface to make sure that actions taken on the UI result in the desired behavior.

Black Box testing

Black box testing, also known as Behavioral testing, is a method wherein the designated testers are not aware of the system’s internal code structure.

Difference Between Functional Testing and Non-functional Testing

Functional testing:

In functional testing, the testers evaluate the software against its functional requirements and specifications. Functional testing is focused on the results and ensures that each function of the software works according to the expected standards.

Non-functional testing:

During non-functional testing, the emphasis is on ensuring the quality of the non-functional components of the software. Here, the testers test all the aspects that are not examined in functional testing.

Here is a quick comparison highlighting the major differences between functional and non-functional testing methods.

| Functional Testing | Non-functional Testing |
|---|---|
| It tests whether the features act in accordance with the expected result. | It checks the non-functional aspects like the speed of loading and response time. |
| Testers carry out functional testing using the functional specifications. | Testers carry out non-functional testing using the performance specifications. |
| It is easy to define the functional requirements for comprehensive testing. | It is difficult to define all the requirements for non-functional testing. |
| It is based on what the customer wants. | It is based on what the customer anticipates. |
| Conducting functional testing manually is easy. | Manually carrying out non-functional testing is challenging. |

Functional Testing Best Practices

Start writing test cases early in the requirement analysis & design phase

If you start writing test cases during an early phase of the Software Development Life Cycle, you will find out whether all requirements are testable. When writing test cases, first consider valid/positive test cases that cover all expected behavior of the application under test. After that, consider invalid conditions/negative test cases.

Keep balance across testing types

You can’t automate all types of functional testing. For example, system tests and user acceptance testing (UAT) require manual effort. The reality is that both manual and automated testing are usually necessary to deliver a quality product. You must balance your manual and automated testing to achieve both deployment speed and software quality.

Automated functional testing

Automated tests help you avoid repetitive manual work, get faster feedback, and save time on running the same tests over and over again. It is impossible to automate all test cases, so it is important to determine which test cases should be automated first.

However, it’s hard to decide which tests to automate, as the choice is largely subjective and depends on the functionality of the app or software. To get the best ROI, I’ve listed some common parameters for selecting test cases for a test automation framework.

- Test case is executed with different sets of data

- Test case is executed on different browsers

- Test case is executed in different environments

- Test case involves complex business logic

- Test case is executed with different sets of users

- Test case involves a large amount of data

- Test case has dependencies

- Test case requires special data

You should test the thing that makes you money first and should test supporting functionalities later. The place where you make money is the place that will have the largest demand for new and changing functionality. And where things change the most is where you need tests to protect against regressions.
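One way to turn the selection parameters and the “money first” advice into an ordering is a simple score: revenue-critical functionality first, then the test cases that match the most parameters. The equal weighting and the flag names are assumptions for illustration, not a standard formula, and TC-10 and TC-11 are hypothetical test cases.

```python
from dataclasses import dataclass, field
from typing import List, Set

# The selection parameters listed above, as illustrative flag names.
CRITERIA = {
    "multiple_data_sets", "multiple_browsers", "multiple_environments",
    "complex_business_logic", "multiple_users", "large_data",
    "has_dependencies", "special_data",
}


@dataclass
class Candidate:
    tc_id: str
    flags: Set[str] = field(default_factory=set)
    revenue_critical: bool = False  # part of "the thing that makes you money"?


def automation_priority(candidates: List[Candidate]) -> List[Candidate]:
    """Revenue-critical tests first, then those matching the most parameters."""
    for c in candidates:
        unknown = c.flags - CRITERIA
        if unknown:
            raise ValueError(f"{c.tc_id}: unknown criteria {sorted(unknown)}")
    return sorted(candidates, key=lambda c: (not c.revenue_critical, -len(c.flags)))


CANDIDATES = [
    Candidate("TC-10", {"multiple_browsers"}),
    Candidate("TC-02", {"multiple_data_sets", "multiple_browsers"}, revenue_critical=True),
    Candidate("TC-11", {"large_data", "special_data", "multiple_users"}),
]
print([c.tc_id for c in automation_priority(CANDIDATES)])  # ['TC-02', 'TC-11', 'TC-10']
```

Because `sorted` is stable, test cases with equal scores keep their original order, so ties can be broken simply by how you list them.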

Understanding How the User Thinks

The main distinction between QA and development is a state of mind. While developers write pieces of code that later become features of the application, testers are expected to understand how the application satisfies user needs.

Let’s take eBay as an example. A mature online platform like this has different types of users, such as sellers, buyers and support agents. When planning tests, all of these personas must be taken into consideration, with a dedicated test plan for each.

Create a Traceability Matrix

A Requirement Traceability Matrix (RTM) captures all the requirements proposed by the client or the software development team, and their traceability, in a single document delivered at the conclusion of the life cycle.

In other words, it is a document that maps and traces user requirements to test cases. The main purpose of an RTM is to ensure that every requirement is covered by test cases, so that no major functionality is missed during software testing.
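An RTM is often kept as a spreadsheet, but its core check, finding requirements that no test case covers, is easy to sketch in code. The requirement IDs and descriptions below are hypothetical, based on the payment example.

```python
from typing import Dict, List, Set


def build_rtm(requirements: Dict[str, str],
              test_cases: Dict[str, Set[str]]) -> Dict[str, List[str]]:
    """Map each requirement ID to the test cases that verify it."""
    rtm: Dict[str, List[str]] = {req_id: [] for req_id in requirements}
    for tc_id, req_ids in sorted(test_cases.items()):
        for req_id in sorted(req_ids):
            if req_id not in rtm:
                raise ValueError(f"{tc_id} traces to unknown requirement {req_id}")
            rtm[req_id].append(tc_id)
    return rtm


def uncovered(rtm: Dict[str, List[str]]) -> List[str]:
    """Requirements with no test case: the gaps the RTM is meant to expose."""
    return [req_id for req_id, tc_ids in rtm.items() if not tc_ids]


REQUIREMENTS = {
    "REQ-1": "Show validation messages when mandatory card fields are empty",
    "REQ-2": "Show an error message for invalid card details",
    "REQ-3": "Send a notification email on successful payment",
}
TEST_CASES = {"TC-01": {"REQ-1"}, "TC-02": {"REQ-2"}}

rtm = build_rtm(REQUIREMENTS, TEST_CASES)
print(rtm)             # {'REQ-1': ['TC-01'], 'REQ-2': ['TC-02'], 'REQ-3': []}
print(uncovered(rtm))  # ['REQ-3']
```

The check runs in both directions: `uncovered` finds requirements without tests, and `build_rtm` rejects test cases that trace to a requirement that does not exist.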

Conclusion

As a product manager, you need to understand that not every function or requirement needs to be tested, and in fact not everything can be tested. High-priority, “big rock” items need testing, preferably automated testing, before release. “The rest” can be completed as time allows, and should be prioritized according to the importance of the functionality under test.

“At Simform, we believe – Not done testing? Don’t ship it.”

If you’ve determined that a certain set of tests is necessary for release, then stick to it and hustle if you haven’t finished testing. Functional testing is basic and essential. We have expertise in all types of software testing methodologies, and we use agile testing in our organization.

We have gained years of expertise in providing software testing services across the globe, helping our clients deliver their best.
