SneakPeek: An AI-Based Platform for Predicting User Preferences

Category: Social Networking

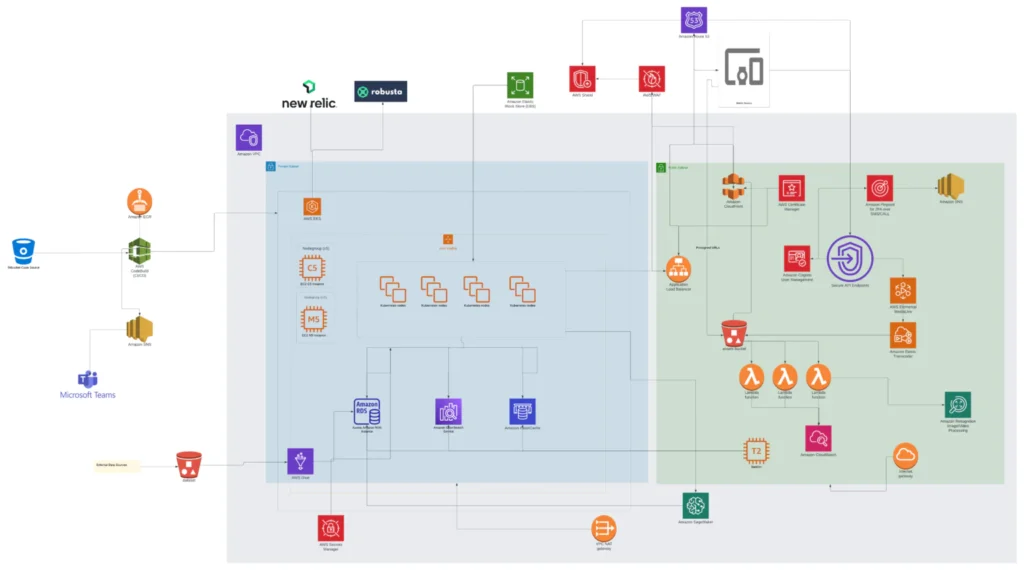

Services: Gen AI Development, Architecture Design and Review, Managed Engineering Teams

SneakPeek is a location-based social networking platform that lets users interact with their local community, discover new places, and follow others in their area. It offers personalized content, location-specific tags, and filters, and brands can use it to connect with their local audiences. SneakPeek wanted to build a generative AI platform that creates personalized content for each user based on their preferences, location history, and engagement patterns.

Hiren Dhaduk

Creating a tech product roadmap and building scalable apps for your organization.