Data Design: A Smart Data Reporting Solution That Empowers Schools

Category: Education

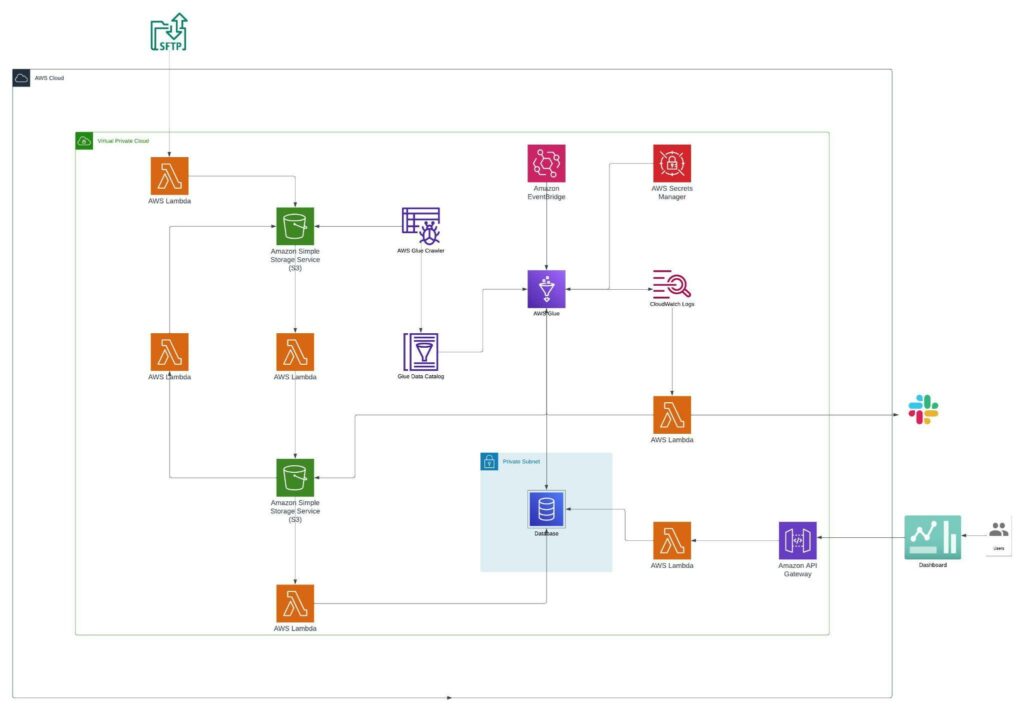

Services: Managed Engineering Teams, Cloud Architecture Design and Review, Data Processing, Data Ingestion, and Data Governance

DataDesign.io provides data management and reporting solutions for educational institutions. Its platform streamlines communication among students, parents, and teachers, saving them valuable time.