Data Design: A Smart Data Reporting Solution That Empowers Schools

Category: Education

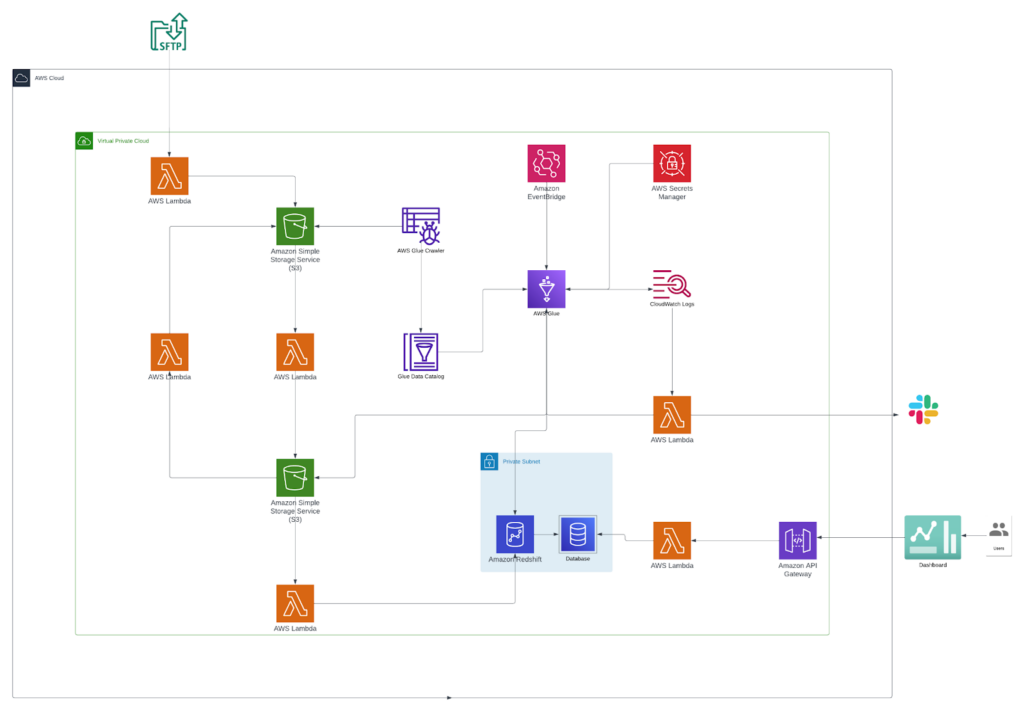

Services: Cloud architecture design and review, Resilience, Data recovery planning and implementation, Managed engineering teams, Data catalog, Data lake, Data warehousing, Data modeling and schema design, Cost optimization