2020 was a particularly challenging year for managing cloud usage, as the sudden shift to remote work pushed organizations to accelerate cloud adoption.

According to Gartner’s press release on cloud spending forecasts, cloud spending is projected to make up 14% of the total global enterprise IT spending market in 2024, up from 9% in 2020. Another report from the firm states that public cloud spending will exceed 45% of all enterprise IT spending, up from less than 17% in 2021.

Emerging technologies such as containerization, edge computing, and virtualization are becoming mainstream and are playing a crucial role in increasing cloud spending. According to Sid Nag, research vice president at Gartner, the pandemic served as a multiplier for CIOs’ interest in the cloud.

Considering organizations’ growing inclination toward cloud-based technologies, you might want to start optimizing your cloud operations or formulating a plan to establish a Cloud Center of Excellence.

You can control cloud costs to a great extent by understanding the technicalities required for cloud financial management and cloud economics.

So let’s get started: establish proper cloud governance, increase visibility into cloud migration costs, and start observing your cloud bill and usage reports.

AWS cloud cost optimization best practices for compute

The majority of spending for most users is on compute, making it a key area of focus for cutting costs.

1. Downsize under-utilized instances

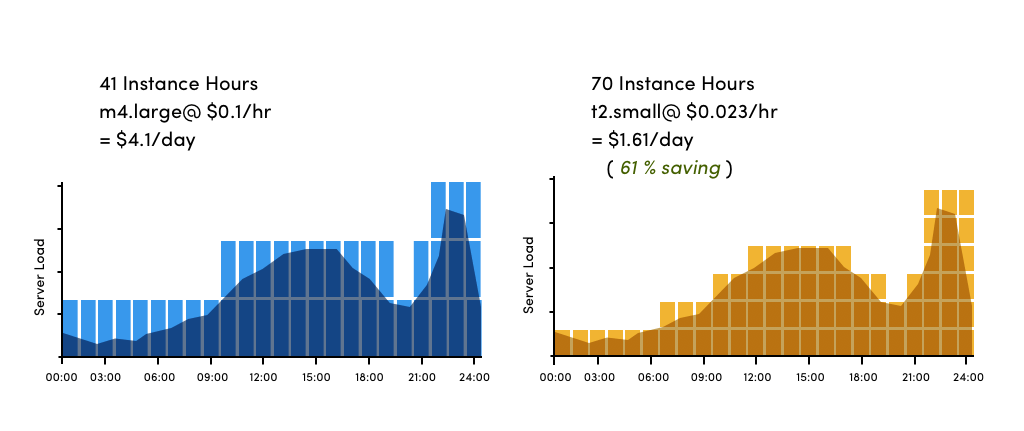

Downsizing by one size within an instance family reduces costs by about 50 percent. Let’s take the example of m4.large and t2.small.

As shown in the image, m4.large is less than 50% utilized between midnight and 9 am. Server usage increases from 9 am to 2 pm, when two m4.large instances are required. Usage then decreases until 6 pm and increases again at night. The graph makes clear that during certain periods a single m4.large is not even 50% utilized. This setup costs $4.10/day.

Under-utilized instances should be considered candidates for downsizing by one or two instance sizes. Keep in mind that each step down within an instance family cuts costs by about 50 percent, so dropping two sizes cuts them by about 75 percent.

Based on the server load graph, t2.small lets us match capacity to load much more closely. Because t2.small has half the compute capacity of m4.large, the finer granularity means 70 t2.small instance-hours are needed instead of 41 m4.large instance-hours. By switching to t2.small instances, decision-makers can save more than 60% of the instance cost.
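The savings figure can be checked with a little arithmetic. This sketch assumes the 41 and 70 figures are instance-hours per day, and it uses assumed on-demand Linux prices of $0.10/hour for m4.large and $0.023/hour for t2.small; check current pricing for your region before relying on the result.

```python
# Assumed on-demand hourly prices (USD); verify against current AWS pricing.
PRICE_PER_HOUR = {"m4.large": 0.10, "t2.small": 0.023}


def daily_cost(instance_type: str, instance_hours: float) -> float:
    """Cost of `instance_hours` hours of `instance_type` in one day."""
    return PRICE_PER_HOUR[instance_type] * instance_hours


def savings_fraction(old_cost: float, new_cost: float) -> float:
    """Fraction of the old cost saved by moving to the new setup."""
    return 1 - new_cost / old_cost


before = daily_cost("m4.large", 41)  # 41 m4.large instance-hours per day
after = daily_cost("t2.small", 70)   # 70 t2.small instance-hours per day
print(f"before ${before:.2f}/day, after ${after:.2f}/day, "
      f"saving {savings_fraction(before, after):.0%}")
```

Under these assumed prices, the $4.10/day figure above corresponds exactly to 41 m4.large instance-hours.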

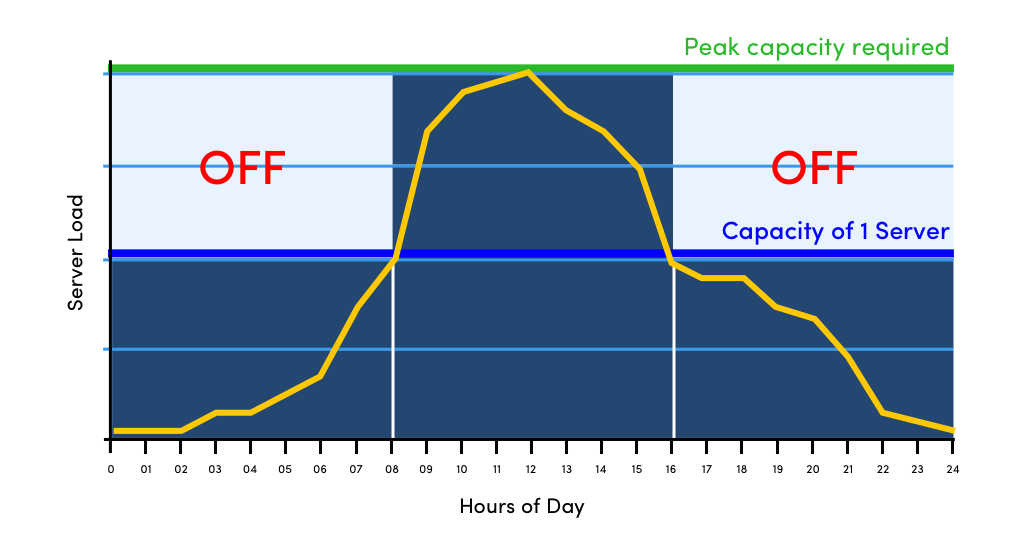

2. Turn off idle resources

Organizations often provision instances for peak demand, but demand is not constant. As demand varies, organizations end up paying for instances they are not using. To fully optimize cloud spend, turn unused instances off. The image below shows how server load varies over a particular day and when instances can be turned off.

As you can see in the image, from hour 0 to 8 the server load can be handled by one instance, so you can turn off the second instance to save cost. During the next 8 hours, the load increases and requires two instances. From hour 16 to 24, the load decreases again and you can turn off the second instance.
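Counting instance-hours shows what this schedule saves compared with leaving both instances on all day. The sketch assumes the three 8-hour blocks described above and identical per-instance pricing.

```python
# Instances needed in each 8-hour block of the day, as described above.
required = {(0, 8): 1, (8, 16): 2, (16, 24): 1}


def instance_hours(schedule: dict) -> int:
    """Total instance-hours when running exactly what each block needs."""
    return sum((end - start) * count for (start, end), count in schedule.items())


always_on = 24 * max(required.values())  # both instances running all day
scheduled = instance_hours(required)
print(always_on, scheduled, f"{1 - scheduled / always_on:.0%} saved")
```

Here 48 instance-hours drop to 32, a one-third reduction.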

Production instances should be auto-scaled to meet demand. Shutting down development and test instances in the evenings and on weekends, when developers are no longer working, can save 65 percent or more of their costs. Instances for training, demos, and development must be terminated once projects are completed.
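An off-hours schedule only needs a rule for when dev/test instances should be up. This minimal sketch assumes a hypothetical weekday 08:00-20:00 working window; a scheduled job could call `should_run` and stop or start instances accordingly.

```python
from datetime import datetime

# Hypothetical working window for dev/test instances: weekdays, 08:00-20:00.
WORK_START, WORK_END = 8, 20


def should_run(now: datetime) -> bool:
    """True if a dev/test instance should be running at `now`."""
    is_weekday = now.weekday() < 5  # Monday=0 ... Friday=4
    return is_weekday and WORK_START <= now.hour < WORK_END


def weekly_savings() -> float:
    """Fraction of the 168 weekly hours during which instances are stopped."""
    running_hours = 5 * (WORK_END - WORK_START)
    return 1 - running_hours / (7 * 24)


print(should_run(datetime(2024, 1, 8, 10)))  # a Monday morning
print(should_run(datetime(2024, 1, 6, 10)))  # a Saturday
print(f"{weekly_savings():.0%} of instance-hours avoided")
```

With this window, instances run 60 of 168 weekly hours, so about 64 percent of instance-hours are avoided, in line with the figure quoted above.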

3. Delete unused EBS volumes

Another opportunity to cut spending on unused resources is to keep track of unused EBS volumes and delete them. Because EBS volumes exist independently of Amazon EC2 instances, a volume can remain even after its associated EC2 instance is terminated.

To have a volume deleted along with its instance, select the “Delete on Termination” option at launch. Also, instances that are spun up and down may leave EBS volumes behind if no workflow is in place to delete them automatically.

Identify these volumes using the Trusted Advisor Underutilized Amazon EBS Volumes check. You can start reducing costs by deleting low-utilization EBS volumes once you have checked whether they are attached to an instance.

You must detach a volume before deleting it, so first check the volume’s status in the console.

If a volume is attached to an instance, it will show an “in-use” state.

If a volume is detached from an instance, it will show the “available” state, and you can delete it.
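The same state check can be scripted. This sketch assumes input shaped like an EC2 DescribeVolumes response (a `Volumes` list whose items carry `VolumeId` and `State`); the volume IDs in the sample are made up.

```python
def deletable_volume_ids(response: dict) -> list[str]:
    """Return IDs of volumes in the "available" (detached) state.

    `response` is expected to look like an EC2 DescribeVolumes response:
    {"Volumes": [{"VolumeId": ..., "State": ...}, ...]}.
    """
    return [
        vol["VolumeId"]
        for vol in response.get("Volumes", [])
        if vol.get("State") == "available"
    ]


# Sample data shaped like a DescribeVolumes response (IDs are made up).
sample = {
    "Volumes": [
        {"VolumeId": "vol-0aaa", "State": "in-use"},
        {"VolumeId": "vol-0bbb", "State": "available"},
        {"VolumeId": "vol-0ccc", "State": "available"},
    ]
}
print(deletable_volume_ids(sample))
```

With boto3, the response could come from `boto3.client("ec2").describe_volumes()` (or its paginator for large accounts), and each returned ID could be passed to `delete_volume(VolumeId=...)` once you have confirmed the data is no longer needed.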

4. Use discounted instances

For cloud cost optimization on Amazon Web Services, it is very important to understand the discount options for instances in detail. With AWS Reserved Instances (RIs), you get a discount in exchange for a one-year or three-year commitment, with the longer commitment giving a higher discount. Discounts range from 24 to 75 percent depending on the RI term, the instance type, and the region.
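Because an RI is billed for the whole term whether or not the instance runs, the discount only pays off above a certain utilization. This minimal sketch assumes a single discount applied uniformly to every hour of the term and ignores upfront-payment options: the RI costs (1 - discount) times the on-demand rate for all hours, while on-demand is billed only for hours used, so the RI wins once utilization exceeds 1 - discount.

```python
def break_even_utilization(discount: float) -> float:
    """Minimum fraction of hours an instance must run for an RI to pay off.

    RI cost over the term:        (1 - discount) * on_demand_rate * hours
    On-demand cost for the term:  utilization * on_demand_rate * hours
    """
    if not 0 <= discount < 1:
        raise ValueError("discount must be in [0, 1)")
    return 1 - discount


for d in (0.24, 0.75):  # the ends of the discount range quoted above
    print(f"{d:.0%} discount -> break-even at "
          f"{break_even_utilization(d):.0%} utilization")
```

At a 24 percent discount the instance must run about 76 percent of the time; at 75 percent, only about 25 percent.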

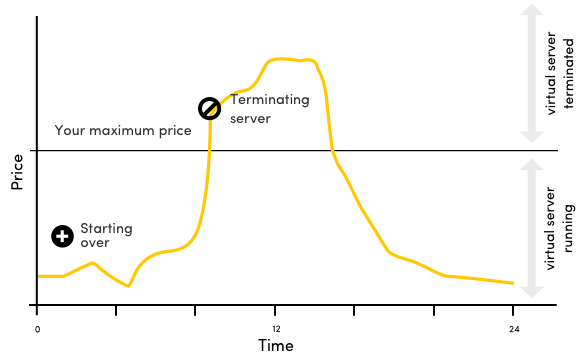

5. AWS spot instances

On the AWS spot market, the traded products are Amazon EC2 instances, delivered by starting an EC2 instance. Savings of 15-60% are very common, and in some cases you can save up to 90% with Spot Instances.

How does it work?

You set a maximum bid price and, optionally, a duration of up to 6 hours. Each hour, you pay the current spot price. When the spot price rises above your specified maximum, the instance is terminated. Be careful: the new Spot console creates a fleet for you, and the fleet remains open even if you terminate the instance yourself.

When you are done, you need to cancel the “spot request” (the fleet) as well as terminate the instance(s). With a specified duration, the instances are guaranteed to remain active for that time, up to 6 hours; note that AWS has since discontinued this fixed-duration option (Spot blocks). See the image below for more detail.
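The pricing rule described above can be simulated in a few lines. This is a simplified model, assuming hourly billing and termination the first hour the spot price exceeds your maximum; the prices are made up, and AWS defines the actual Spot billing and interruption behavior.

```python
def simulate_spot(hourly_prices: list[float], max_price: float) -> tuple[int, float]:
    """Run hour by hour under the simplified model described above.

    Each hour you pay that hour's spot price; the first hour the spot price
    exceeds `max_price`, the instance is terminated and billing stops.
    Returns (hours run, total cost).
    """
    hours, cost = 0, 0.0
    for price in hourly_prices:
        if price > max_price:
            break  # interrupted: spot price rose above our maximum
        hours += 1
        cost += price
    return hours, cost


# Hypothetical hourly spot prices (USD) and a $0.10 maximum price.
prices = [0.03, 0.04, 0.05, 0.12, 0.03]
print(simulate_spot(prices, max_price=0.10))
```

The instance runs for three hours and is terminated when the price jumps to $0.12, even though the price falls again afterwards.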

There are many different spot markets available. A spot market is defined by:

- Instance type (e.g. m3.medium)

- Region (e.g. eu-west-1)

- Availability Zone (e.g. eu-west-1a)

Each spot market has its own current price. So when using Spot Instances, it is an advantage to be flexible across instance types, Availability Zones, or even regions, as this allows you to noticeably lower costs.
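Choosing among markets is then a matter of filtering to the instance types your workload can use and taking the cheapest. The prices below are hypothetical, and the sketch assumes the allowed types are interchangeable for your workload.

```python
# Hypothetical current spot prices (USD/hour) per market:
# (instance type, region, availability zone).
spot_prices = {
    ("m3.medium", "eu-west-1", "eu-west-1a"): 0.0081,
    ("m3.medium", "eu-west-1", "eu-west-1b"): 0.0094,
    ("m4.large", "eu-west-1", "eu-west-1a"): 0.0230,
    ("m3.medium", "us-east-1", "us-east-1c"): 0.0067,
}


def cheapest_market(prices: dict, allowed_types: set[str]) -> tuple:
    """Lowest-priced market among the instance types the workload can use.

    Raises ValueError if no market matches `allowed_types`.
    """
    candidates = {m: p for m, p in prices.items() if m[0] in allowed_types}
    return min(candidates, key=candidates.get)


print(cheapest_market(spot_prices, {"m3.medium", "m4.large"}))
```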

6. Minimizing data transfer costs

Make sure your object storage and compute services are in the same region, because data transfer within the same region is free. For example, AWS charges $0.02/GB to download a file from another AWS region.

If you do a lot of cross-region transfers, it may be cheaper to replicate your object storage bucket to the other region than to download each object across regions every time. Let’s look at an Amazon S3 example.

Suppose 1 GB of data in us-west-2 is expected to be transferred 20 times to EC2 in us-east-1. If you transfer it across regions each time, you will pay $0.40 for data transfer (20 × $0.02). However, if you first copy it to a mirror S3 bucket in us-east-1, you pay just $0.02 for the transfer and $0.03 for storage over a month, a total of $0.05, which is 87.5% cheaper. This feature is built into S3 as Cross-Region Replication. You will also get better performance along with the cost benefit.
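The comparison can be reproduced from the stated inputs: 1 GB, 20 reads, $0.02/GB inter-region transfer, and $0.03/GB-month mirror storage. The sketch assumes reads from the mirror are same-region and therefore free, as described above.

```python
TRANSFER_PER_GB = 0.02               # inter-region transfer price quoted above
MIRROR_STORAGE_PER_GB_MONTH = 0.03   # monthly storage price for the mirror


def direct_cost(gb: float, reads: int) -> float:
    """Every read pulls the data across regions."""
    return gb * reads * TRANSFER_PER_GB


def mirrored_cost(gb: float) -> float:
    """Copy once across regions, then keep the mirror for a month.

    Reads from the mirror stay in-region, so they add no transfer cost.
    """
    return gb * TRANSFER_PER_GB + gb * MIRROR_STORAGE_PER_GB_MONTH


direct, mirrored = direct_cost(1, 20), mirrored_cost(1)
print(f"direct ${direct:.2f}, mirrored ${mirrored:.2f}, "
      f"{1 - mirrored / direct:.1%} cheaper")
```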

If there are a lot of downloads of objects stored in S3 (e.g. images on a consumer site), use CloudFront, the AWS content delivery network.

There are CDN providers, such as Cloudflare, that charge a flat fee. If you have a lot of static assets, a CDN can save money over serving them directly from S3, as only a tiny percentage of the original requests will hit your S3 bucket.
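The effect of a CDN on origin traffic depends on its cache hit ratio. This sketch assumes a hypothetical 95% hit ratio, 10 million monthly downloads, and an assumed S3 GET price of $0.0004 per 1,000 requests; it counts request charges only, not data transfer or the CDN's own fees.

```python
GET_PRICE_PER_1000 = 0.0004  # assumed S3 GET price (USD); check current pricing


def origin_requests(total_requests: int, cache_hit_ratio: float) -> int:
    """Requests that miss the CDN cache and fall through to the S3 bucket."""
    return round(total_requests * (1 - cache_hit_ratio))


def get_cost(requests: int) -> float:
    """S3 GET request charges only (excludes data transfer and CDN fees)."""
    return requests / 1000 * GET_PRICE_PER_1000


total = 10_000_000  # hypothetical monthly downloads
misses = origin_requests(total, cache_hit_ratio=0.95)
print(f"without CDN: {total:,} S3 GETs (${get_cost(total):.2f})")
print(f"with CDN:    {misses:,} S3 GETs (${get_cost(misses):.2f})")
```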

7. Use AWS compute savings plans

Compute Savings Plans automatically apply to EC2, AWS Fargate, and AWS Lambda usage regardless of instance family, region, size, or tenancy. You get a discount of up to 54% compared to on-demand pricing with a one-year, no-upfront Compute Savings Plan.

Use AWS Cost Explorer to manage your AWS costs and usage at monthly or daily granularity. Once you sign up for a Savings Plan, your compute usage is automatically charged at the discounted Savings Plans prices up to your commitment, and any usage beyond the commitment is charged at regular on-demand rates.
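The billing rule can be sketched for a single hour. This is a simplification, assuming one uniform Savings Plans discount (real Savings Plans rates vary by instance type and region) and an hourly commitment expressed in Savings Plans dollars, which is billed whether or not it is used.

```python
def hourly_bill(on_demand_usage: float, commitment: float, discount: float) -> float:
    """Simplified hourly bill under a Savings Plan.

    on_demand_usage: what this hour's usage would cost at on-demand rates.
    commitment: hourly commitment, in discounted Savings Plans dollars.
    discount: assumed uniform discount versus on-demand.
    """
    # On-demand-equivalent usage that the commitment covers at the discount.
    covered = commitment / (1 - discount)
    overflow = max(0.0, on_demand_usage - covered)
    # The commitment is billed every hour; usage beyond it is billed on-demand.
    return commitment + overflow


print(hourly_bill(1.50, commitment=0.50, discount=0.50))  # busy hour
print(hourly_bill(0.40, commitment=0.50, discount=0.50))  # quiet hour
```

The quiet hour shows the trade-off: $0.40 of on-demand usage still costs the full $0.50 commitment, so size the commitment to your steady baseline.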

AWS cloud cost optimization best practices for storage

8. Delete or migrate unwanted files after a certain date

Cloud architects can programmatically configure rules for deleting data or migrating it between storage types, which drastically reduces long-term storage costs. All the major cloud vendors offer this as lifecycle management.

For instance, active data can remain in the Hot tier of Azure Blob Storage. But if certain data begins to show signs of infrequent access, users can program rules to migrate that data to the Cool tier, which has a cheaper storage rate.

Many deployments use object storage for log collection. You can automate deletion with lifecycle rules, for example deleting log objects 7 days after their creation time. Similarly, if you use S3 for backups, it makes sense to delete them after a year. Google Cloud offers the equivalent Object Lifecycle Management feature, and Azure supports blob expiration.
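On S3, the two examples above translate into lifecycle rules. This sketch builds the configuration in the shape accepted by the S3 PutBucketLifecycleConfiguration API; the `logs/` and `backups/` prefixes are hypothetical.

```python
# Lifecycle rules in the shape accepted by S3 PutBucketLifecycleConfiguration.
# The "logs/" and "backups/" prefixes are hypothetical.
lifecycle_configuration = {
    "Rules": [
        {
            "ID": "expire-logs-after-7-days",
            "Filter": {"Prefix": "logs/"},
            "Status": "Enabled",
            "Expiration": {"Days": 7},
        },
        {
            "ID": "expire-backups-after-1-year",
            "Filter": {"Prefix": "backups/"},
            "Status": "Enabled",
            "Expiration": {"Days": 365},
        },
    ]
}

for rule in lifecycle_configuration["Rules"]:
    print(rule["ID"], rule["Filter"]["Prefix"], rule["Expiration"]["Days"])
```

With boto3, this could be applied via `boto3.client("s3").put_bucket_lifecycle_configuration(Bucket="my-bucket", LifecycleConfiguration=lifecycle_configuration)`, where `my-bucket` is a placeholder. The call replaces any existing lifecycle configuration on the bucket, so keep all rules in one `Rules` list.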

9. Compress data before storage

Compressing data reduces your storage requirements and, in turn, your storage costs. A fast compression algorithm such as LZ4 keeps the performance overhead low. LZ4 is a lossless compression algorithm that compresses at 400 MB/s per core (0.16 bytes/cycle).

It features an extremely fast decoder, with speeds of multiple GB/s per core (0.71 bytes/cycle). In many use cases, it also makes sense to use more compute-intensive algorithms such as gzip or Zstandard (zstd), which use more CPU than LZ4 in exchange for better compression ratios. Either way, compressing data can significantly reduce your cloud waste.
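A quick way to gauge how much a data set shrinks is to compress a sample of it. This sketch swaps in Python's standard-library `gzip` module (DEFLATE), because LZ4 and zstd bindings are third-party packages; the log-like sample data is made up, and real ratios depend on your data.

```python
import gzip

# Repetitive, log-like sample data compresses well; real ratios vary.
data = b"2024-01-01T00:00:00Z INFO request served in 12ms\n" * 10_000

compressed = gzip.compress(data, compresslevel=6)
assert gzip.decompress(compressed) == data  # lossless round trip

print(f"{len(data)} bytes -> {len(compressed)} bytes "
      f"({len(compressed) / len(data):.2%} of original)")
```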

10. Take care of incomplete multipart uploads

Object storage buckets often contain parts of objects whose multipart uploads were interrupted and never completed, and these parts still take up billed storage. If you have a petabyte-scale bucket, even 1% of incomplete uploads may end up wasting terabytes of space. You should clean up incomplete uploads after 7 days.
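On S3, the 7-day cleanup can be expressed as a lifecycle rule using the `AbortIncompleteMultipartUpload` action, which aborts the upload and deletes its uploaded parts. This sketch applies the rule to the whole bucket via an empty prefix; it would go in the same `Rules` list as any other lifecycle rules for the bucket.

```python
# Lifecycle rule (S3 PutBucketLifecycleConfiguration shape) that aborts
# multipart uploads still incomplete 7 days after they were started.
abort_incomplete_uploads_rule = {
    "ID": "abort-incomplete-multipart-uploads",
    "Filter": {"Prefix": ""},  # empty prefix: applies to the whole bucket
    "Status": "Enabled",
    "AbortIncompleteMultipartUpload": {"DaysAfterInitiation": 7},
}

lifecycle_configuration = {"Rules": [abort_incomplete_uploads_rule]}
print(lifecycle_configuration)
```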

11. Reduce cost on API access

You can reduce API access costs by batching objects and avoiding large numbers of small files. API calls are charged per request, regardless of object size: uploading 1 byte costs the same as uploading 1 GB. As a result, many small objects can cause API costs to soar.

For example, in Amazon S3, PUT requests cost $0.005 per 1,000. Uploading a 10 GB file in 5 MB chunks (about 2,000 PUTs) therefore costs roughly $0.01, whereas uploading it in 10 KB chunks (about a million PUTs) costs approximately $5.00.
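The chunk-size arithmetic can be reproduced directly. This sketch assumes binary units (1 GB = 2^30 bytes) and one PUT request per chunk at the $0.005 per 1,000 price quoted above.

```python
PUT_PRICE_PER_1000 = 0.005  # S3 PUT price quoted above (USD)


def upload_cost(total_bytes: int, chunk_bytes: int) -> float:
    """PUT request cost of uploading `total_bytes` as `chunk_bytes`-sized pieces."""
    requests = -(-total_bytes // chunk_bytes)  # ceiling division
    return requests / 1000 * PUT_PRICE_PER_1000


GIB, MIB, KIB = 2**30, 2**20, 2**10
print(f"5 MB chunks:  ${upload_cost(10 * GIB, 5 * MIB):.2f}")   # 2,048 PUTs
print(f"10 KB chunks: ${upload_cost(10 * GIB, 10 * KIB):.2f}")  # 1,048,576 PUTs
```

The 10 KB case comes to about $5.24, consistent with the approximate figure above.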

Similarly, it is bad practice to make GET/PUT calls on tiny files in S3. It makes sense to batch objects or to use a database such as DynamoDB or MySQL instead of S3. One write per second of provisioned DynamoDB capacity costs $0.00065/hour.

Assuming 80% utilization (2,880 writes per hour), DynamoDB costs about $0.000226 per 1,000 calls, which is roughly 95% cheaper than Amazon S3 PUTs.
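The DynamoDB figure follows from the stated prices. This sketch assumes each write fits in 1 KB so that it consumes one write capacity unit, and compares against the S3 PUT price quoted earlier in this section.

```python
WCU_PRICE_PER_HOUR = 0.00065   # one provisioned write/second, as quoted above
S3_PUT_PRICE_PER_1000 = 0.005  # S3 PUT price quoted earlier
UTILIZATION = 0.80             # assumed share of provisioned capacity used

writes_per_hour = 3600 * UTILIZATION  # 2,880 writes
dynamo_per_1000 = WCU_PRICE_PER_HOUR / writes_per_hour * 1000
savings = 1 - dynamo_per_1000 / S3_PUT_PRICE_PER_1000

print(f"DynamoDB: ${dynamo_per_1000:.6f} per 1,000 writes; "
      f"{savings:.0%} cheaper than S3 PUTs")
```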

S3 object names are not a database. Relying heavily on S3 LIST calls is poor design, and using a proper database can typically be 10-20 times cheaper.

12. Design workloads for scalability

Scalability is critical in any public cloud. Scalable designs use event-driven compute such as AWS Lambda, or containers on services such as Google Kubernetes Engine (formerly Google Container Engine), to scale core services for important workloads such as microservices.

These techniques are intended to use more compute only when it is needed. Once demand decreases, the related resources are released for reuse.

13. Cache storage strategically

Use memory-based caching, such as Amazon ElastiCache. Caching improves data access by keeping important or frequently accessed data in memory instead of retrieving it from storage instances each time.

This can reduce the expense of higher-tier cloud storage and improve the performance of some applications and websites. It gives the best results for performance-sensitive workloads that run in remote regions, or when efficient replication is needed for resilience.

14. Use auto-scaling to reduce spend during off hours

Most apps have busier and slower periods throughout a week or day. Taking advantage of auto-scaling could save you some money during slow periods.

These deployment types all support auto-scaling:

- Cloud Services

- App Services

- VM Scale Sets (Including Batch, Service Fabric, Container Service)

Scaling could also mean shutting your app down completely. You can use the “Always On” setting provided by App Services, which controls whether the app shuts down when there is no activity. You could also schedule shutdowns of your dev/QA servers with a service such as Azure DevTest Labs.
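Each auto-scaling service has its own rule configuration. As a platform-neutral illustration, here is a proportional scaling rule, the formula documented for the Kubernetes Horizontal Pod Autoscaler, swapped in as an assumption rather than the exact behavior of the services listed above. The instance bounds and target are hypothetical.

```python
import math


def desired_instances(current: int, metric: float, target: float,
                      min_n: int = 1, max_n: int = 10) -> int:
    """Proportional rule: size the fleet so the metric returns to target.

    desired = ceil(current * metric / target), clamped to [min_n, max_n].
    """
    desired = math.ceil(current * metric / target)
    return max(min_n, min(max_n, desired))


print(desired_instances(4, metric=90.0, target=60.0))  # busy: scale out to 6
print(desired_instances(4, metric=15.0, target=60.0))  # quiet: scale in to 1
```

The `min_n` bound is where the cost saving during slow periods comes from; setting it to zero would correspond to shutting the app down completely.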

15. Analyze Amazon S3 usage and reduce costs

Use the recommendations from S3 analytics (Storage Class Analysis), which analyzes storage access patterns on an object data set over 30 days or longer. AWS also offers the Amazon S3 Glacier storage classes, which are purpose-built for archiving data and provide the lowest-cost archive storage in the cloud.

Use these recommendations to move infrequently accessed data to the S3 Standard-Infrequent Access (S3 Standard-IA) storage class and reduce storage costs effectively.

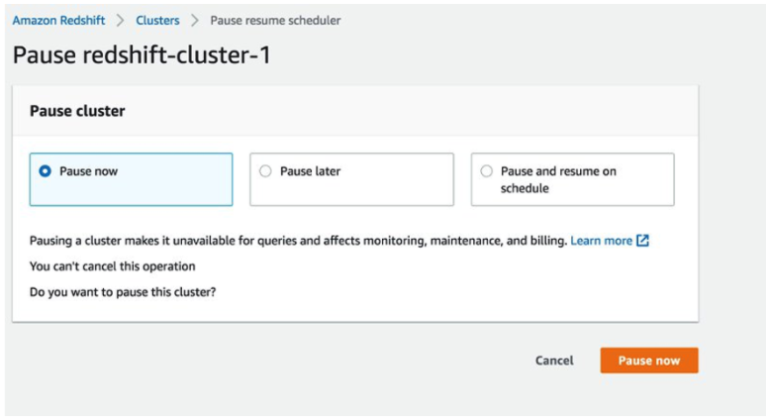

16. Identify underutilized Amazon RDS and Redshift instances

Identify DB instances that have had no connections over the last 7 days using the Trusted Advisor Amazon RDS Idle DB Instances check. You can reduce costs significantly by stopping these DB instances with the Amazon RDS stop and start feature, which lets you stop an instance for up to 7 days at a time.

This is a cost-effective way to use Amazon RDS databases, as you limit costs during periods when you are not using them.

For Redshift, AWS allows you to pause clusters that have had no connections for the last 7 days and less than 5% cluster-wide average CPU utilization for 99% of the previous 7 days.

Identify clusters that were underutilized over the previous 7 days using the Trusted Advisor Underutilized Amazon Redshift Clusters check.
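The criteria stated above can be applied to your own metrics. This sketch assumes you have a 7-day connection count and hourly cluster-wide average CPU datapoints (168 per week) and interprets "99% of the previous 7 days" as 99% of those hourly datapoints; the sample data is made up.

```python
def redshift_pause_candidate(connections_7d: int, hourly_cpu_pct: list[float]) -> bool:
    """Apply the criteria stated above to 7 days of hourly data.

    True if there were no connections in the last 7 days AND the average CPU
    was below 5% in at least 99% of the hourly datapoints.
    """
    if not hourly_cpu_pct:
        return False  # no data: do not recommend pausing
    low = sum(1 for cpu in hourly_cpu_pct if cpu < 5.0)
    return connections_7d == 0 and low / len(hourly_cpu_pct) >= 0.99


week = [1.0] * 167 + [40.0]  # 168 hourly CPU datapoints, one busy hour
print(redshift_pause_candidate(0, week))
```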

AWS cost optimization tools

AWS provides a number of tools to help you track usage data and the cost of your AWS bill, and third-party tools are also available. Some of these can generate AWS cloud cost optimization reports for you as well.

- AWS Cost Explorer – Helps you observe patterns in your AWS bill, forecast future costs, and identify areas that need further improvement. In short, it works as an AWS cost optimization calculator.

- AWS Budgets – Lets you set custom budget limits and get notified when your spending exceeds them. Budgets can be set on tags, accounts, and resource usage.

- Amazon CloudWatch – Besides collecting and tracking metrics, it lets you set custom alarms that automatically react to changes in your AWS resources.

- Cost Optimization Monitor – Automatically processes detailed billing reports so that you get granular metrics that can be searched, analyzed, and visualized in a customizable dashboard.

- CloudZero – A third-party tool that offers fully automated cost anomaly tracking and alerts, and automatically generates resolutions for actionable, straightforward issues.

- EC2 Right Sizing – Quickly analyzes EC2 utilization data and provides recommendations for choosing the correct EC2 instance types.

Conclusion

The key to using the methods above, and any other cost optimization ideas, is to take a proactive approach in your custom software development practices. These are some of the essential cloud cost optimization techniques you can build into your tasks on a regular basis.

We developed this AWS cloud cost optimization guide by closely examining existing costs and identifying the changes that are critical to both estimating and delivering real cost savings. We hope these practices prove useful for your business needs and help your business units make informed decisions.

Say hello to us on Twitter for a detailed consultation of your cloud cost optimization practices and stay informed about more technology updates.