Yesterday, while working with my software testing team, I had an epiphany about how often we get functional testing wrong. Instead of working out a balanced testing strategy, we take the simple approach of ‘testing the product the same way a real customer would use it’. Though it is an enticing goal, emulating the customer experience has its own costs. Functional testing is costly and time-consuming if your organization still does it manually, but those costs can be reduced remarkably by implementing automated functional testing.

When working on software delivery projects, we eventually face the decision of which tests to automate to get a higher ROI. Automating functional testing also requires a pre-defined roadmap and strategy, so that you save time and keep test maintenance manageable. In this blog, you will learn which tests should be automated and get a roadmap for implementing automated functional testing.

Table of Contents

- Why Automate Functional Testing?

- ROI of Automated Functional Testing

- What Tests Should be Automated?

- Automated Functional Testing Process

Why Automate Functional Testing?

There is no question that rigorous functional testing is critical for successful application development. The challenge is how to speed up the testing process without sacrificing accuracy or breaking an already tight budget.

While manual testing is appropriate in some cases, it is a time-consuming and tedious process that is inefficient and conflicts with today’s shorter development cycles. Manual tests are also prone to human error and inconsistencies that can skew test results.

Automated functional testing executes scripted test cases against a software application. For example, your application might have a registration form with a multiple-choice question. A script could submit each answer in turn to ensure the form handles it correctly. When an outcome doesn’t match what the script expects, it is flagged for review. This is a huge time saver and also reduces cost.
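To make the registration-form example concrete, here is a minimal Python sketch. It assumes a hypothetical `submit_registration()` function standing in for the application under test (in a real suite it would drive the UI or call the backend), and the answer options are invented for illustration. The script submits every answer of the multiple-choice question and collects each outcome that differs from what it expects; those are the results flagged for review.

```python
from dataclasses import dataclass

# Hypothetical answer options for the multiple-choice question.
VALID_CHOICES = {"student", "employee", "self-employed", "retired"}


def submit_registration(email: str, occupation: str) -> str:
    """Hypothetical stand-in for the form: returns the status shown after submit."""
    if "@" not in email:
        return "error: invalid email"
    if occupation not in VALID_CHOICES:
        return "error: invalid choice"
    return "registered"


@dataclass
class Mismatch:
    answer: str
    expected: str
    actual: str


def check_every_answer(answers: list[str], expected: str) -> list[Mismatch]:
    """Submit each answer once and collect outcomes that differ from the expectation."""
    flagged = []
    for answer in answers:
        actual = submit_registration("tester@example.com", answer)
        if actual != expected:
            flagged.append(Mismatch(answer, expected, actual))
    return flagged


for m in check_every_answer(sorted(VALID_CHOICES), "registered"):
    print(f"FLAG for review: {m}")
```

An empty result means every answer behaved as expected; anything returned is a candidate defect for a human to inspect.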

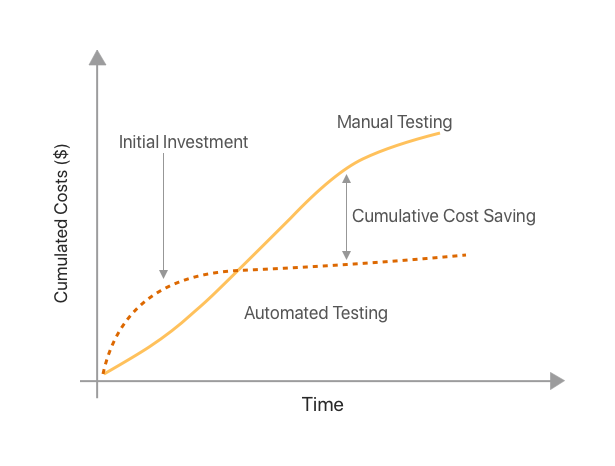

ROI of Automated Functional Testing

When it comes to automating functional testing processes, the costs are tangible, but the net present value also includes many intangible factors. Automation has the most value in situations where you can develop an automated test suite once and then execute it many times. You can dramatically expand test coverage while keeping the same labor cost, and expanded test coverage translates directly into benefits the CFO will understand.

Let’s take an example of a mobile application for which we’ll calculate the cost of automated functional testing and manual testing. The example calculations are based on the following assumptions:

- Test set-up time for each device: 5 minutes. This includes removing the old version of the application, installing the new APK, cleaning the memory card if necessary, cleaning the content database, and rebooting the device.

- The number of test cases is 200. Each test case takes on average about 15 seconds to execute. In total it takes 50 minutes to run all the test cases on each device.

- The number of application variants is 5. There are different application variants for smartphones and tablets as well as country variations.

- On average, 4 bugs are found (out of 200 test cases) in every test run on every device. Each bug is reported with the detailed use case, the application version, and the device specification (make, model, OS version), along with a screenshot of the error and the saved logs. Reporting the bugs from one run takes 40 minutes.

- Testing is done continuously, at least every time a new version is released.

Time spent on 10 devices per test cycle:

- Set-up time (5 applications X 10 devices X 5 min): 250 min

- Runtime of test cases (5 applications X 10 devices X 50 min): 2500 min

- Reporting time (5 applications X 10 devices X 40 min): 2000 min

- Total: 4,750 min ≈ 79.17 hrs, rounded up to 80 hrs.

To calculate the cost of one test cycle, we must estimate the hourly cost of a test engineer. If a test engineer costs $50/h on average, one test cycle costs 80 h X $50/h = $4,000.

If you plan to run one test cycle every week, your monthly budget would be 4 test cycles per month X the cost of one test cycle: 4 X $4,000 = $16,000/month.
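Hand arithmetic like this is easy to get wrong, so here is a small Python sketch that reproduces the manual-testing figures from the stated assumptions. The constant names are illustrative, and the 79.17 hours are rounded up to 80 hours, as in the text. Changing the constants lets you redo the estimate for your own device matrix.

```python
import math

# Inputs taken from the assumptions above; adjust them for your own project.
VARIANTS = 5              # application variants (phone/tablet, country versions)
DEVICES = 10              # devices tested per cycle
SETUP_MIN = 5             # set-up time per variant per device, minutes
TEST_CASES = 200
SECONDS_PER_CASE = 15
REPORT_MIN = 40           # bug-reporting time per run, minutes
HOURLY_RATE = 50          # USD per test-engineer hour
CYCLES_PER_MONTH = 4      # one cycle per week


def manual_cycle_minutes() -> int:
    """Total engineer minutes for one manual test cycle."""
    runs = VARIANTS * DEVICES                          # 50 runs per cycle
    runtime_min = TEST_CASES * SECONDS_PER_CASE // 60  # 50 min per run
    return runs * (SETUP_MIN + runtime_min + REPORT_MIN)


minutes = manual_cycle_minutes()               # 4,750 min
billed_hours = math.ceil(minutes / 60)         # 79.17 h rounded up to 80 h
cycle_cost = billed_hours * HOURLY_RATE        # $4,000 per cycle
monthly_cost = cycle_cost * CYCLES_PER_MONTH   # $16,000 per month

print(f"{minutes} min -> {billed_hours} h -> ${cycle_cost:,}/cycle -> ${monthly_cost:,}/month")
```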

Cost of Automated Testing

- Creation and maintenance of automated test scripts with Testdroid Recorder: 200 test cases X 15 min/test case = 3,000 minutes (50 h); cost: 50 h X $50/h = $2,500/month.

- License cost of the automation tool: $2,000/month

- Total cost: $4,500/month

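Total direct savings are $16,000/month – $4,500/month = $11,500/month.

ROI: (Gains – Investment) / Investment

Here the gain is the manual testing cost that automation replaces ($16,000/month) and the investment is the cost of automation ($4,500/month), so the investment is subtracted only once:

ROI ratio: ($16,000 – $4,500) / $4,500 = $11,500 / $4,500 ≈ 2.56

In our example, the cost of 4 manual test cycles is compared to the cost of a complete automation tool set-up. An ROI of about 2.56 is already substantial, and the case is even stronger for an application such as an e-commerce site, where features are iterated daily and a continuous testing model is employed.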
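The ROI figure can be reproduced with a few lines of Python. This sketch treats the gain as the manual testing cost that automation replaces ($16,000/month), so the $4,500 investment is subtracted exactly once; subtracting it from the net savings a second time would understate the ratio. The variable names are illustrative.

```python
def roi(gain: float, investment: float) -> float:
    """ROI = (gain - investment) / investment."""
    return (gain - investment) / investment


# Monthly figures from the example above (USD).
manual_cost = 16_000                           # 4 manual cycles per month
scripting_hours = 200 * 15 / 60                # 200 test cases x 15 min = 50 h
scripting_cost = scripting_hours * 50          # $2,500 at $50/h
license_cost = 2_000
automation_cost = scripting_cost + license_cost   # $4,500

savings = manual_cost - automation_cost           # $11,500
ratio = roi(manual_cost, automation_cost)         # gain = manual cost avoided

print(f"savings ${savings:,.0f}/month, ROI {ratio:.2f}")
```

Because the formula is a plain function, you can plug in your own tool license and scripting effort to see how quickly automation pays for itself.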

What Tests Should be Automated?

Smoke Tests

I once presented an untested beta version to a user testing group, and it failed miserably. I called the developers to fix the bugs immediately, and they had to search for the root causes from scratch.

Our programmers hated this so much that we introduced an automated smoke test. The test checks whether a new build (or a slice of the program) is available, then installs the beta, and finally checks the basic functions of the product. Now we present only smoke-tested beta versions.

Although we no longer have this issue, we continue to run automated smoke tests on our nightly builds because they give a good understanding of the state of our software. Since the smoke test potentially runs on every build, there is good value in automating it.

Microsoft claims that after code reviews, smoke testing is the most cost-effective method for identifying and fixing defects in software.
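The three steps of the nightly smoke test described above (look for a new build, install it, check the basic functions) can be sketched in Python as follows. This is an illustration under assumptions, not our actual tooling: the installer callback, the check names, and the build file name are hypothetical placeholders.

```python
import tempfile
from pathlib import Path
from typing import Callable


def run_smoke_test(build: Path,
                   install: Callable[[Path], None],
                   checks: dict[str, Callable[[], bool]]) -> list[str]:
    """Return the names of failed steps; an empty list means the build passed."""
    # 1. Is there a new build to test at all?
    if not build.exists():
        return ["build-present"]
    # 2. Install the beta (the installer is supplied by the caller).
    install(build)
    # 3. Exercise only the basic functions, not the full regression suite.
    return [name for name, check in checks.items() if not check()]


with tempfile.TemporaryDirectory() as tmp:
    build = Path(tmp) / "app-nightly.apk"   # hypothetical build artifact
    build.write_bytes(b"")
    installed: list[Path] = []
    failures = run_smoke_test(
        build,
        install=installed.append,
        checks={"app-starts": lambda: bool(installed),   # hypothetical checks
                "login-page-loads": lambda: True},
    )
    print("PASS" if not failures else f"FAIL: {failures}")
```

Keeping the checks few and fast is the point: the smoke test only decides whether a build is worth presenting or testing further.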

Regression Tests

Regression tests are ideal for automation because they are monotonous and numerous. Automating regression tests can significantly reduce the time needed to test an application. One of our clients, a financial organization, reaped huge benefits from automation testing.

We were working on the second build of their web application, which had new features being implemented daily. Bug fixes, improvements, and integration and compatibility issues all required code changes, so in every release we spent regression cycles re-verifying previously implemented features.

These regression cycles required a significant amount of resources and proved to be a very expensive part of the release cycle. Just imagine dozens of new features and hundreds of customer-requested bug fixes on hold for several months.

Our automation process enabled us to achieve consistency of automation practices across the financial app. We showed a benefit of $86,000 after 8 months. This benefit calculation method was reviewed by our client at the highest level. The total amount invested in the testing project including the automation part was $42,000. The amount spent on automation was less than 15% of this total budget, including the acquisition of functional testing tools, consulting, and the creation and execution of automated test scripts.

The benefit calculation was based primarily on the time saved in QA. For example, regression testing one of our internal projects, an employee management system, took on average 2 weeks with 10 people. With automation, we reduced that process to 2 days with two people: a reduction from 700 man-hours to 28 man-hours. This translated to financial savings of about $120,500 per regression cycle.

Integration Tests

Integration testing checks the functionality of individual units when they are combined with other units. Integration tests specifically target the integration points between components and their dependencies, reflecting the natural dependence between units in object-oriented systems.

When testers run integration tests in the context of functional testing, the tests include dependent components such as databases, web services, and APIs. These dependencies make integration testing time-consuming and complex. Automated integration testing addresses much of this: it lets development teams keep working on a product while it is being tested, and automated integration tests are fast, reliable, and far less prone to human mistakes.
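As an illustration, the Python sketch below tests two units together with their database dependency, using an in-memory SQLite database from the standard library in place of a production database. `UserRepository` and `RegistrationService` are hypothetical units invented for this example; the point is that the test exercises the real integration point rather than a mock.

```python
import sqlite3
import unittest


class UserRepository:
    """Data-access unit: talks to the database."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self.conn = conn
        self.conn.execute("CREATE TABLE IF NOT EXISTS users (email TEXT PRIMARY KEY)")

    def add(self, email: str) -> None:
        self.conn.execute("INSERT INTO users (email) VALUES (?)", (email,))

    def exists(self, email: str) -> bool:
        row = self.conn.execute("SELECT 1 FROM users WHERE email = ?", (email,)).fetchone()
        return row is not None


class RegistrationService:
    """Business-logic unit that depends on the repository."""

    def __init__(self, repo: UserRepository) -> None:
        self.repo = repo

    def register(self, email: str) -> bool:
        if "@" not in email or self.repo.exists(email):
            return False
        self.repo.add(email)
        return True


class RegistrationIntegrationTest(unittest.TestCase):
    def setUp(self) -> None:
        # A real (in-memory) database, not a mock: the integration point is under test.
        self.conn = sqlite3.connect(":memory:")
        self.service = RegistrationService(UserRepository(self.conn))

    def tearDown(self) -> None:
        self.conn.close()

    def test_registered_user_is_persisted(self) -> None:
        self.assertTrue(self.service.register("a@example.com"))
        self.assertTrue(self.service.repo.exists("a@example.com"))

    def test_duplicate_registration_is_rejected(self) -> None:
        self.service.register("a@example.com")
        self.assertFalse(self.service.register("a@example.com"))
```

Saved to a file, this runs with `python -m unittest <file>`; in CI the same command runs on every commit, which is where the speed of automation pays off.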

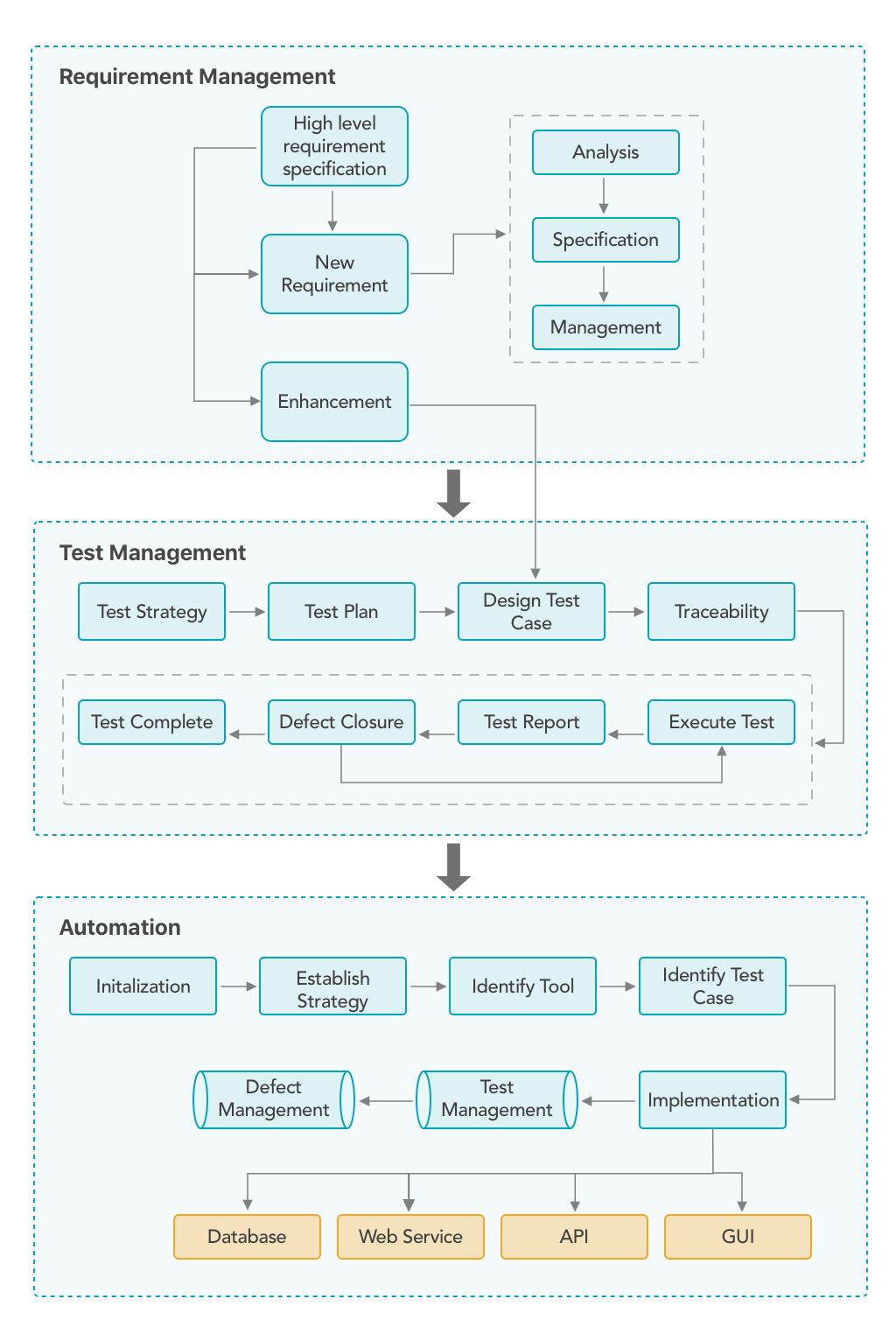

Automated Functional Testing Process

Many software teams are turning to test automation to cope with continuous integration/continuous delivery (CI/CD), a common delivery framework for agile teams. Although automation can eventually achieve the efficiency that enterprises need for critical, repetitive, and complex test processes, it can become a disaster without planning and analysis.

Building a QA Team with Diverse Expertise

“Many failures I see with automation are not caused by technical issues, but rather by a company’s cultural issues.”

To automate the functional testing you will need to assemble a dedicated test team which has a blend of testing, programming, and tool knowledge. It is important to match the skills of the persons in your team with the responsibilities of their role.

For example, writing automated test scripts requires expert knowledge of scripting languages. Thus, in order to perform these tasks, you should have QA engineers that know the script language provided by the automated testing tool. Some team members might not be versed in writing automated test scripts, but that is okay. These QA engineers might be better at writing test cases.

Choosing the Automated Testing Approach

Several methodologies exist for creating automated functional tests:

Test modularity

This approach divides the application under test into script components or modules. Using the scripting language of the automated testing solution, QA builds an abstraction layer in front of each component—in effect, hiding it from the rest of the application.
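A minimal Python sketch of test modularity follows. `FakeDriver` is a hypothetical stand-in for a real UI automation driver (it only records calls), and the locators are invented. `LoginModule` is the abstraction layer in front of the login component: test scripts call `log_in()` and never touch the locators directly.

```python
class FakeDriver:
    """Hypothetical stand-in for a UI automation driver; it only records calls."""

    def __init__(self) -> None:
        self.fields: dict[str, str] = {}
        self.clicked: list[str] = []

    def type_text(self, locator: str, text: str) -> None:
        self.fields[locator] = text

    def click(self, locator: str) -> None:
        self.clicked.append(locator)


class LoginModule:
    """Abstraction layer in front of the login component.

    Only this class knows the login screen's locators, so a UI change there
    is fixed in one place instead of in every test script.
    """

    USER = "#username"      # hypothetical locators
    PASSWORD = "#password"
    SUBMIT = "#login"

    def __init__(self, driver: FakeDriver) -> None:
        self.driver = driver

    def log_in(self, user: str, password: str) -> None:
        self.driver.type_text(self.USER, user)
        self.driver.type_text(self.PASSWORD, password)
        self.driver.click(self.SUBMIT)


def login_script(driver: FakeDriver) -> None:
    """A larger test script built from modules; it never touches raw locators."""
    LoginModule(driver).log_in("alice", "s3cret")


driver = FakeDriver()
login_script(driver)
print(driver.fields, driver.clicked)
```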

Test library architecture

It is another scripting-based framework like “Test Modularity”. The difference is that the test library architecture framework describes modules in procedures and functions rather than scripts—enabling even greater modularity, maintainability, and reusability.
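By contrast, a test library architecture collects reusable procedures in a shared library, and each test script becomes a short sequence of library calls. The sketch below assumes a hypothetical `ShopApp` as the application under test; `log_in_as`, `add_items`, and `expect_cart` are illustrative library functions, not part of any real tool.

```python
from typing import Optional


class ShopApp:
    """Hypothetical application under test."""

    def __init__(self) -> None:
        self._users = {"alice": "s3cret"}
        self.user: Optional[str] = None
        self.cart: list[str] = []

    def submit_login(self, user: str, password: str) -> None:
        if self._users.get(user) == password:
            self.user = user

    def add_item(self, sku: str) -> None:
        if self.user is None:
            raise PermissionError("not logged in")
        self.cart.append(sku)


# ---- Shared test library: procedures reused by many test scripts ----
def log_in_as(app: ShopApp, user: str, password: str) -> None:
    app.submit_login(user, password)
    if app.user != user:
        raise AssertionError(f"login failed for {user}")


def add_items(app: ShopApp, *skus: str) -> None:
    for sku in skus:
        app.add_item(sku)


def expect_cart(app: ShopApp, *skus: str) -> None:
    if app.cart != list(skus):
        raise AssertionError(f"cart is {app.cart}, expected {list(skus)}")


# ---- Test script: a short sequence of library calls ----
def checkout_two_items_script() -> None:
    app = ShopApp()
    log_in_as(app, "alice", "s3cret")
    add_items(app, "SKU-1", "SKU-2")
    expect_cart(app, "SKU-1", "SKU-2")


checkout_two_items_script()
```

When the login flow changes, only `log_in_as` needs updating, and every script that calls it keeps working.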

Keyword-driven testing

Keyword-driven testing is an application-independent framework that uses easy-to-understand “keywords” to describe the actions to be performed on the application under test. The actions and keywords are independent of both the automated testing solution that executes them and the test scripts that drive the application and its data.
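The idea can be illustrated with a toy keyword interpreter in plain Python. The keywords, their implementations, and the shopping-cart "application" (a plain dictionary) are all hypothetical. The test case itself is just rows of keywords and arguments, which could equally live in a spreadsheet maintained by someone who does not write code.

```python
from typing import Callable

Context = dict  # shared state for one test run (hypothetical stand-in for the app)


def open_app(ctx: Context) -> None:
    ctx.update(user=None, cart=[])


def log_in(ctx: Context, user: str) -> None:
    ctx["user"] = user


def add_to_cart(ctx: Context, sku: str) -> None:
    ctx["cart"].append(sku)


def verify_cart_size(ctx: Context, expected: str) -> None:
    actual = len(ctx["cart"])
    if actual != int(expected):
        raise AssertionError(f"cart has {actual} items, expected {expected}")


# Keyword table: plain words mapped to their implementations.
KEYWORDS: dict[str, Callable[..., None]] = {
    "Open App": open_app,
    "Log In": log_in,
    "Add To Cart": add_to_cart,
    "Verify Cart Size": verify_cart_size,
}

# A test case written only with keywords and arguments.
TEST_CASE = [
    ("Open App",),
    ("Log In", "alice"),
    ("Add To Cart", "SKU-1"),
    ("Add To Cart", "SKU-2"),
    ("Verify Cart Size", "2"),
]


def run_keyword_test(steps) -> Context:
    """Execute each (keyword, *args) row in order against a fresh context."""
    ctx: Context = {}
    for keyword, *args in steps:
        KEYWORDS[keyword](ctx, *args)
    return ctx


run_keyword_test(TEST_CASE)
```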

Data-driven testing

Data-driven testing is a testing framework that stores data in an external file (such as a spreadsheet) instead of hard-coding data into test scripts. With this approach, a single script can test all the desired data values.
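Here is a minimal data-driven sketch in Python. One script reads rows of inputs and expected results and runs the same check for each row. In practice the rows would come from an external CSV file or spreadsheet export; an in-memory string keeps the example self-contained. The `log_in()` function and the accounts are hypothetical.

```python
import csv
import io

# In practice: an external file such as a spreadsheet export (hypothetical data).
LOGIN_CASES = """username,password,expected
alice,s3cret,ok
alice,wrong,denied
,s3cret,denied
bob,hunter2,ok
"""

USERS = {"alice": "s3cret", "bob": "hunter2"}  # hypothetical accounts


def log_in(username: str, password: str) -> str:
    """Hypothetical system under test."""
    return "ok" if USERS.get(username) == password else "denied"


def run_data_driven(source) -> list[dict]:
    """One script, many data rows: return rows whose result differs from 'expected'."""
    failures = []
    for row in csv.DictReader(source):
        actual = log_in(row["username"], row["password"])
        if actual != row["expected"]:
            failures.append({**row, "actual": actual})
    return failures


print(run_data_driven(io.StringIO(LOGIN_CASES)))  # [] when every row passes
```

Adding a new test case means adding a row of data, not writing a new script.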

Record/playback testing

This approach eliminates the need for scripting in order to capture a test. It starts by recording the inputs of manual interactions with the application under test. These recorded inputs are used to generate automated test scripts that can be replayed and executed later.
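Real record/playback tools capture events directly from the browser or device. In the Python sketch below, the recording is simulated by calling `record()` by hand, and the replay handlers are hypothetical callbacks standing in for a driver. It shows the core mechanism: recorded inputs are saved as a script (JSON here) that can later be replayed event by event.

```python
import json
from typing import Callable


class Recorder:
    """Captures manual interactions as (action, target, value) events."""

    def __init__(self) -> None:
        self.events: list[dict] = []

    def record(self, action: str, target: str, value: str = "") -> None:
        self.events.append({"action": action, "target": target, "value": value})

    def save(self) -> str:
        # The recorded inputs become a replayable script (JSON text here).
        return json.dumps(self.events)


def replay(script: str, handlers: dict[str, Callable[[str, str], None]]) -> int:
    """Re-execute a recorded script; return the number of replayed events."""
    events = json.loads(script)
    for event in events:
        handlers[event["action"]](event["target"], event["value"])
    return len(events)


# "Recording" a manual session (simulated).
rec = Recorder()
rec.record("type", "#search", "usb cable")
rec.record("click", "#search-button")
script = rec.save()

# Replaying it later against hypothetical handlers.
form: dict[str, str] = {}
clicks: list[str] = []
n = replay(script, {
    "type": lambda target, value: form.__setitem__(target, value),
    "click": lambda target, _value: clicks.append(target),
})
print(n, form, clicks)
```

Because the script stores fixed locators and values, a recorded test tends to break when the UI changes, which is the main maintenance cost of this approach.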

Whichever approach you choose, ask the following questions about each test case before automating it:

- How often is the test run? Would you benefit from having it run more often?

A common test, like making sure you can log in, is simple and often easy to automate. It is also valuable to find out right away if you cannot log in, so such a test could be run after every single build (as in a smoke test). A test that only needs to be run once before release isn’t likely to be worth automating.

- How much data needs to be entered for the test?

Many tests require adding thousands of entries to the database, which is a real pain to do manually but no problem for an automated test. In general, the more data a test enters, the better a candidate it is for automation.

- Is the output of the test easily measured?

If you can easily tell whether a test succeeded or failed, then it’s a good test to automate. Tests which require multiple cross-references to know the output are difficult to automate.

- Does the UI change frequently?

An automated testing tool can handle some changes to UI elements, but UIs commonly change frequently over an application’s lifecycle, and many elements depend on other elements. If you automate tests against such a UI, you will have to modify the automated scripts frequently.

- Does the test utilize any customized controls?

If a test uses ordinary buttons, edit boxes, combo boxes, or grids, it is a good candidate for automation. Custom controls are more difficult. That is not to say custom controls cannot be automated, but you may get better results by automating the more standard parts first.

- Does the test invite exploration of corner cases or improvisation?

An automated test can only do what it is instructed to do. It is not creative and won’t explore corner cases.

Based on the above questions, here are the criteria for selecting the test cases to automate.

Test cases that should be automated:

- Tests are used repeatedly.

- Tests involve a lot of data entry.

- Tests clearly pass or fail.

- Tests deliver an exact result.

- Tests use consistent UI and regular controls.

- Tests only need to do what they’re told, without checking anything else.
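To apply these criteria consistently across a large backlog, you could score each candidate test. The Python sketch below is one possible scoring, not a standard method: the field names, the 100-entry threshold for "a lot of data", and the equal weighting are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class CandidateTest:
    """Answers to the selection questions for one test (fields are illustrative)."""
    runs_per_release: int        # how often the test is executed
    data_entries: int            # records or fields entered per run
    clear_pass_fail: bool        # exact, easily measured result
    ui_changes_often: bool
    uses_custom_controls: bool
    exploratory: bool            # needs improvisation or corner-case hunting


def automation_score(t: CandidateTest) -> int:
    """Count how many automation criteria a test meets (0-6); higher is better."""
    criteria = [
        t.runs_per_release > 1,       # used repeatedly
        t.data_entries >= 100,        # lots of data entry (threshold is arbitrary)
        t.clear_pass_fail,            # clearly passes or fails
        not t.ui_changes_often,       # consistent UI
        not t.uses_custom_controls,   # regular controls
        not t.exploratory,            # only does what it is told
    ]
    return sum(criteria)


login_smoke = CandidateTest(runs_per_release=30, data_entries=2, clear_pass_fail=True,
                            ui_changes_often=False, uses_custom_controls=False,
                            exploratory=False)
print(automation_score(login_smoke))
```

Sorting the backlog by this score gives a first-pass automation order that the team can then adjust by judgment.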

Test cases that are poor candidates for automation:

- Exploratory tests

- UX tests

- UI tests for interfaces that change frequently

Create the Test Data

When planning your test, it’s important to be aware of what test data your tests need. Many times, tests run against different environments that might not have the data you expect, so make sure to have a test data management strategy in place.

To ensure correct and precise results for each function or module under test, it is preferable to create the test data in parallel with the other activities of a given test phase. This ensures that appropriate test data is available for each testing process. Test data can be generated with insert operations against the database or simply through the application’s user interface.
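Generating test data through database inserts might look like the Python sketch below, which uses the standard-library `sqlite3` module. The `customers`/`orders` schema and the helper name are hypothetical. The data is small and predictable, so that assertions in the tests can be exact.

```python
import sqlite3


def create_order_test_data(conn: sqlite3.Connection, n_customers: int = 3) -> None:
    """Insert a small, known data set for the order-module tests (hypothetical schema)."""
    conn.executescript("""
        CREATE TABLE IF NOT EXISTS customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
        CREATE TABLE IF NOT EXISTS orders (
            id INTEGER PRIMARY KEY,
            customer_id INTEGER NOT NULL REFERENCES customers(id),
            total_cents INTEGER NOT NULL
        );
    """)
    customers = [(i, f"Test Customer {i}") for i in range(1, n_customers + 1)]
    conn.executemany("INSERT INTO customers (id, name) VALUES (?, ?)", customers)
    # One order per customer with a predictable total: customer i spends i * $10.
    orders = [(cid, cid * 1000) for cid, _ in customers]
    conn.executemany("INSERT INTO orders (customer_id, total_cents) VALUES (?, ?)", orders)
    conn.commit()


conn = sqlite3.connect(":memory:")
create_order_test_data(conn)
print(conn.execute("SELECT COUNT(*), SUM(total_cents) FROM orders").fetchone())
```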

Set up the Test Environment

A testing environment is a setup of software and hardware for the testing teams to execute test cases. In other words, it supports test execution with hardware, software and network configured. Generally, I divide this process into three parts:

- Setup

- Execution: Given X, When Y happens, Then expect Z

- Teardown

This actually occurs at two (or more) levels:

Entire test suite

Certain files, constants, database commands, etc. need to be set up or run before the entire test suite. Often there is no ‘tear-down’ at this level.

Each test

Each test should also have the three stages of setup, execution, and teardown. One of the primary concerns here is the database. Initially, it should be empty. When the test suite starts, it is seeded with common data; then, when an individual test runs, it is populated with the specific data set for that test. After the test runs, it is important to roll back the DB transaction or, if there is no suite-wide seed data, to empty the DB again.
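Python’s built-in `unittest` maps onto these levels: `setUpClass`/`tearDownClass` run once around a group of tests (a test class; `setUpModule` covers a whole module), and `setUp`/`tearDown` run around each test. In this sketch, the suite level seeds a hypothetical `products` table once, and each test writes its own data inside a transaction that `tearDown` rolls back, following the Given/When/Then pattern.

```python
import sqlite3
import unittest


class ProductTests(unittest.TestCase):
    @classmethod
    def setUpClass(cls) -> None:
        # Suite level: create the schema and seed common data once.
        # isolation_level=None turns off implicit transactions so we control them.
        cls.conn = sqlite3.connect(":memory:", isolation_level=None)
        cls.conn.execute("CREATE TABLE products (sku TEXT PRIMARY KEY, price_cents INTEGER)")
        cls.conn.execute("INSERT INTO products VALUES ('SKU-1', 500)")

    @classmethod
    def tearDownClass(cls) -> None:
        cls.conn.close()

    def setUp(self) -> None:
        # Test level: open a transaction, then add this test's specific data.
        self.conn.execute("BEGIN")
        self.conn.execute("INSERT INTO products VALUES ('SKU-TMP', 100)")

    def tearDown(self) -> None:
        # Roll back to the seeded state so the next test starts clean.
        self.conn.execute("ROLLBACK")

    def test_given_seed_and_test_data_when_counting_then_expect_two(self) -> None:
        (count,) = self.conn.execute("SELECT COUNT(*) FROM products").fetchone()
        self.assertEqual(count, 2)

    def test_given_tmp_product_when_deleted_then_only_seed_remains(self) -> None:
        self.conn.execute("DELETE FROM products WHERE sku = 'SKU-TMP'")
        (count,) = self.conn.execute("SELECT COUNT(*) FROM products").fetchone()
        self.assertEqual(count, 1)
```

Both tests pass in either order, because each one starts from the same seeded state; run the file with `python -m unittest <file>`.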

Other

There may also be groupings of tests that require specific setup, for example, a specific service to be running.

Continuous integration tools such as Jenkins or CircleCI, which can run your tests on cloud-hosted infrastructure, can also be expected to run these setup scripts and tasks.

Maintenance of Test Suite

To facilitate the maintenance of your automated test suites, locate your test assets in a repository that can be accessed by the development team. Many test automation environments provide test management tools that make it easier to organize and access your test assets by maintaining the test assets (test cases, test scripts, and test suites) in a common repository.

In addition, many automation test tools enforce some form of access control. This eases the maintenance burden by protecting the integrity of your test suites. For example, you may grant stakeholders and managers read-only access, while developers and testers at the practitioner level have read/write access.

Conclusion

With the right planning and effort, automated functional testing can optimize software quality by verifying the accuracy and reliability of an application’s end-user functionality in pre-production. By automating functional testing, you can take major steps forward in your ability to improve both the user experience and the quality of your application. Both the development and QA teams can increase the speed and accuracy of their testing processes, which ultimately helps achieve a higher ROI.
